HiddenLayer, a Gartner recognized Cool Vendor for AI Security, is the leading provider of Security for AI. Its security platform helps enterprises safeguard the machine learning models behind their most important products. HiddenLayer is the only company to offer turnkey security for AI that does not add unnecessary complexity to models and does not require access to raw data and algorithms. Founded by a team with deep roots in security and ML, HiddenLayer aims to protect enterprise’s AI from inference, bypass, extraction attacks, and model theft. The company is backed by a group of strategic investors, including M12, Microsoft’s Venture Fund, Moore Strategic Ventures, Booz Allen Ventures, IBM Ventures, and Capital One Ventures.

Protection to Facilitate

Enable real-time monitoring, detection, and response to threats specific to LLMs.

Ensure that LLM deployments can be managed securely, mitigating the risk of data leaks, and malicious use.

The Challenges

Optimizing Gen AI Adoption with Security

In the fast-paced world of GenAI, cyber attacks move quickly. Real-time protection demands comprehensive measures to mitigate threats. Additionally, navigating complex ai security frameworks adds complexity and requires compliance. Balancing real-time protection, effective threat mitigation, and regulatory compliance is imperative for fostering a resilient and responsible GenAI ecosystem.

Cyber Attacks

LLMs must be protected from tampering, prompt injection attacks, PII leakage, toxicity, inference attacks, and model theft

Integration Into MLOps Tools

Adoption of security tools for GenAI demands integration options with modern MLOps tooling ensuring data scientists and ML engineers can adopt the controls without friction

Generative AI Deployment

GenAI deployment risks include regulatory issues, penalties, legal consequences, and reputational damage when compromised by an adversarial attack

Our Approach

Automated, Scalable, and Unobtrusive

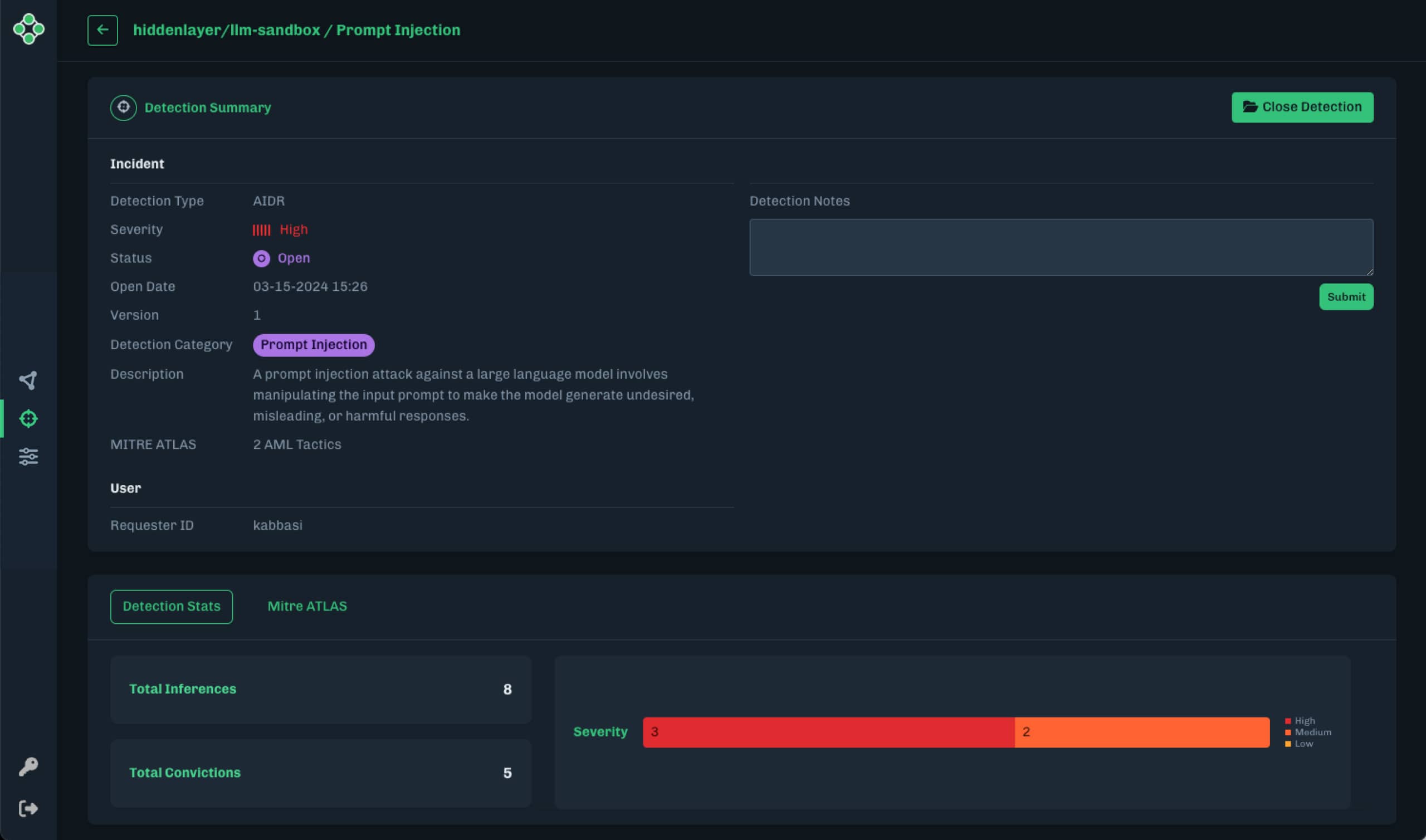

HiddenLayer’s AI Detection & Response for GenAI enhances LLM adoption by seamlessly integrating with your current security infrastructure. It complements your existing security stack and empowers you to automate and scale the protection of both LLMs and traditional AI models, ensuring their security in real-time.

With AI Detection and Response for GenAI integrated into your environment, you can facilitate LLM adoption while proactively defending against threats to your LLMs.

Real-time Protection

Continuous Assurance

Ensures your systems are resistant to prompt injection attacks, PII leakage, inappropriate privilege escalation, or code execution with our ai security best practices.

Threat Mitigation

Remain Proactive

Proactively mitigates cybersecurity risks in real-time as part of the MLOps Lifecycle, ensuring a secure and efficient workflow and maps all alerts to the MITRE ATLAS and LLM OWASP frameworks.

Regulatory Compliance

Ensure Integrity

Supports the majority of LLMs including: GPT-X, LlaMa, Mistral, and internally built LLMs out-of-the-box, among other model types, and integrates into existing deployment frameworks with relative ease.

Provide real-time cyber protection for GenAI by safeguarding against prompt injection, PII leakage, evasion, and model theft.

Learn more about what AI Detection & Response can offer.

On average, companies have

1,689 models

in production

According to recent HiddenLayer research

Why HiddenLayer

The Ultimate Security for AI Platform

HiddenLayer, a Gartner recognized AI Application Security company, is the only platform provider of security solutions for Gen AI, LLMs, and traditional models. With a first-of-its-kind, non-invasive software approach to observing & securing GenAI, HiddenLayer is helping to protect the world’s most valuable technologies.

- MITRE ATLAS & OWASP Top 10 for LLMs — AI Detection & Response for GenAI maps all detection to these frameworks.

- Protects against Model Tampering — Know where the model is weak and when the model has been tampered with.

- Protects against Data Poisoning/Model Injection — Protect the model from its inputs or outputs being deliberately changed.

- Protects against Theft — Stop reconnaissance attempts through inference attacks, which could result in your model intellectual property being stolen.

- Uses a combination of Supervised Learning, Unsupervised Learning, Dynamic/Behavioral Analysis, and Static Analysis to deliver detection for a library of adversarial machine learning attacks

- Prompt Injection — Ensure inputs to your LLM do not cause unintended consequences.

- Excessive Agency — Ensure LLM outputs do not expose backend systems, risking privilege escalation or remove code execution.

The Latest From HiddenLayer

Read more in our full research section or sign up for our occasional email newsletter and we’ll make sure you’re first in the know.

How can we secure your AI?

Start by requesting your demo and let’s discuss protecting your unique AI advantage.