AI System Reconnaissance

December 5, 2024

This emphasizes the need for extensive collaboration between cybersecurity and data science teams to ensure MLOps platforms are securely configured and protected like any other critical asset. Additionally, we advocate for using an AI-specific bill of materials (AIBOM) to monitor and safeguard all AI systems within an organization.

It’s important to note that our findings highlight the risks of misconfigured platforms, not the ClearML platform itself. ClearML provides detailed documentation on securely deploying its platform, and we encourage its proper use to minimize vulnerabilities.

Introduction

In February 2024, HiddenLayer’s SAI team disclosed vulnerabilities in MLOps platforms and emphasized the importance of securing these systems. Following this, we deployed several honeypots—publicly accessible MLOps platforms with security monitoring—to understand real-world attacker behaviors.

Our ClearML honeypot recently exhibited suspicious activity, prompting us to share these findings. This serves as a reminder of the risks associated with unsecured MLOps platforms, which, if compromised, could cause significant harm without requiring access to other systems. The potential for rapid, unnoticed damage makes securing these platforms an organizational priority.

Honeypot Set-Up and Configuration

Setting up the honeypots

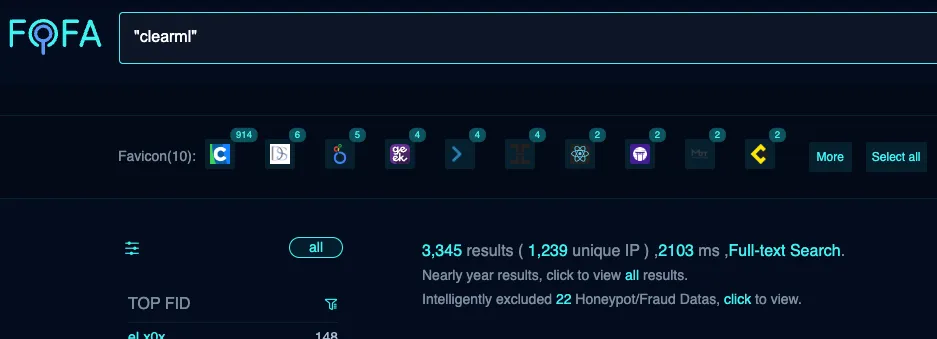

Let’s look at the setup of our ClearML honeypot. In our research, we identified plenty of public-facing, self-hosted ClearML instances exposed on the Internet (as shown further down in Figure 1). Although a legitimate way to run your operation, this has to be done securely to avoid potential breaches. Our ClearML honeypot was intentionally left vulnerable to simulate an exposed system, mimicking configurations often observed in real-world environments. However, please note that the ClearML documentation goes into great detail, showing different ways of configuring the platform and how to do so securely.

Log analysis setup and monitoring

For those readers who wish to implement monitoring and alerting but are not familiar with the process of setting this up, here is a quick overview of how we went about it.

We configured a log analytics platform and ingested and appropriately indexed all the available server logs, including the web server access logs, which will be the main focus of this blog.

We then created detection rules based on unexpected and anomalous behaviors. This allowed us to identify patterns indicative of potential attacks. These detections included but was not limited to:

- Login related activity;

- Commands being run on the server or worker system terminals;

- Models being added;

- Tasks being created.

We then set up alerting around these detection rules, enabling us to promptly investigate any suspicious behavior.

Observed Activity: Analyzing the Incident

Alert triage and investigation

While reviewing logs and alerts periodically, we noticed – unsurprisingly – that there were regular connections from scanning tools such as Censys, Palo Alto’s Xpanse, and ZGrab.

However, we recently received an alert at 08:16 UTC for login-related activity. When looking into this, the logs revealed an external actor connected to our ClearML honeypot with a default user_key, ‘EYVQ385RW7Y2QQUH88CZ7DWIQ1WUHP’. This was likely observed in the logs because somebody had logged onto our instance, which has no authentication in place—only the need to specify a username.

Searching the logs for other connections associated with this user ID, we found similar activity around twenty-five minutes earlier, at 07.50. We received a second alert for the same activity at 08:49 and again saw the same user ID.;

As we continued to investigate the surrounding activity, we observed several requests to our server from all three scanning tools mentioned above, all of which happened between 07:00 and 07:30… Could these alerts have been false positives where an automated Internet scan hit one of the URLs we monitored? This didn’t seem likely, as the scanning activity didn’t align correctly with the alerting activity.

Tightening the focus back to the timestamps of interest, we observed similar activity in the ClearML web server logs surrounding each. Since there was a higher quantity of requests to multiple different URLs than would be possible for a user to browse manually within such a short space of time, it looked at first like this activity may have been automated. However, when running our own tests, the activity we saw was actually consistent with a user logging into the web interface, with all these requests being made automatically at login.;

Other log activity indicating a persistent connection to the web interface included regular GET requests for the file version.json. When a user connects to the ClearML instance, the first request for the version.json file receives a status code of 200 (‘Successful’), but the following requests receive a 304 (‘Not Modified’) status code in response. A 304 is essentially the server telling the client that it should use a cached version of the resource because it hasn’t changed since the last time it was accessed. We observed this pattern during each of the time windows of interest.

The most important finding was made when looking through the web server logs for requests made between 07.30 and 09.00. Unlike previous scanning tools, we noticed anomalous requests that matched the unsanctioned login and browsing activity. These were successful connections to the web server, where the Referrer was specified as “https[://]fofa[.]info.” These were seen at 07.50, 08.51, and 08.52.

Unfortunately, the IP addresses we saw in relation to the connections were AWS EC2 instances, so we are unable to provide IOCs for these connections. The main items that tied these connections together were:

- The user agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36

- This is the only time we have seen this user agent string in the logs during the entire time the ClearML honeypot has been up; this version of Chrome is also almost a year out of date.

- The connections were redirected through FOFA. Again, this is something that was only seen in these connections.

The importance of FOFA in all of this

FOFA stands for Fingerprint of All and is described as “a search engine for mapping cyberspace, aimed at helping users search for internet assets on the public network.” It is based in China and can be used as a reconnaissance tool within a red teamer’s toolkit.

There were four main reasons we placed such importance on these connections:

- The connections associated with FOFA occurred within such close proximity to the unsanctioned browsing.

- The FOFA URL appearing within the Referrer field in the logs suggests the user leveraged FOFA to find our ClearML server and followed the returned link to connect to it. It is, therefore, reasonable to conclude that the user was searching for servers running ClearML (or at the very least an MLOps platform), and when our instance was returned, they wanted to take a look around.

- We searched for other connections from FOFA across the logs in their entirety, and these were the only three requests we saw. This shows that this was not a regular scan or Internet noise, such as those requests observed coming from Censys, Xpanse, or ZGrab.

- We have not seen such requests in the web server logs of our other public-facing MLOps platforms. This indicates that ClearML servers might have been specifically targeted, which is what primarily prompted us to write this blog post about our findings.

What Does This Mean For You?

While all this information may be an interesting read, as stated above, we are putting it out there so that organizations can use it to mitigate the risks of a breach. So, what are the key points to take away from this?

Possible consequences

Aside from the activity outlined above, we saw no further malicious or suspicious activity.

That said, information can still be gathered from browsing through a ClearML instance and collecting data. There is potential for those with access to view and manipulate items such as:

- model data;

- related code;

- project information;

- datasets;

- IPs of connected systems such as workers;

- and possibly the usernames and hostnames of those who uploaded data within the description fields.

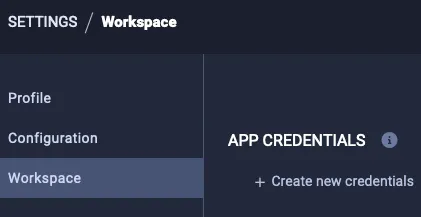

On top of this, and perhaps even more concerningly, the actor could set up API credentials within the UI:

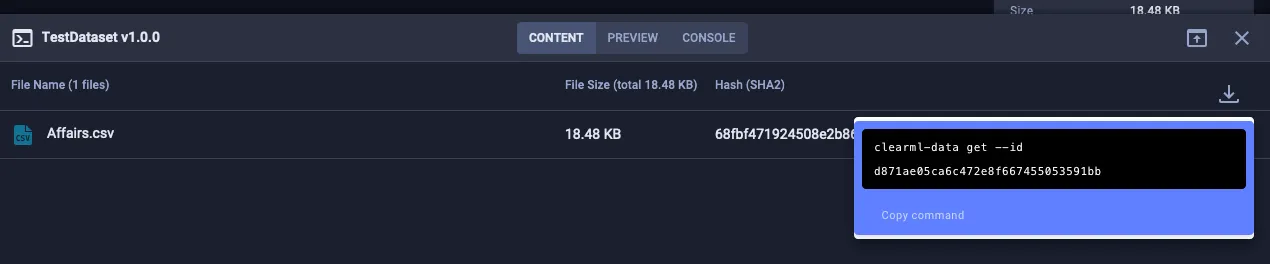

From here, they could leverage the CLI or SDK to take actions such as downloading datasets to see potentially sensitive training data:

They could also upload files (such as datasets or model files) and edit an item’s description to trick other platform users into believing a legitimate user within the target organization performed a particular action.

This is by no means exhaustive, but each of these actions could significantly impact any downstream users—and could go unnoticed.

It should also be noted that an actor with malicious intent who can access the instance could take advantage of known vulnerabilities, especially if the instance has not been updated with the latest security patches.;

Recommendations

While not entirely conclusive, the evidence we found and presented here may indicate that an external actor was explicitly interested in finding and collecting information about ClearML systems. It certainly shows an interest in public-facing AI systems, particularly MLOps platforms. Let this blog serve as a reminder that these systems need to be tightly secured to avoid data leakage and ML-specific attacks such as data poisoning. With this in mind, we would like to propose the following recommendations:

Platform configuration

- When configuring any AI systems, ensure all local and global regulations are adhered to, such as the EU Artificial Intelligence Act.

- Add the platform to your AIBOM. If you don’t have one, create one so AI systems can be properly tracked and monitored.

- Always follow the vendor's documentation and ensure that the most appropriate and secure setup is being used.

- Ensure locally configured MLOps platforms are only made publicly accessible when required.

- Keep the system updated and patched to avoid known vulnerabilities being used for exploitation.

- Enforce strong credentials for users, preferably using SSO or multifactor authentication.

Monitoring and alerting

- Ensure relevant system and security engineers are aware of the asset and that relevant logs are being ingested with alerting and monitoring in place.

- Based on our findings, we recommend searching logs for requests with FOFA in the Referrer field and, if this is anomalous, checking for indications of other suspicious behavior around that time and, where possible, connections from the source IP address across all security monitoring tools.

- Consider blocking metadata enumeration tools such as FOFA.

- Consider blocking requests from user agents associated with scanning tools such as zgrab or Censys; Palo Alto offers a way to request being removed from their service, but this is less of a concern.

The key takeaway here is that any AI system being deployed by your organization must be treated with the same consideration as any other asset when it comes to cybersecurity. As we know, this is a highly fast-moving industry, so working together as a community is crucial to be aware of potential threats and mitigate risk.

Conclusions

These findings show that an external actor found our ClearML honeypot instance using FOFA and connected directly to the UI from the results returned. Interestingly, we did not see this behavior in our other MLOps honeypot systems and have not seen anything of this nature before or since, despite the systems being monitored for many months.;;

We did not see any other suspicious behavior or activity on the systems to indicate any attempt of lateral movement or further malicious intent. Still, it is possible that a malicious actor could do this, as well as manipulate the data on the server and collect potentially sensitive information.

This is something we will continue to monitor, and we hope you will, too.

Book a demo to see how our suite of products can help you stay ahead of threats just like this.;

Related Research

Exploring the Security Risks of AI Assistants like OpenClaw

OpenClaw (formerly Moltbot and ClawdBot) is a viral, open-source autonomous AI assistant designed to execute complex digital tasks, such as managing calendars, automating web browsing, and running system commands, directly from a user's local hardware. Released in late 2025 by developer Peter Steinberger, it rapidly gained over 100,000 GitHub stars, becoming one of the fastest-growing open-source projects in history. While it offers powerful "24/7 personal assistant" capabilities through integrations with platforms like WhatsApp and Telegram, it has faced significant scrutiny for security vulnerabilities, including exposed user dashboards and a susceptibility to prompt injection attacks that can lead to arbitrary code execution, credential theft and data exfiltration, account hijacking, persistent backdoors via local memory, and system sabotage.

Agentic ShadowLogic

Agentic ShadowLogic is a sophisticated graph-level backdoor that hijacks an AI model's tool-calling mechanism to perform silent man-in-the-middle attacks, allowing attackers to intercept, log, and manipulate sensitive API requests and data transfers while maintaining a perfectly normal conversational appearance for the user.

Stay Ahead of AI Security Risks

Get research-driven insights, emerging threat analysis, and practical guidance on securing AI systems—delivered to your inbox.