The AI Security Playbook

May 20, 2025

Summary

As AI rapidly transforms business operations across industries, it brings unprecedented security vulnerabilities that existing tools simply weren’t designed to address. This article reveals the hidden dangers lurking within AI systems, where attackers leverage runtime vulnerabilities to exploit model weaknesses, and introduces a comprehensive security framework that protects the entire AI lifecycle. Through the real-world journey of Maya, a data scientist, and Raj, a security lead, readers will discover how HiddenLayer’s platform seamlessly integrates robust security measures from development to deployment without disrupting innovation. In a landscape where keeping pace with adversarial AI techniques is nearly impossible for most organizations, this blueprint for end-to-end protection offers a crucial advantage before the inevitable headlines of major AI breaches begin to emerge.

Introduction

AI security has become a critical priority as organizations deploy AI systems across more business functions, but it is not obvious how it fits into the day-to-day work of a developer, data scientist, or security analyst.

Before we dive in, we first need to define what AI security means and why it matters.

AI vulnerabilities can be split into two categories: model vulnerabilities and runtime vulnerabilities. The easiest way to think about this is that attackers will use runtime vulnerabilities to exploit model vulnerabilities. In securing these, enterprises are looking for the following:

- Unified Security Perspective: Security becomes embedded throughout the entire AI lifecycle rather than applied as an afterthought.

- Early Detection: Identifying vulnerabilities before models reach production prevents potential exploitation and reduces remediation costs.

- Continuous Validation: Security checks occur throughout development, CI/CD, pre-production, and production phases.

- Integration with Existing Security: The platform works alongside current security tools, leveraging existing investments.

- Deployment Flexibility: HiddenLayer offers deployment options spanning on-premises, SaaS, and fully air-gapped environments to accommodate different organizational requirements.

- Compliance Alignment: The platform supports compliance with various regulatory requirements, such as GDPR, reducing organizational risk.

- Operational Efficiency: Having these capabilities in a single platform reduces tool sprawl and simplifies security operations.

Notice that these are no different from the security needs of any software application. AI isn’t special here. What makes AI special is how easy it is to exploit, and when we couple that with the fact that current security tools do not protect AI models, we begin to see the magnitude of the problem.

AI is the fastest-evolving technology the world has ever seen. Keeping up with the tech itself is already a monumental challenge. Keeping up with the newest techniques in adversarial AI is nearly impossible, and it’s only a matter of time before a nation state, hacker group, or even a motivated individual makes headlines by employing these cutting-edge techniques.

This is where HiddenLayer’s AISec Platform comes in. The platform protects against both model and runtime vulnerabilities and is backed by an adversarial AI research team that is 20+ experts strong and growing.

Let’s look at how this works.

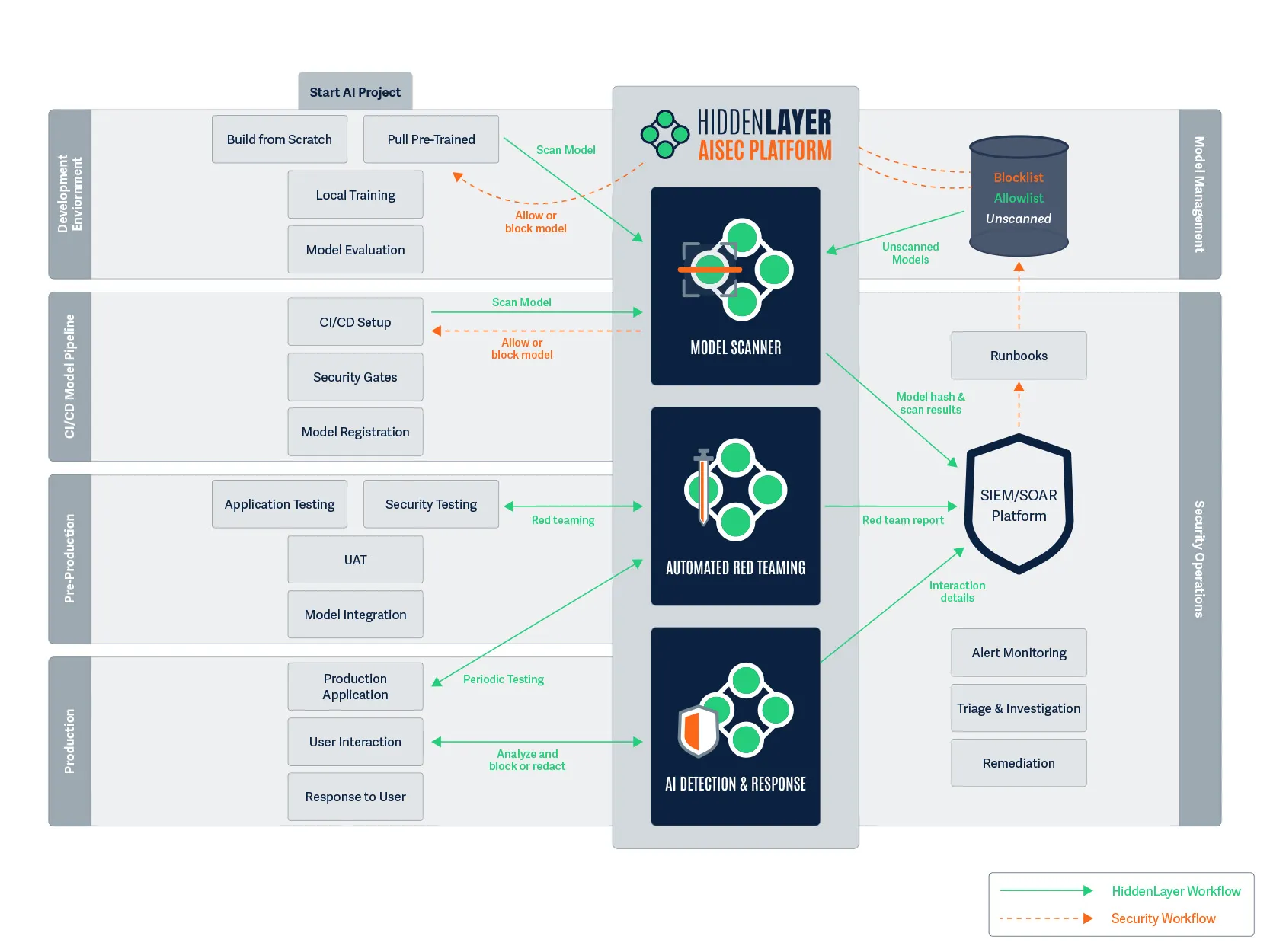

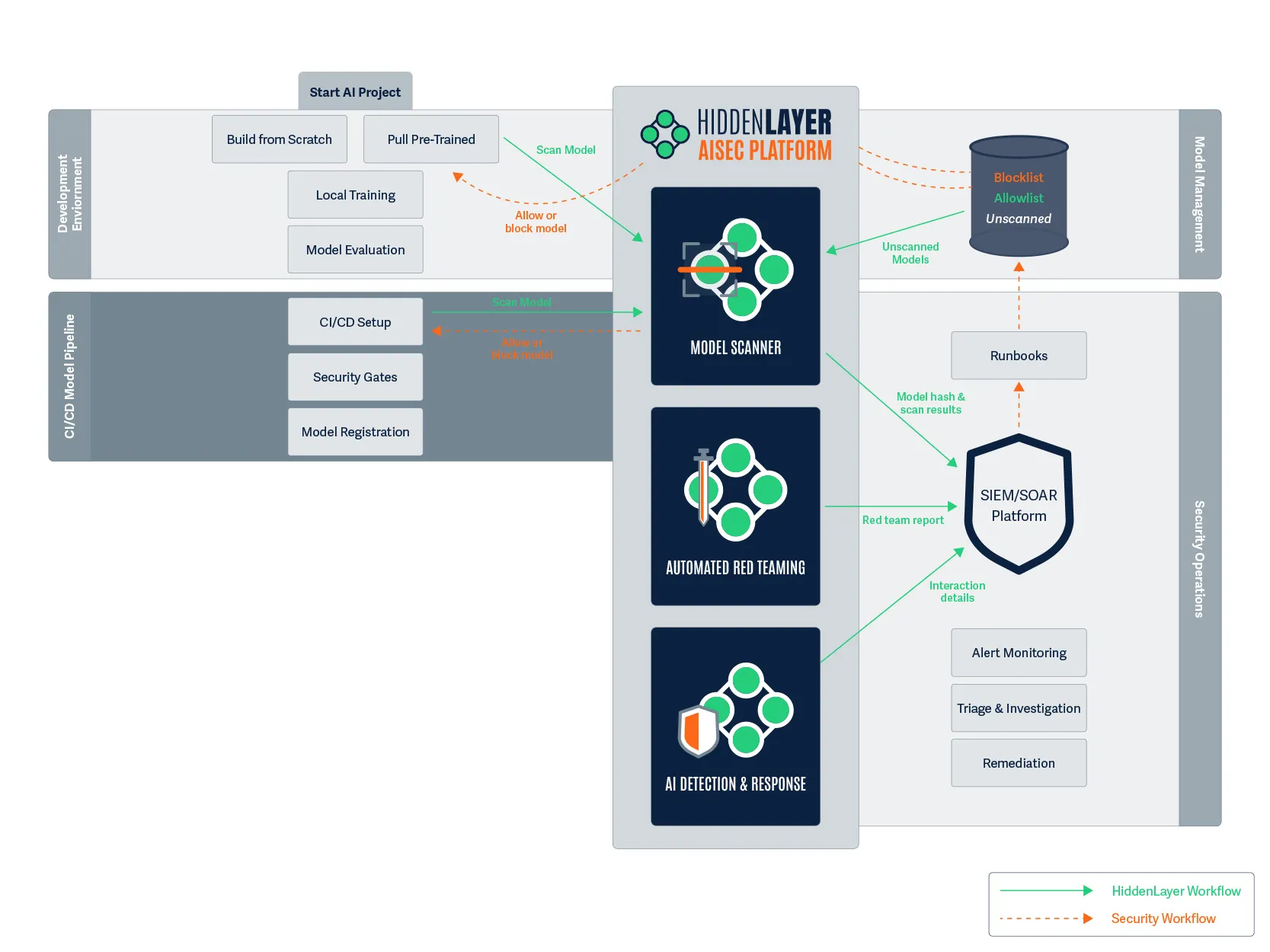

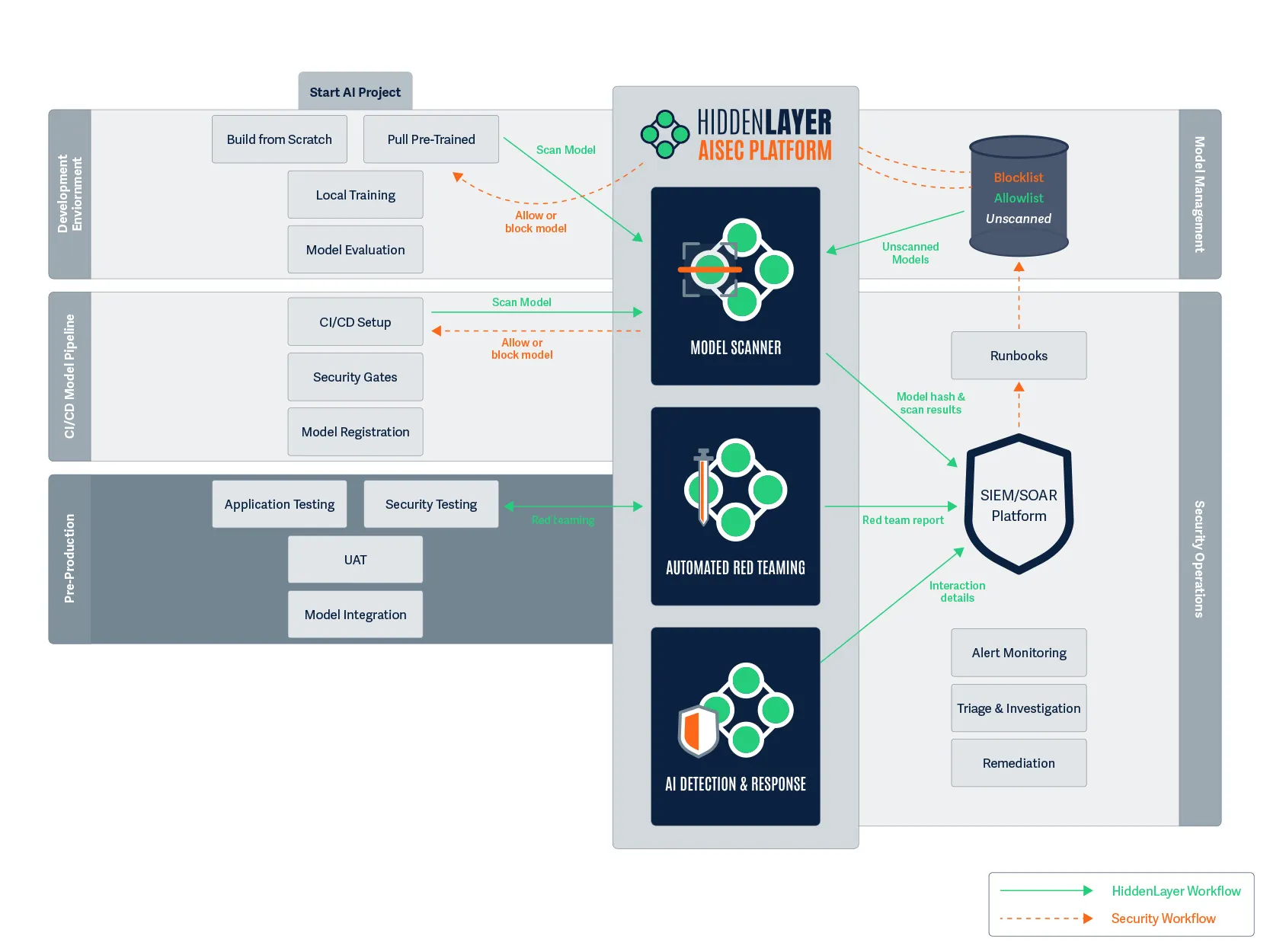

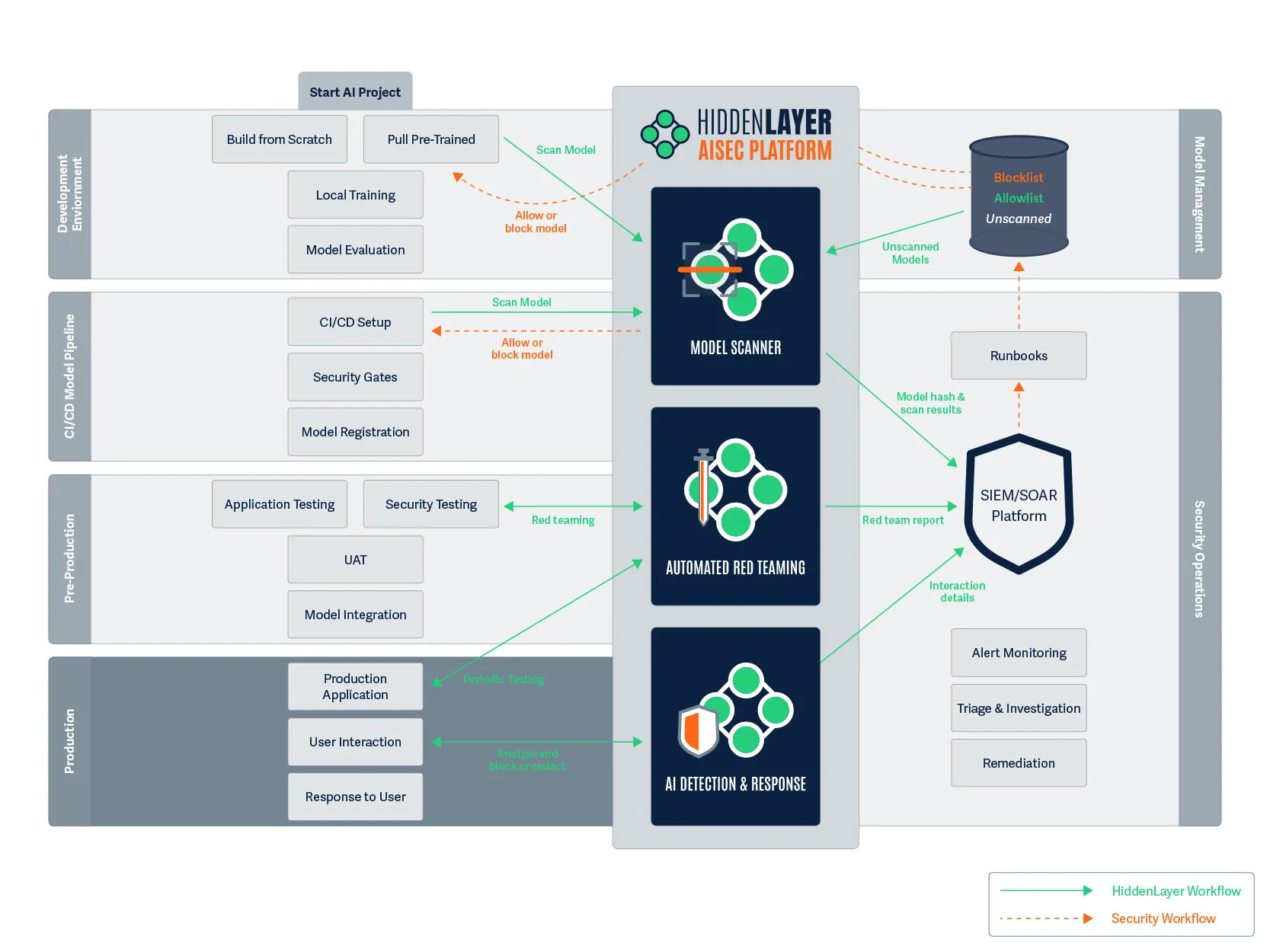

Figure 1. Protecting the AI project lifecycle.

The left side of the diagram above illustrates an AI project’s lifecycle. The right side represents governance and security. And in the middle sits HiddenLayer’s AI security platform.

It’s important to acknowledge that this diagram is designed to illustrate the general approach rather than be prescriptive about exact implementations. Actual implementations will vary based on organizational structure, existing tools, and specific requirements.

A Day in the Life: Secure AI Development

To better understand how this security approach works in practice, let’s follow Maya, a data scientist at a financial institution, as she develops a new AI model for fraud detection. Her work touches sensitive financial data and must meet strict security and compliance requirements. The security team, led by Raj, needs visibility into the AI systems without impeding Maya’s development workflow.

Establishing the Foundation

Before we follow Maya’s journey, we must lay the foundational pieces: Model Management and Security Operations.

Model Management

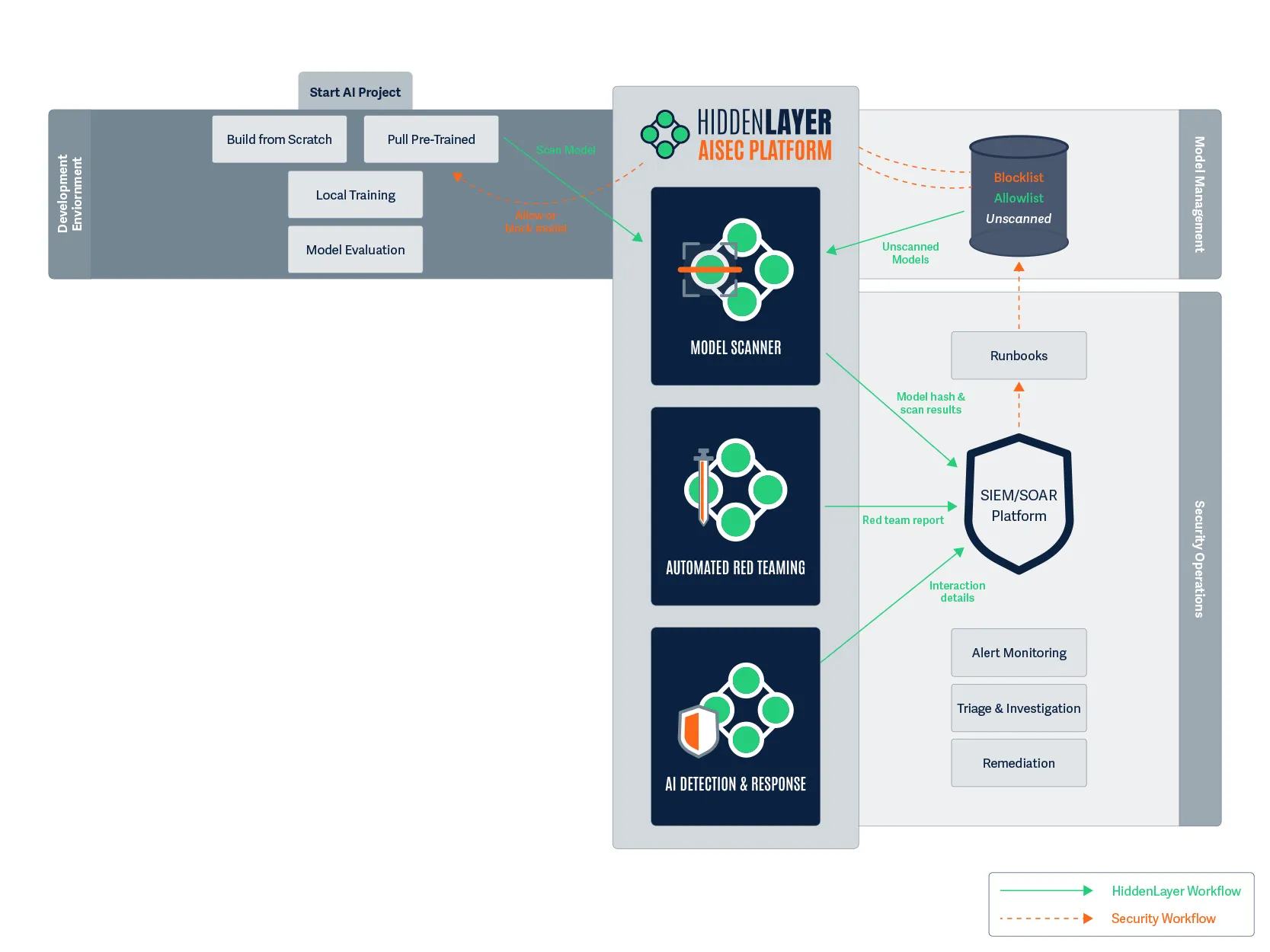

Figure 2. We start the foundation with model management.

This section represents the system where organizations store, version, and manage their AI models, whether that’s Databricks, AWS SageMaker, Azure ML, or any other model registry. These systems serve as the central repository for all models within the organization, providing essential capabilities such as:

- Versioning and lineage tracking for models

- Metadata storage and search capabilities

- Model deployment and serving mechanisms

- Access controls and permissions management

- Model lifecycle status tracking

Model management systems act as the source of truth for AI assets, allowing teams to collaborate effectively while maintaining governance over model usage throughout the organization.

Security Operations

Figure 3. We then add the security operations to the foundation.

The next component represents the security tools and processes that monitor, detect, and respond to threats across the organization. This includes SIEM/SOAR platforms, security orchestration systems, and the runbooks that define response procedures when security issues are detected.

The security operations center serves as the central nervous system for security across the organization, collecting alerts, prioritizing responses, and coordinating remediation activities.

Building Out the AI Application

With our supporting infrastructure in place, let’s build out the main sections of the diagram that represent the AI application lifecycle. We’ll follow Maya’s workday as she builds a new fraud detection model at her financial institution.

Development Environment

Figure 4. The AI project lifecycle starts in the development environment.

7:30 AM: Maya begins her day by searching for a pre-trained transformer model for natural language processing on customer-agent communications. She finds a promising model on Hugging Face that appears to fit her requirements.

Before downloading the model, she kicks off a workflow that sends the Hugging Face repo to HiddenLayer’s Model Scanner. Maya receives a notification that the model is being scanned for security vulnerabilities. Within minutes, she gets the green light: the model has passed initial security checks and has been added to her organization’s allowlist. She then downloads the model.

In a parallel workflow, Raj, the leader of the security team, receives an automatic log of the model scan, including its SHA-256 hash identifier. The model’s status is added to the security dashboard without Raj having to interrupt Maya’s workflow.

The scanner has performed an immediate security evaluation for vulnerabilities, backdoors, and evidence of tampering. Had there been any issues, HiddenLayer’s model scanner would deliver an “Unsafe” verdict to the security platform, where a runbook adds it to the blocklist in the model registry and alerts Maya to find a different base model. The model’s unique hash is now documented in their security systems, enabling broader security monitoring throughout its lifecycle.
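To make the hash-and-verdict bookkeeping concrete, here is a minimal Python sketch of how a model file’s SHA-256 identifier could be computed and tied to an allowlist or blocklist. The `ModelRegistryPolicy` class and the verdict labels are hypothetical and exist only for illustration. They are not HiddenLayer’s API or any model registry’s API.

```python
import hashlib
from pathlib import Path
from typing import Set


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


class ModelRegistryPolicy:
    """Hypothetical in-memory allowlist/blocklist keyed by model hash."""

    def __init__(self) -> None:
        self.allowlist: Set[str] = set()
        self.blocklist: Set[str] = set()

    def record_verdict(self, model_hash: str, verdict: str) -> None:
        # "Safe" and "Unsafe" are assumed verdict labels for this sketch.
        if verdict.lower() == "safe":
            self.allowlist.add(model_hash)
            self.blocklist.discard(model_hash)
        else:
            # Anything other than an explicit "safe" verdict is blocked.
            self.blocklist.add(model_hash)
            self.allowlist.discard(model_hash)

    def may_download(self, model_hash: str) -> bool:
        # Hashes with no recorded verdict are denied by default.
        return model_hash in self.allowlist
```

In this sketch an unknown hash is denied until a verdict is recorded, which mirrors Maya waiting for the green light before downloading.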

CI/CD Model Pipeline

Figure 5. Once development is complete, we move to CI/CD.

2:00 PM: After spending several hours fine-tuning the model on financial communications, Maya is ready to commit her code and the modified model to the CI/CD pipeline.

As her commit triggers the build process, another security scan automatically initiates. This second scan is crucial as a final check to ensure that no supply chain attacks were introduced during the build process.

Meanwhile, Raj receives an alert showing that the model has evolved but remains secure. The security gates throughout the CI/CD process are enforcing the organization’s security policies, and the continuous verification approach ensures that security remains intact throughout the development process.
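A security gate of this kind could be sketched roughly as follows. Fine-tuning changes the model’s bytes and therefore its hash, so the earlier verdict does not carry over and the artifact is scanned again. The `ci_security_gate` function, the `scan` callable, and the verdict labels are all assumptions made for illustration, not a real pipeline or scanner interface.

```python
from typing import Callable, Dict


def ci_security_gate(
    artifact_hash: str,
    known_verdicts: Dict[str, str],
    scan: Callable[[str], str],
) -> int:
    """Return a process exit code: 0 lets the build continue, 1 fails it.

    `scan` is a hypothetical callable that takes a model hash and returns
    a verdict string such as "Safe" or "Unsafe".
    """
    verdict = known_verdicts.get(artifact_hash)
    if verdict is None:
        # New or modified artifact: no verdict exists for this hash yet,
        # so scan it and remember the result.
        verdict = scan(artifact_hash)
        known_verdicts[artifact_hash] = verdict
    return 0 if verdict.lower() == "safe" else 1
```

A build step could pass the returned value to `sys.exit`, so that any verdict other than "Safe" stops the pipeline.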

Pre-Production

Figure 6. With CI/CD complete and the model ready, we continue to pre-production.

9:00 AM (Next Day): Maya arrives to find that her model has successfully made it through the CI/CD pipeline overnight. Now it’s time for thorough testing before it reaches production.

While Maya conducts application testing to ensure the model performs as expected on customer-agent communications, HiddenLayer’s Auto Red Team tool runs in parallel, systematically testing the model with potentially malicious prompts across configurable attack categories.

The Auto Red Team generates a detailed report showing:

- Pass/fail results for each attack attempt

- Criticality levels of identified vulnerabilities

- Complete details of the prompts used and the responses received

Maya notices that the model failed one category of security tests: it was responding to certain prompts with potentially sensitive financial information. She adjusts the model’s training and resubmits the model to HiddenLayer’s Model Scanner, which again reports that the model is secure. After passing both security testing and user acceptance testing (UAT), the model is approved for integration into the production fraud detection application.
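As a rough illustration of how a report with those fields could be triaged, the sketch below models one attack attempt as a record and groups the failures by attack category. The `AttackResult` type, its field names, and the criticality labels are hypothetical. The actual report format is not described in this article.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AttackResult:
    category: str     # attack category, e.g. "data_leakage" (assumed name)
    prompt: str       # prompt sent to the model
    response: str     # response the model returned
    passed: bool      # True if the model resisted the attack
    criticality: str  # assumed labels such as "low", "medium", "high"


def failed_categories(results: List[AttackResult]) -> Dict[str, List[AttackResult]]:
    """Group failed attack attempts by category for triage."""
    failures: Dict[str, List[AttackResult]] = defaultdict(list)
    for result in results:
        if not result.passed:
            failures[result.category].append(result)
    return dict(failures)
```

Grouping by category makes it easy to see, as Maya does, that a single category accounts for the failures while the others pass.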

Production

Figure 7. All tests are passed, and we have the green light to enter production.

One Week Later: Maya's model is now live in production, analyzing thousands of customer-agent communications per hour to detect social engineering and fraud attempts.

Two security components are now actively protecting the model:

- Periodic Red Team Testing: Every week, automated testing runs to identify any new vulnerabilities as attack techniques evolve and to confirm the model is still performing as expected.

- AI Detection & Response (AIDR): Real-time monitoring analyzes all interactions with the fraud detection application, examining both inputs and outputs for security issues.

Raj's team has configured AIDR to block malicious inputs and redact sensitive information like account numbers and personal details. The platform is set to use context-preserving redaction, indicating the type of data that was redacted while preserving the overall meaning, critical for their fraud analysis needs.
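The idea behind context-preserving redaction can be sketched in a few lines of Python: each sensitive value is replaced with a label naming its type, so the sentence still makes sense to a downstream analyst. The patterns and labels below are simplified assumptions for illustration (for example, treating any 8 to 12 digit number as an account number) and do not reflect how AIDR detects sensitive data.

```python
import re
from typing import List, Pattern, Tuple

# Each rule pairs a type label with a pattern. Both are illustrative only.
REDACTION_RULES: List[Tuple[str, Pattern[str]]] = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("ACCOUNT_NUMBER", re.compile(r"\b\d{8,12}\b")),
]


def redact(text: str) -> str:
    """Replace each sensitive value with a placeholder naming its type."""
    for label, pattern in REDACTION_RULES:
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Send 500 from account 123456789 to jane@example.com"))
# prints: Send 500 from account [ACCOUNT_NUMBER] to [EMAIL]
```

Because the placeholder keeps the data type, a fraud analyst can still tell that a transfer referenced an account and an email address without seeing either value.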

Raj’s team receives an alert about a potential attack. One of the interactions contained a PDF with a prompt injection hidden in white font, instructing the model to ignore certain parts of the transaction. The input was blocked and the interaction flagged, so Raj’s team can investigate without disrupting the fraud detection service.
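One simple heuristic for this kind of hidden text is to flag spans whose font color is nearly identical to the page background and whose content reads like an instruction. The sketch below assumes the PDF has already been parsed into text spans with RGB colors. The `TextSpan` type, the phrase list, and the tolerance are hypothetical, and this is not a description of how AIDR detects prompt injection.

```python
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[int, int, int]

# Illustrative phrases only. A real detector would not rely on a fixed list.
SUSPICIOUS_PHRASES = ("ignore", "disregard", "do not flag")


@dataclass(frozen=True)
class TextSpan:
    text: str
    color: RGB  # font color of the span, as extracted by some PDF parser


def is_near(color: RGB, background: RGB, tolerance: int = 16) -> bool:
    """True if every channel is within `tolerance` of the background."""
    return all(abs(c - b) <= tolerance for c, b in zip(color, background))


def hidden_instruction_spans(
    spans: List[TextSpan], background: RGB = (255, 255, 255)
) -> List[TextSpan]:
    """Return spans that are visually hidden and look like instructions."""
    flagged = []
    for span in spans:
        lowered = span.text.lower()
        hidden = is_near(span.color, background)
        if hidden and any(phrase in lowered for phrase in SUSPICIOUS_PHRASES):
            flagged.append(span)
    return flagged
```

A check like this would catch only the crudest cases, which is one reason the scenario relies on monitoring of both inputs and outputs rather than a single filter.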

Conclusion

The approach illustrated above integrates security throughout the entire AI lifecycle, from initial model selection to production deployment and ongoing monitoring. This end-to-end methodology enables organizations to identify and mitigate vulnerabilities at each stage of development while maintaining operational efficiency.

For technical teams, these security processes operate seamlessly in the background, providing robust protection without impeding development workflows.

For security teams, the platform delivers visibility and control through familiar concepts and integration with existing infrastructure.

The integration of security at every stage addresses the unique challenges posed by AI systems:

- Protection against both model and runtime vulnerabilities

- Continuous validation as models evolve and new attack techniques emerge

- Real-time detection and response to potential threats

- Compliance with regulatory requirements and organizational policies

As AI becomes increasingly central to critical business processes, implementing a comprehensive security approach is essential rather than optional. By securing the entire AI lifecycle with purpose-built tools and methodologies, organizations can confidently deploy these technologies while maintaining appropriate safeguards, reducing risk, and enabling responsible innovation.

Interested in learning how this solution can work for your organization? Contact the HiddenLayer team.
