The Most Comprehensive AI Security Platform

Discover every model. Secure every workflow. Prevent AI attacks without slowing innovation.

Securing Real-World AI Risks in One Platform

Our platform proactively defends against the full spectrum of AI threats, safeguarding your IP, compliance posture, and enterprise operations.

AI Discovery

Gain visibility into AI assets across environments to eliminate shadow AI.

AI Supply Chain Security

Secure AI models before deployment by validating their integrity and supply chain.

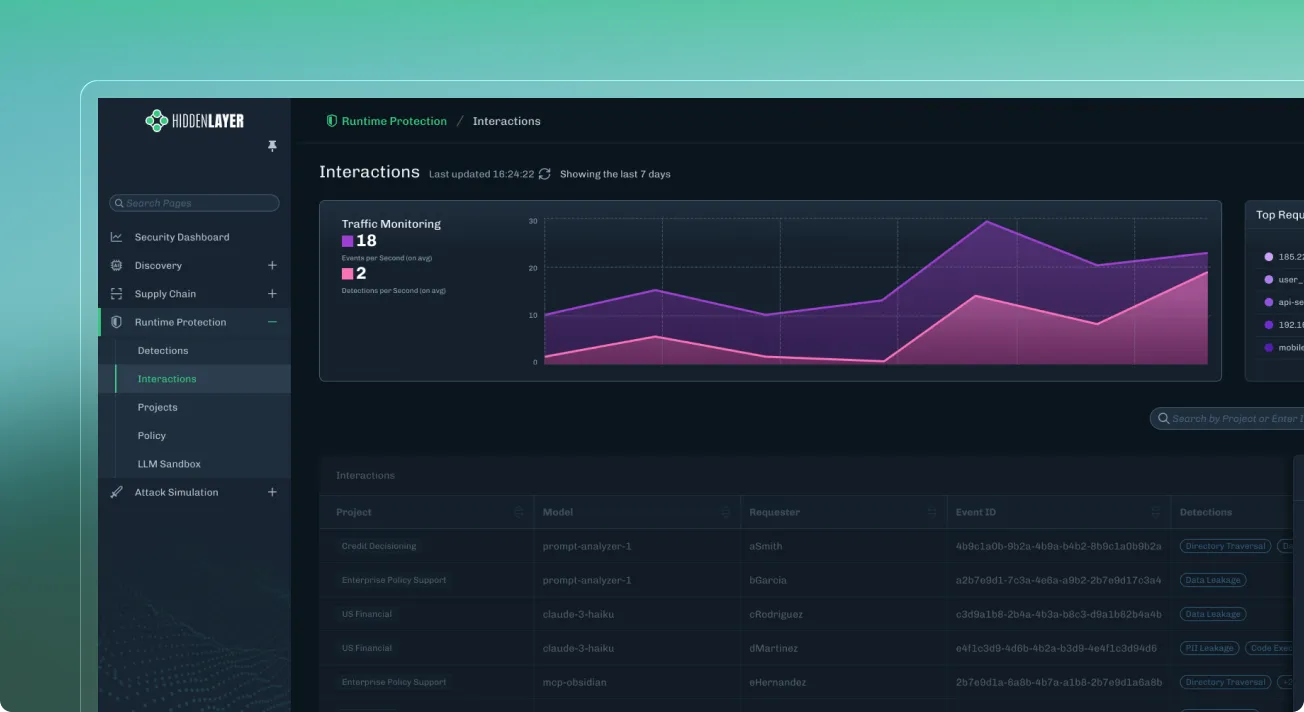

AI Runtime Security

Detect and respond to AI attacks without impacting performance in production.

AI Attack Simulation

Continuously simulate real-world AI attacks to uncover weaknesses early.

Purpose-Built for AI Security

Designed specifically for the unique threats facing models, pipelines, and agentic systems, not retrofitted from traditional cybersecurity.

Model-Agnostic, Agentless, Zero Training Data Required

Deploy in minutes across any architecture without exposing intellectual property, weights, prompts, or customer data.

Trusted by the Largest Enterprises & Security Leaders

Deployed in the world’s largest enterprises to secure AI at scale, without slowing innovation.

Secure Every Stage of the AI Lifecycle

Visibility, hardening, testing, and defense, all integrated in one unified platform.

Faster and Safer AI Deployment

Accelerate AI rollout with security built directly into the lifecycle.

The platform streamlines approvals and reduces bottlenecks by validating models, enforcing policies, and monitoring behavior end to end. Teams ship AI features faster with confidence that safety, quality, and compliance are fully covered.


Protection Against Misuse and IP Theft

Keep your proprietary models, data, and outputs secure.

The platform prevents prompt attacks, model extraction, unauthorized tool usage, and data leakage. Protect your IP, fine-tuned models, and sensitive datasets from theft or unintended exposure across all environments.


Governance and AI Security Posture

Maintain predictable, compliant, and policy-aligned AI across the enterprise.

Gain organization-wide visibility into every model, apply consistent governance rules, classify risk, and monitor posture. Ensure every AI system meets security, regulatory, and operational standards at scale.


Integrates with Your Existing Security & MLOps Stack

Native connectors for cloud, CI/CD, data platforms, SIEM/SOAR, API gateways, and MLOps tools.

Additional Platform Features

See Every Model and Every AI Workflow

Automatically build a living inventory of AI across your environment, including shadow AI.

Scan and Harden Any Model

Scan models to validate their integrity and supply chain before deployment.

Attacks and Unsafe Behavior

Detect and respond to AI attacks and unsafe behavior in production without impacting performance.


AI Security Use Cases

Proven Impact at Enterprise Scale

Measurable security and operational improvements achieved by enterprises protecting AI models, pipelines, and production systems.

report that shadow AI (unapproved or untracked AI deployments) is a definite or probable problem

AI breaches are already linked to agentic systems

say attacks on AI systems have increased or remained the same compared to the previous year

Innovation Hub

Research, guidance, and frameworks from the team shaping AI security standards.


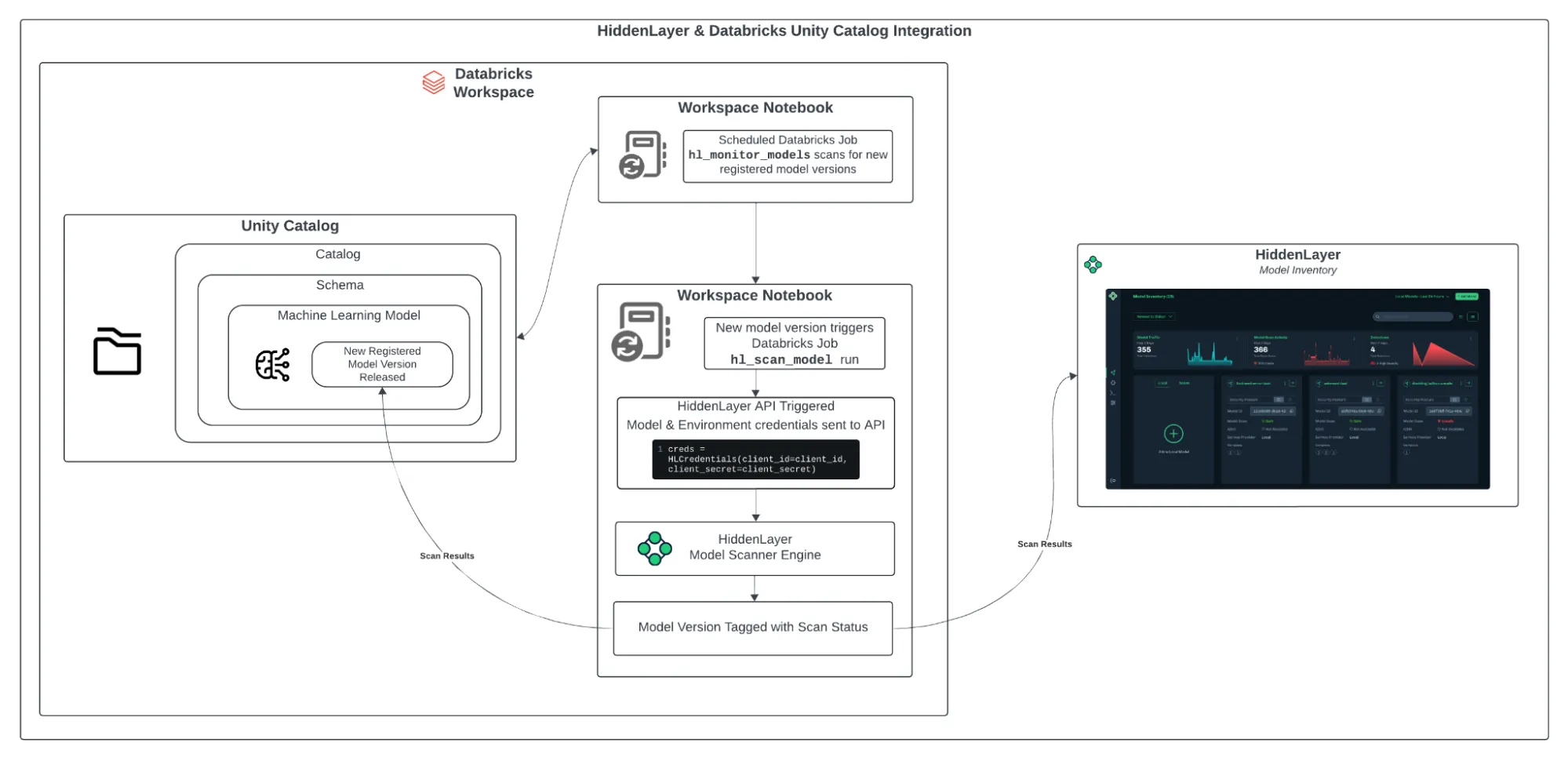

Integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog

As machine learning becomes more embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to monitor models for vulnerabilities as soon as they are registered. This approach ensures continuous protection without slowing down your teams.

Ready to secure your AI?

Request a demo, and let’s discuss how to protect your unique AI advantage.
