Today, many Cloud Service Providers (CSPs) offer bespoke services designed for Artificial Intelligence solutions. These services enable you to rapidly deploy an AI asset at scale in an environment purpose-built for developing, deploying, and scaling AI systems. Some of the most popular examples include Hugging Face Spaces, Google Colab & Vertex AI, AWS SageMaker, Microsoft Azure with Databricks Model Serving, and IBM Watson. What are the advantages compared to traditional hosting? Access to vast amounts of computing power (both CPU and GPU), ready-to-go Jupyter notebooks, and scaling capabilities to suit both your needs and the demands of your model.

These AI-centric services are widely used in academic and professional settings, providing considerable capability to the end user, often for free – to begin with. However, high-value services can become high-value targets for adversaries, especially when they’re accessible at competitive price points. To mitigate these risks, organizations should adopt a comprehensive AI security framework to safeguard against emerging threats.

Given the ease of access, incredible processing power, and pervasive use of CSPs throughout the community, we set out to understand how these systems are being used in an unintended and often undesirable manner.

Hijacking Cloud Services

It’s easy to think of the cloud as an abstract, faraway concept, yet understanding the scope and scale of your cloud environments is just as important as (if not more important than!) protecting the endpoint you’re reading this from. These environments are subject to the same vulnerabilities, attacks, and malware that may affect your local system. A highly interconnected platform enables developers to prototype and build at scale. Yet, it’s this same interconnectivity that, if misconfigured, can expose you to massive data loss or compromise – especially in the age of AI development.

Google Colab Hijacking

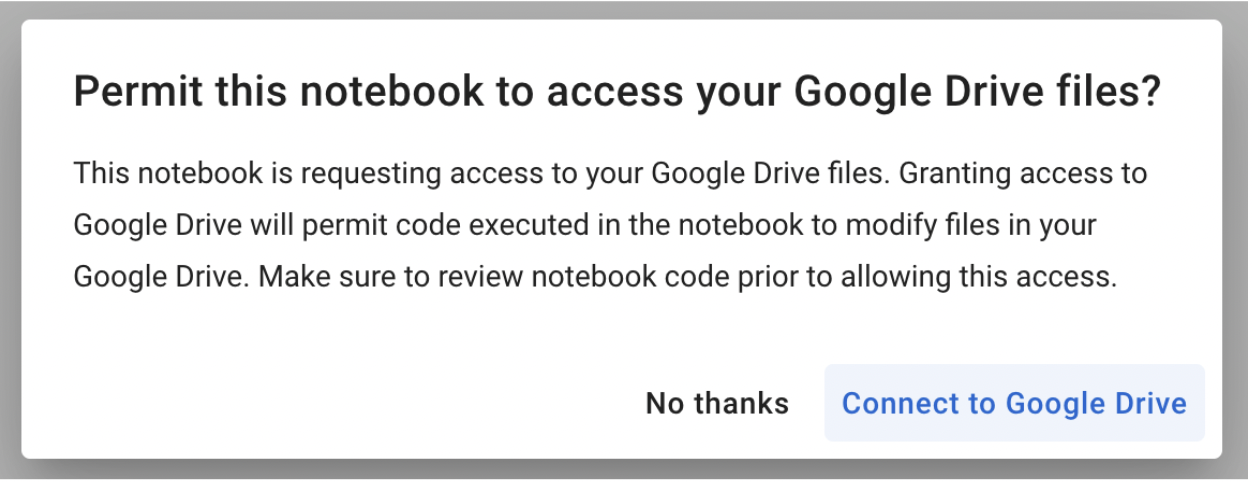

In 2022, red teamer 4n7m4n detailed how malicious Colab notebooks could modify or exfiltrate data from your Google Drive if the user approves a permission pop-up. Additionally, malicious notebooks could cause you to accidentally deploy a reverse shell or something more nefarious – allowing persistent access to your Colab instance. If you’re running Colab notebooks from third parties, inspect the code thoroughly to ensure it isn’t attempting to access your Drive or hijack your instance.
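As a starting point for that inspection, the sketch below flags code cells in a notebook file that match a few suspicious patterns. It assumes the standard `.ipynb` JSON layout (a top-level `cells` list with `cell_type` and `source` fields); the pattern list and the `scan_notebook` helper are illustrative assumptions, not a complete detector, and a match only marks a cell for manual review.

```python
import json
import re

# Illustrative patterns only (assumed for this sketch); a clean result
# does not prove that a notebook is safe.
SUSPICIOUS_PATTERNS = {
    "mounts Google Drive": re.compile(r"drive\.mount\s*\("),
    "opens a raw socket": re.compile(r"\bsocket\.socket\s*\("),
    "spawns a process or shell": re.compile(r"\b(subprocess|os\.system|pty\.spawn)\b"),
    "runs a shell or magic command": re.compile(r"^\s*[!%]", re.MULTILINE),
    "executes dynamic code": re.compile(r"\b(exec|eval)\s*\("),
}


def scan_notebook(notebook_json: str) -> list[tuple[int, str]]:
    """Return (cell_index, finding) pairs for code cells that match a pattern."""
    notebook = json.loads(notebook_json)
    findings = []
    for index, cell in enumerate(notebook.get("cells", [])):
        if cell.get("cell_type") != "code":
            continue
        source = cell.get("source", "")
        # The notebook format stores source as a string or a list of lines.
        if isinstance(source, list):
            source = "".join(source)
        for label, pattern in SUSPICIOUS_PATTERNS.items():
            if pattern.search(source):
                findings.append((index, label))
    return findings
```

Calling `scan_notebook(open("untrusted.ipynb", encoding="utf-8").read())` (the file name is hypothetical) returns the cells worth reading first; it does not replace reading the rest.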

Stealing AWS S3 Bucket Data

Amazon SageMaker provides a similar Jupyter-based environment for AI development. It can also be hijacked in a similar fashion, where a malicious notebook – or even a hijacked pre-trained model – is loaded and executed. In one of our past blogs, Insane in the Supply Chain: Threat modeling for supply chain attacks on ML systems, we demonstrate how a malicious model can enumerate, then exfiltrate all data from a connected S3 bucket, which acts as persistent cold storage for all manner of data (e.g., training data).

Cryptominers

If you’ve tried to buy a graphics card in the last few years, you’ve undoubtedly noticed that their prices have become increasingly eye-watering – and that’s if you can find one. Before the recent AI boom, which itself drove GPU scarcity, many would buy up GPUs en masse for use in proof-of-work blockchain mining, at a high electricity cost to boot. Energy cannot be created or destroyed – but as we’ve discovered, it can be turned into cryptocurrency.

With both mining and AI requiring access to large amounts of GPU processing power, there’s a certain degree of transferability to their base hardware environments. To this end, we’ve seen a number of individuals attempt to exploit AI hosting providers to launch their miners.

Separately, malicious packages on PyPI and npm that typosquat or otherwise masquerade as legitimate packages have been seen deploying cryptominers within the victim environment. In a more recent spate of attacks, PyPI had to temporarily suspend the registration of new users and projects to curb the high volume of malicious activity on the platform.
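A simple guard against typosquatting is to compare a requested package name with the names you actually intend to depend on and flag near-misses. The sketch below uses `difflib.SequenceMatcher` from the standard library. The `KNOWN_PACKAGES` list and the `0.85` threshold are assumptions chosen for illustration and would need tuning against a real dependency policy.

```python
import re
from difflib import SequenceMatcher

# Hypothetical allow-list; in practice this comes from your own dependency policy.
KNOWN_PACKAGES = ["requests", "numpy", "pandas", "tensorflow", "torch"]


def normalise(name: str) -> str:
    """Lower-case the name and unify '-', '_' and '.' separators."""
    return re.sub(r"[-_.]+", "-", name).lower()


def possible_typosquats(name: str, threshold: float = 0.85) -> list[str]:
    """Return known packages that `name` closely resembles without matching exactly."""
    candidate = normalise(name)
    hits = []
    for known in KNOWN_PACKAGES:
        if candidate == known:
            return []  # exact match with a known package: nothing to flag
        if SequenceMatcher(None, candidate, known).ratio() >= threshold:
            hits.append(known)
    return hits
```

A non-empty result for a name found in a requirements file or notebook is a prompt to check the spelling and the publisher before installing.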

While end-users should be concerned about rogue crypto mining in their environments due to exceptionally high energy bills (especially in cases of account takeover), CSPs should also be worried due to the reduced service availability, which can hamper legitimate use across their platform.

Password Cracking

Typically, password cracking involves the use of a tool like Hydra or John the Ripper to brute-force a password or crack its hashed value. This process is computationally expensive, as the number of candidate passwords grows exponentially with length and increases further with a larger character set. Of course, building your own password-cracking rig can be an expensive pursuit in its own right, especially if you only have intermittent use for it. GitHub user Mxrch created Penglab to address this, which uses Google Colab to launch a high-powered password-cracking instance with preinstalled password crackers and wordlists. Colab, which is widely used within the ML space, offers fast and (initially) free access to GPUs for writing and running Python code in the browser.
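The exponential growth is easy to see with some arithmetic: an exhaustive search over passwords of length n drawn from c possible characters has c^n candidates. The sketch below works this out for a few cases. The guess rate `ASSUMED_RATE` is a made-up figure for illustration, not a benchmark of any tool or GPU.

```python
def keyspace(charset_size: int, length: int) -> int:
    """Number of candidate passwords of exactly `length` characters."""
    return charset_size ** length


def worst_case_days(charset_size: int, length: int, guesses_per_second: float) -> float:
    """Days needed to try every candidate at a fixed guess rate."""
    return keyspace(charset_size, length) / guesses_per_second / 86_400


if __name__ == "__main__":
    ASSUMED_RATE = 1e10  # hypothetical guesses per second, not a measurement
    for length in (8, 10, 12):
        for label, size in (("lowercase", 26), ("printable ASCII", 95)):
            days = worst_case_days(size, length, ASSUMED_RATE)
            print(f"{label:>16} x {length:>2}: {keyspace(size, length):.3e} candidates, {days:.3e} days")
```

Each extra character multiplies the search space by the size of the character set, which is why rented GPU time is attractive for this workload.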

Hosting Malware

Cloud services can also be used to host and run other types of malware. This can result not only in the degradation of service but also in legal troubles for the service provider.

Crossing the Rubika

Over the last few months, we have observed an interesting case illustrating the unintended usage of Hugging Face Spaces. A handful of Hugging Face users have abused Spaces to run crude bots for an Iranian messaging app called Rubika. Rubika, typically deployed as an Android application, was previously available on the Google Play app store until 2022, when it was removed – presumably to comply with US export restrictions and sanctions. The app is sponsored by the government of Iran and has recently been facing multiple accusations of bias and privacy breaches.

We came across over a hundred different Hugging Face Spaces hosting various Rubika bots with functionalities ranging from seemingly benign to potentially unwanted or even malicious, depending on how they are being used. Several of the bots contained functionality such as:

- administering users in a group or channel,

- collecting information about users, groups, and channels,

- downloading/uploading files,

- censoring posted content,

- searching messages in groups and channels for specific words,

- forwarding messages from groups and channels,

- sending out mass messages to users within the Rubika social network.

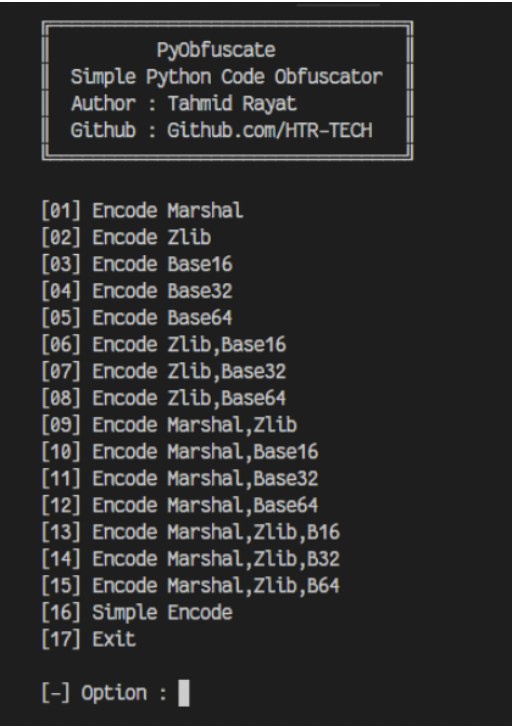

Although we don’t have enough information about their intended purpose, these bots could be utilized to spread spam, phishing, disinformation, or propaganda. What makes them more suspicious is that most of them are heavily obfuscated. The tool used for obfuscation, called PyObfuscate, allows developers to encode Python scripts in several ways, combining Python’s pseudo-compilation, Zlib compression, and Base64 encoding. It’s worth mentioning that the author of this obfuscator also developed a couple of automated phishing applications.

Figure 1 – PyObfuscate obfuscation selection

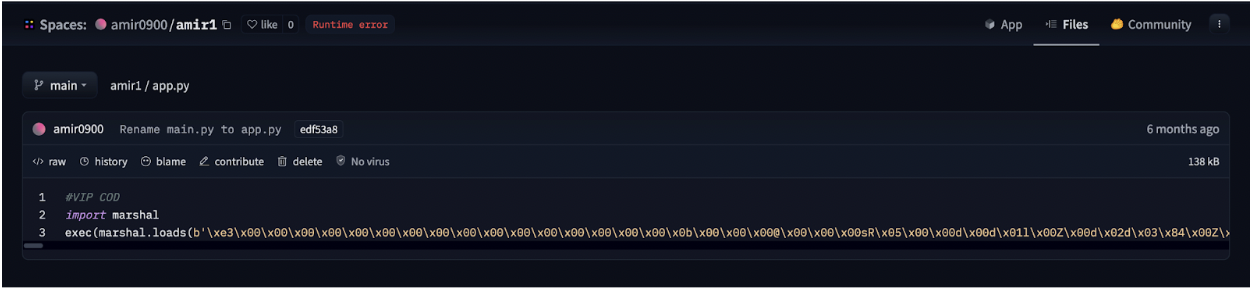

Each obfuscated script is compiled and serialized into binary form using Python’s marshal module, then executed on load using an ‘exec’ call. The marshal library allows the user to transform Python code into a pseudo-compiled format, in a similar way to the pickle module. However, marshal writes bytecode for a particular Python version, whereas pickle is a more general serialization format.

Figure 2 – Marshalled bytecode in app.py

The obfuscated scripts differ in the number and combination of Base64 and Zlib layers, but most of them have similar functionality, such as searching through channels and mass sending of messages.
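For analysis, layers of this kind can be peeled off without ever calling `exec`. The sketch below is a generic illustration, not a reconstruction of PyObfuscate's exact output. `wrap` builds a harmless sample (compile, marshal, Zlib, Base64), and `peel` strips Zlib and Base64 layers until neither applies, then loads the remaining bytes with `marshal` so the code object's names can be inspected. Both function names are hypothetical. It must run on the same Python version that produced the bytecode, and because `marshal` is not designed to be safe against malicious input, real samples belong in an isolated analysis environment.

```python
import base64
import marshal
import zlib


def wrap(source: str) -> bytes:
    """Build a harmless sample: compile -> marshal -> Zlib -> Base64."""
    code = compile(source, "<sample>", "exec")
    return base64.b64encode(zlib.compress(marshal.dumps(code)))


def peel(blob: bytes, max_layers: int = 20):
    """Strip Zlib/Base64 layers, then load the marshalled code object (never exec it)."""
    data = blob
    for _ in range(max_layers):
        try:
            data = zlib.decompress(data)
            continue
        except zlib.error:
            pass
        try:
            # validate=True rejects non-Base64 bytes; binascii.Error is a ValueError.
            data = base64.b64decode(data, validate=True)
            continue
        except ValueError:
            break  # neither Zlib nor Base64: assume the marshalled payload is reached
    code = marshal.loads(data)
    if not hasattr(code, "co_names"):
        raise ValueError("payload is not a marshalled code object")
    return code


if __name__ == "__main__":
    sample = wrap("import os\nprint(os.getcwd())")
    recovered = peel(base64.b64encode(zlib.compress(sample)))  # two rounds of layers
    print(recovered.co_names)  # names the script refers to, without running it
```

From here, `dis.dis(recovered)` from the standard library gives a readable disassembly of the recovered code object.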

“Mr. Null”

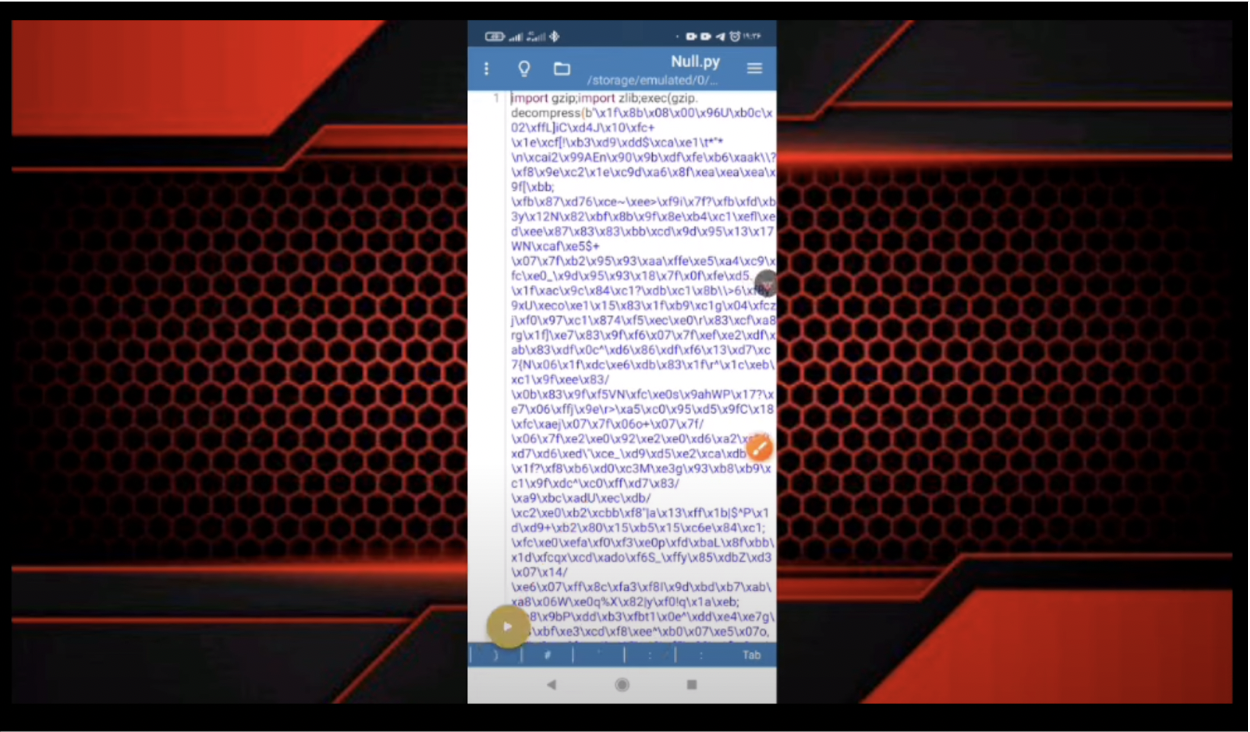

Many of the bots contain references to an ethereal character, “Mr. Null”, by way of their Telegram username @mr_null_chanel. When we looked for additional context around this username, we found what appears to be his YouTube account, with guides on making Rubika bots, including a video showing obfuscation similar to the payload we’d seen earlier.

Figure 3 – Still from an instructional YouTube video

IRATA

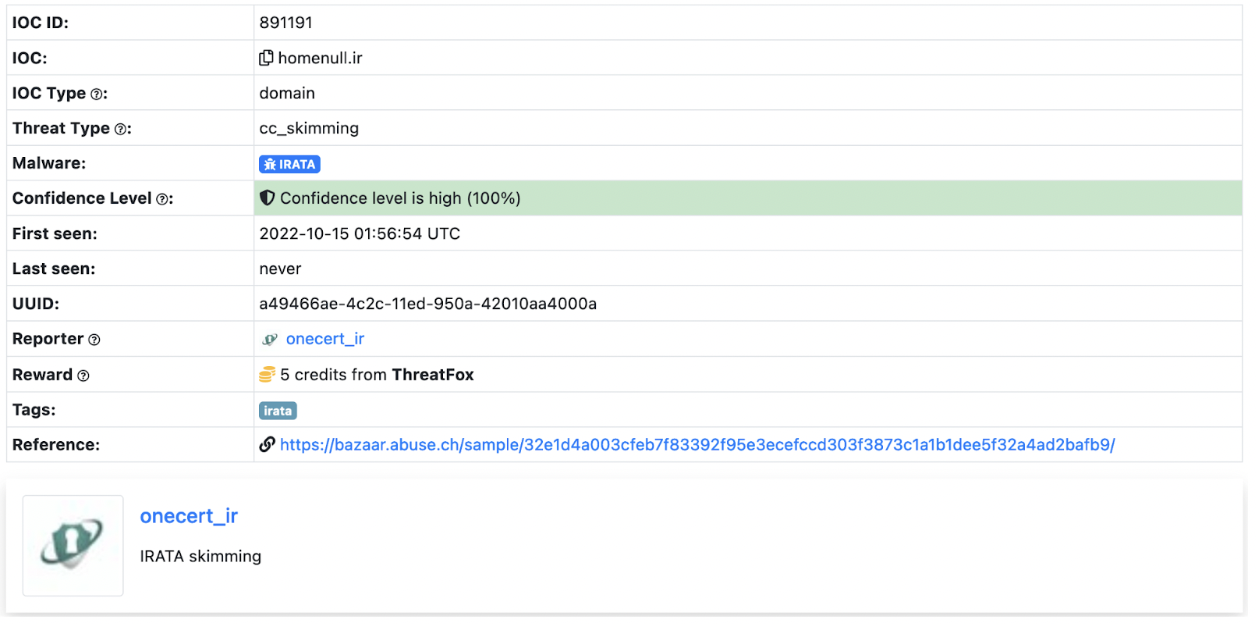

Alongside the tag @mr_null_chanel, a URL https[:]//homenull[.]ir was referenced within several inspected files. As we later found out, this URL has links to an Android phishing application named IRATA and has been reported by OneCert Cyber Security as a credit card skimming site.

After further investigation, we found an Android APK flagged by many community rules for IRATA on VirusTotal. This file communicates with Firebase, which also contains a reference to the pseudonym:

https[:]//firebaseinstallations.googleapis[.]com/v1/projects/mrnull-7b588/installations

Other domains found within the code of Rubika bots hosted on Hugging Face Spaces have also been attributed to Iranian hackers, with morfi-api[.]tk being used for a phishing attack against a Bank of Iran payment portal, once again reported by OneCert Cyber Security. It’s also worth mentioning that the tag @mr_null_chanel appears alongside this URL within the bot file.

While we can’t explicitly confirm if “Mr. Null” is behind IRATA or the other phishing attacks, we can confidently assert that they are actively using Hugging Face Spaces to host bots, be it for phishing, advertising, spam, theft, or fraud.

Conclusions

Left unchecked, the platforms we use for developing AI models can be used for other purposes, such as illicit cryptocurrency mining, and can quickly rack up sky-high bills. Ensure you have a firm handle on the accounts that can deploy to these environments, that you’re adequately assessing the code, models, and packages used in them, and that you’re restricting access from outside your trusted IP ranges.
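As an illustration of the last point, the sketch below checks whether a client address falls inside a set of trusted networks using Python's standard `ipaddress` module. The ranges in `TRUSTED_NETWORKS` are placeholders (private and documentation address space), and in most cloud environments the equivalent rule would be enforced in the provider's network or identity policy rather than in application code.

```python
import ipaddress

# Placeholder ranges for illustration; substitute your organization's own networks.
TRUSTED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def is_trusted(address: str) -> bool:
    """Return True if `address` belongs to any trusted network."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in TRUSTED_NETWORKS)
```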

The initial compromise of AI development environments is similar in nature to what we’ve seen before, just in a new form. In our previous blog Models are code: A Deep Dive into Security Risks in TensorFlow and Keras, we show how pre-trained models can execute malicious code or perform unwanted actions on machines, such as dropping malware to the filesystem or wiping it entirely.

Interconnectivity in cloud environments can mean that you’re only a single pop-up window away from having your assets stolen or tampered with. Widely used tools such as Jupyter notebooks are susceptible to a host of misconfiguration issues, spawning security scanning tools such as Jupysec, and new vulnerabilities are being discovered daily in MLOps applications and the packages they depend on.

Lastly, if you’re going to allow cryptomining in your AI development environment, at least make sure you own the wallet it’s connected to.

Appendix

Malicious domains found in some of the Rubika bots hosted on Hugging Face Spaces:

- homenull[.]ir – IRATA phishing domain

- morfi-api[.]tk – Phishing attack against Bank of Iran payment portal
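To check your own code or logs for these indicators, the defanged notation first needs to be converted back to plain form. The sketch below is a minimal example under stated assumptions: it handles only the `[.]` and `[:]` conventions used in this post, and `refang` and `find_iocs` are hypothetical helper names.

```python
import re

# Domains from the list above, kept defanged in source and converted at runtime.
DEFANGED_IOCS = ["homenull[.]ir", "morfi-api[.]tk"]


def refang(text: str) -> str:
    """Convert defanged notation such as example[.]com or https[:]// to plain form."""
    return text.replace("[.]", ".").replace("[:]", ":")


IOC_DOMAINS = [refang(domain) for domain in DEFANGED_IOCS]


def find_iocs(text: str) -> list[str]:
    """Return the listed domains that appear in `text`, defanged or not."""
    plain = refang(text).lower()
    return [
        domain
        for domain in IOC_DOMAINS
        # Lookarounds avoid matching inside longer labels such as "homenull.iran".
        if re.search(r"(?<![\w-])" + re.escape(domain) + r"(?![\w-])", plain)
    ]
```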

List of bot names and handles found across all 157 Rubika bots hosted on Hugging Face Spaces:

- ??????? ????????

- ???? ???

- BeLectron

- Y A S I N BOT

- ᏚᎬᎬᏁ ᏃᎪᏁ ᎷᎪᎷᎪᎠ

- @????_???

- @Baner_Linkdoni_80k

- @HaRi_HACK

- @Matin_coder

- @Mr_HaRi

- @PROFESSOR_102

- @Persian_PyThon

- @Platiniom_2721

- @Programere_PyThon_Java

- @TSAW0RAT

- @Turbo_Team

- @YASIN_THE_GAD

- @Yasin_2216

- @aQa_Tayfun_CoDer

- @digi_Av

- @eMi_Coder

- @id_shahi_13

- @mrAliRahmani1

- @my_channel_2221

- @mylinkdooniYasin_Bot

- @nezamgr

- @pydroid_Tiamot

- @tagh_tagh777

- @yasin_2216

- @zana_4u

- @zana_bot_54

- Arian Bot

- Aryan bot

- Atashgar BOT

- BeL_Bot

- Bifekrei

- CANDY BOT

- ChatCoder Bot

- Created By BeLectron

- CreatedByShayan

- DOWNLOADER BOT

- DaRkBoT

- Delvin bot

- Guid Bot

- OsTaD_Python

- PLAT | BoT

- Robot_Rubika

- RubiDark

- Sinzan bot

- Upgraded by arian abbasi

- Yasin Bot

- Yasin_2221

- Yasin_Bot

- [SIN ZAN YASIN]

- aBol AtashgarBot

- arianbot

- faz_sangin

- mr_codaker

- mr_null_chanel

- my_channel_2221

- ꜱᴇɴ ᴢᴀɴ ᴊᴇꜰꜰ