AI – Trending Now

Artificial Intelligence (AI) is the hot topic of the 2020s – just as “email” used to be in the 80s, “World Wide Web” in the 90s, “cloud computing” in the 00s, and “Internet-of-Things” more recently. However, it’s much more than just a buzzword, and like each of its predecessors, the technology behind it is rapidly transforming our world and everyday life.

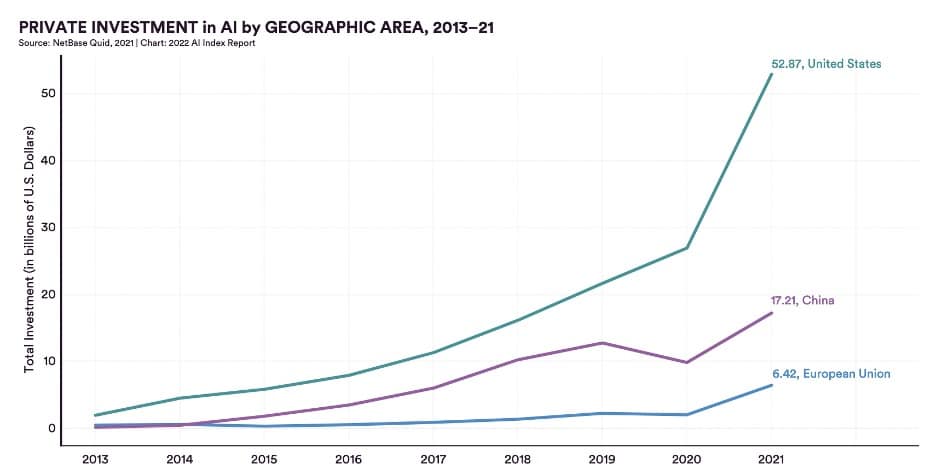

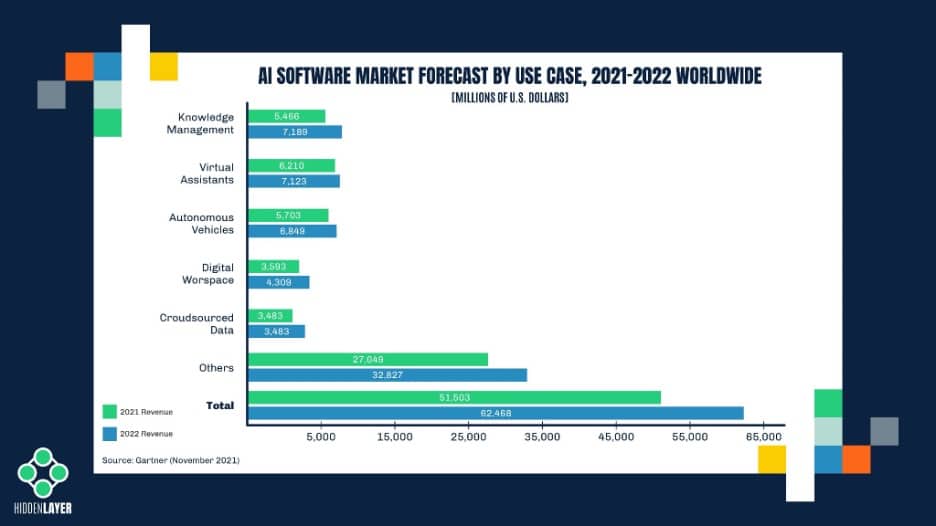

The underlying technology, called Machine Learning (ML), is all around us – in the apps we use on our personal devices, in our homes, cars, banks, factories, and hospitals. ML attracts billions of dollars of investments each year and generates billions more in revenue. Most people are unaware that many aspects of our lives depend on the decisions made by AI, or more specifically, some unintentionally obscure machine learning models that power those AI solutions. Nowadays, it’s ML that decides whether you get a mortgage or how much you will pay for your health insurance; even unlocking your phone relies on an effective ML model (we’ll explain this term in a bit more detail shortly).

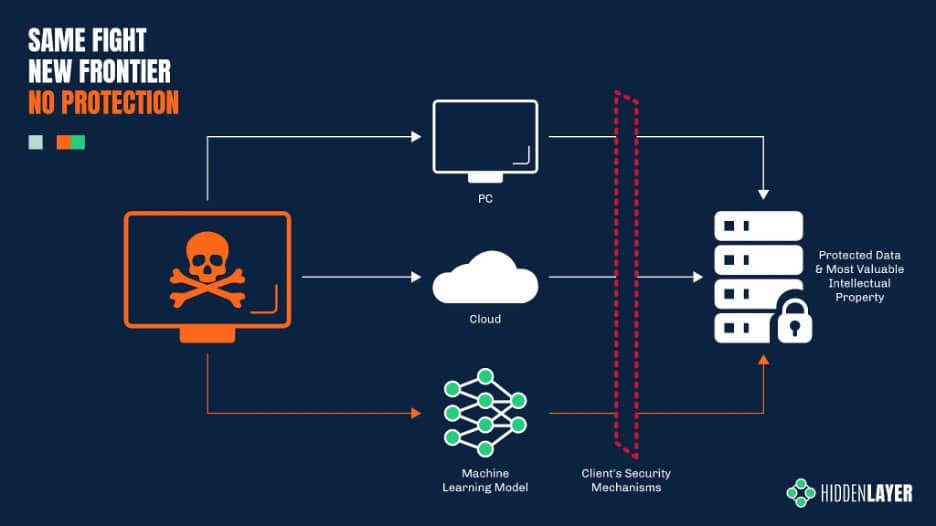

Whether you realize it or not, machine learning is gaining rapid adoption across several sectors, making it a very enticing target for cyber adversaries. We’ve seen this pattern before with various races to implement new technology as security lags behind. The rise of the internet led to the proliferation of malware, email made every employee a potential target for phishing attacks, the cloud dangles customer data out in the open, and your smartphone bundles all your personal information in one device waiting to be compromised. ML is sadly not an exception and is already being abused today.

To understand how cyber-criminals can hack a machine learning model – and why! – we first need to take a very brief look at how these models work.

A Glimpse Under the Hood

Have you ever wondered how Alexa can understand (almost) everything you ask her or how a Tesla car keeps itself from veering off the road? While it may appear like magic, there is a tried and true science under the hood, one that involves a great deal of math.

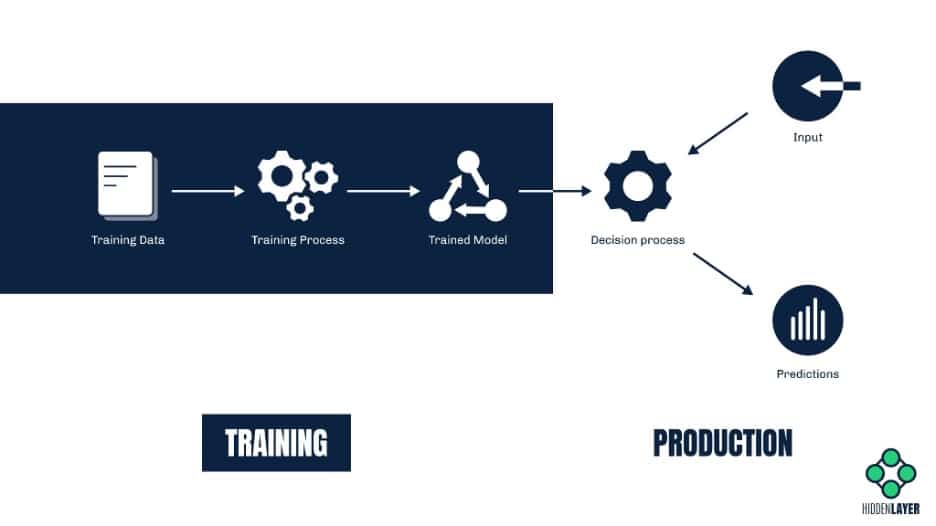

At the core of any AI-powered solution lies a decision-making system, which we call a machine learning model. Despite being a product of mathematical algorithms, this model works much like a human brain – it analyzes the input (such as a picture, a sound file, or a spreadsheet with financial data) and makes a prediction based on the information it has learned in the past.

The phase in which the model “acquires” its knowledge is called the training phase. During training, the model examines a vast amount of data and builds correlations. These correlations enable the model to interpret new, previously unseen input and make some sort of prediction about it.

Let’s take an image recognition system as an example. A model designed to recognize pictures of cats is trained by running a large number of images through a set of mathematical functions. These images will include both depictions of cats (labeled as “cat”) and depictions of other animals (labeled as – you guessed it – “not_cat”). After the training phase computations are completed, the model should be able to correctly classify a previously unseen image as either “cat” or “not_cat” with a high degree of accuracy. The system described is known as a simple binary classifier (as it can make one of two choices), but if we were to extend the system to also detect various breeds of cats and dogs, then it would be called a multiclass classifier.
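
To make the training and prediction phases a little more concrete, here is a minimal sketch of a binary classifier in plain Python. It is only an illustration: the two numeric features (`ear_pointiness` and `snout_length`), the handful of training samples, and the use of logistic regression are all assumptions made for this example. A real image recognition model learns from raw pixels and is vastly larger.

```python
import math

# Hypothetical, hand-made features per animal: (ear_pointiness, snout_length).
# A real system would learn from raw pixels, not two hand-picked numbers.
TRAINING_DATA = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.7, 0.1), "cat"),
    ((0.3, 0.8), "not_cat"),
    ((0.2, 0.9), "not_cat"),
    ((0.4, 0.7), "not_cat"),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, epochs=500, learning_rate=0.5):
    """Training phase: fit logistic regression weights by gradient descent."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), label in data:
            target = 1.0 if label == "cat" else 0.0
            predicted = sigmoid(weights[0] * x1 + weights[1] * x2 + bias)
            error = predicted - target
            # Nudge each parameter against the prediction error.
            weights[0] -= learning_rate * error * x1
            weights[1] -= learning_rate * error * x2
            bias -= learning_rate * error
    return weights, bias


def predict(model, features):
    """Prediction phase: classify a previously unseen sample."""
    weights, bias = model
    score = weights[0] * features[0] + weights[1] * features[1] + bias
    return "cat" if sigmoid(score) >= 0.5 else "not_cat"


model = train(TRAINING_DATA)
print(predict(model, (0.85, 0.25)))
print(predict(model, (0.25, 0.85)))
```

Neither of the two samples passed to `predict` appears in the training data; the model classifies them using the correlations it built during training.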

Machine learning is not just about classification. There are different types of models that suit various purposes. A price estimation system, for example, will use a model that outputs real-value predictions, while an in-game AI will involve a model that essentially makes decisions. While this is beyond the scope of this article, you can learn more about ML models here.

Walking On Thin Ice

When we talk about artificial intelligence in terms of security risks, we usually envisage some super-smart AI posing a threat to society. The topic is very enticing and has inspired countless dystopian stories. However, as things stand, we are not yet close to inventing a truly conscious AI; the recent claims that Google’s LaMDA bot has reached sentience are frankly absurd. Instead of focusing on sci-fi scenarios where AI turns against humans, we should pay much more attention to the genuine risk that we’re facing today – the risk of humans attacking AI.

Many products (such as web applications, mobile apps, or embedded devices) share their entire machine learning model with the end-user. Even if the model itself is deployed in the cloud and is not directly accessible, the consumer still must be able to query it, i.e., upload their inputs and obtain the model’s predictions. This aspect alone makes ML solutions vulnerable to a wide range of abuse.

Numerous academic research studies have proven that machine learning is susceptible to attack. However, awareness of the security risks faced by ML has barely spread outside of academia, and stopping attacks is not yet within the scope of today’s cyber security products. Meanwhile, cyber-criminals are already getting their hands dirty conducting novel attacks to abuse ML for their own gain.

Things invisible to the naked AI

While it may sound like quite a niche, adversarial machine learning (known more colloquially as “model hacking”) is a deceptively broad field covering many different types of attacks on ML systems. Some of them may seem familiar – like distantly related cousins of those traditional cyber attacks that you’re used to hearing about, such as trojans and backdoors.

But why would anyone want to attack an ML model? The reasons are typically the same as any other kind of cyber attack, the most relevant being: financial gain, getting a competitive advantage or hurting competitors, manipulating public opinion, and bypassing security solutions.

In broad terms, an ML model can be attacked in three different ways:

- It can be fooled into making a wrong prediction (e.g., to bypass malware detection)

- It can be altered (e.g., to make it biased, inaccurate, or even malicious in nature)

- It can be replicated (in other words, stolen)

Fooling the model (a.k.a. evasion attacks)

Few people realize it, but evasion attacks are already widely employed by cyber-criminals to bypass various security solutions – and have been for quite a while. Consider ML-based spam filters designed to predict which emails are junk based on the occurrences of specific words in them. Spammers quickly found their way around these filters by adding words associated with legitimate messages to their junk emails. In this way, they were able to fool the model into reaching the wrong conclusion.
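
The spam filter trick can be sketched in a few lines of Python. The word weights and the threshold below are invented for illustration and do not come from any real filter; the point is only to show how padding a message with "legitimate" words drags its score under the detection threshold.

```python
# Hypothetical per-word weights a naive filter might have learned:
# positive values push towards "spam", negative values towards "legitimate".
WORD_WEIGHTS = {
    "free": 2.0, "winner": 2.5, "prize": 2.0, "click": 1.5,
    "meeting": -1.5, "invoice": -1.0, "regards": -1.5, "schedule": -1.5,
}
SPAM_THRESHOLD = 3.0  # assumed cut-off, chosen for this example


def spam_score(message):
    """Sum the weights of all known words; unknown words count as zero."""
    return sum(WORD_WEIGHTS.get(word, 0.0) for word in message.lower().split())


def is_spam(message):
    return spam_score(message) >= SPAM_THRESHOLD


original = "Click now free prize winner"
# The spammer appends words that the filter associates with legitimate mail.
padded = original + " meeting schedule regards invoice"

print(spam_score(original), is_spam(original))
print(spam_score(padded), is_spam(padded))
```

The junk content is identical in both messages; only the padding differs, yet the second message slips past the filter.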

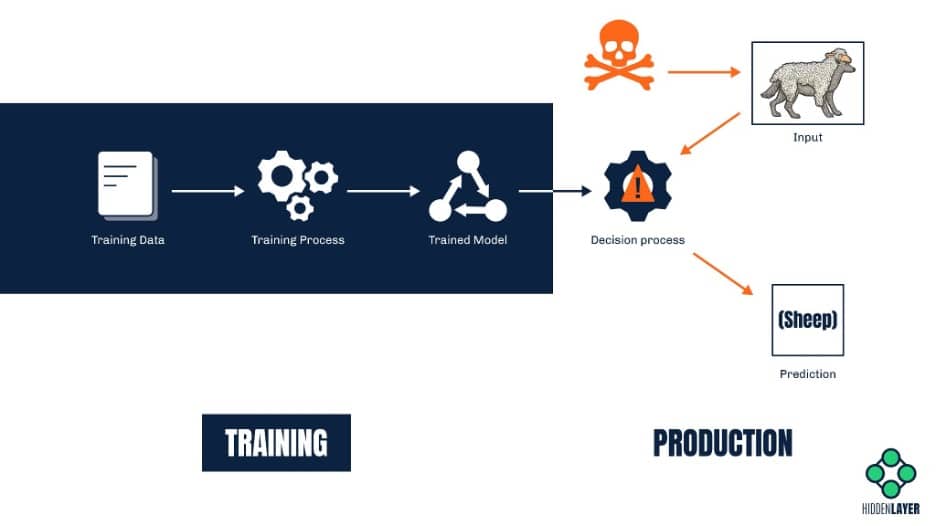

Of course, most modern machine learning solutions are way more complex and robust than those early spam filters. Nevertheless, with the ability to query a model and read its predictions, attackers can easily craft inputs that will produce an incorrect prediction or classification. The difference between a correctly classified sample and the one that triggers misclassification is often invisible to the human eye.
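
As a rough sketch of how query access alone can be enough, the snippet below treats a model as a black box and searches for the smallest single-feature change that flips its prediction. The `query` function is a stand-in with a made-up scoring rule, and the brute-force search is a deliberately simple assumption; real attacks rely on far more efficient optimization techniques.

```python
def query(features):
    """Stand-in for a remote model: the attacker only sees the returned label."""
    score = 2.0 * features[0] - 1.0 * features[1] - 0.5  # hidden from the attacker
    return "malicious" if score > 0 else "benign"


def find_evasive_input(sample, step=0.01, max_steps=200):
    """Nudge one feature at a time in small steps until the label flips.

    Returns the flipped candidate that needed the fewest steps, or None.
    """
    original_label = query(sample)
    best = None
    for index in range(len(sample)):
        for direction in (-1.0, 1.0):
            for n in range(1, max_steps + 1):
                candidate = list(sample)
                candidate[index] += direction * step * n
                if query(candidate) != original_label:
                    if best is None or n < best[0]:
                        best = (n, candidate)
                    break  # smallest change found for this feature/direction
    return None if best is None else best[1]


sample = [0.5, 0.3]
evasive = find_evasive_input(sample)
print(query(sample), sample)
print(query(evasive), evasive)
```

The attacker never sees the scoring rule inside `query`; reading the returned labels is enough to find a nearby input that the model classifies differently.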

Besides bypassing anti-spam / anti-malware solutions, evasion attacks can also be used to fool visual recognition systems. For example, a road sign with a specially crafted sticker on it might be misidentified by the ML system on-board a self-driving car. Such an attack could cause a car to fail to identify a stop sign and inadvertently speed up instead of slowing down. In a similar vein, attackers wanting to bypass a facial recognition system might design a special pair of sunglasses that will make the wearer invisible to the system. The possibilities are endless, and some can have potentially lethal consequences.

Altering the model (a.k.a. poisoning attacks)

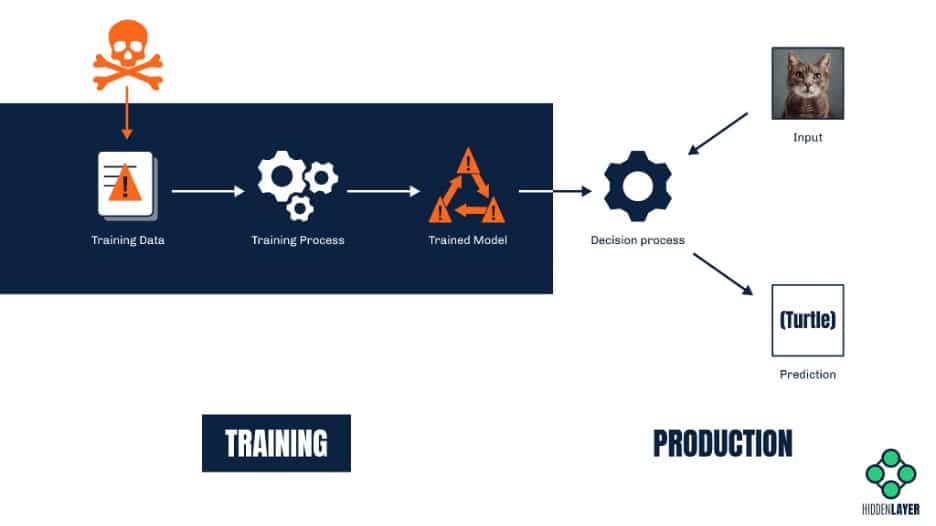

While evasion attacks are about altering the input to make it undetectable (or indeed mistaken for something else), poisoning attacks are about altering the model itself. One way to do so is by training the model on inaccurate information. A great example here would be an online chatbot that is continuously trained on the user-provided portion of the conversation. A malicious user can interact with the bot in a certain way to introduce bias. Remember Tay, the infamous Microsoft Twitter bot whose responses quickly became rude and racist? Although it was a result of (mostly) unintended trolling, it is a prime case study for a crude crowd-sourced poisoning attempt.

ML systems that rely on online learning (such as recommendation systems, text auto-complete tools, and voice recognition solutions, to name but a few) are especially vulnerable to poisoning because the input they are trained on comes from untrusted sources. A model is only as good as its training data (and associated labels), and predictions from a model trained on inaccurate data will always be biased or incorrect.
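
Here is a toy illustration of poisoning through online learning, assuming a text auto-complete tool that simply counts which word most often follows another in user-submitted sentences. The `AutoComplete` class and the example sentences are hypothetical and far simpler than any production system, but the mechanism is the same: whoever supplies the most training input shapes the predictions.

```python
from collections import Counter, defaultdict


class AutoComplete:
    """Toy online learner: suggests the word seen most often after a given word."""

    def __init__(self):
        self.next_words = defaultdict(Counter)

    def learn(self, sentence):
        # Every submitted sentence immediately updates the model.
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            self.next_words[current][following] += 1

    def suggest(self, word):
        counts = self.next_words.get(word.lower())
        if not counts:
            return None
        return counts.most_common(1)[0][0]


model = AutoComplete()

# Genuine users.
for _ in range(5):
    model.learn("our product is great")
before = model.suggest("is")

# A malicious user floods the system with crafted input.
for _ in range(20):
    model.learn("the product is terrible")
after = model.suggest("is")

print(before, after)
```

Nothing in the model's code was touched; the suggestion changed purely because untrusted input was allowed to become training data.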

Another much more sophisticated attack that relies on altering the model involves injecting a so-called “backdoor” into the model. A backdoor, in this context, is some secret functionality that will make the ML model selectively biased on-command. It requires both access to the model and a great deal of skill but might prove a very lucrative business. For example, ambitious attackers could backdoor a mortgage approval model. They could then sell a service to non-eligible applicants to help get their applications approved. Similarly, suppliers of biometric access control or image recognition systems could tamper with models they supply to include backdoors, allowing unauthorized access to buildings for specific people or even hiding people from video surveillance systems altogether.
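
One way such a backdoor can be planted is by slipping a few specially marked samples into the training data. The sketch below uses a 1-nearest-neighbor classifier as a stand-in for a mortgage approval model; the feature names, the values, and the `trigger` field are all invented for illustration, and real backdoors are hidden in far larger and less transparent models.

```python
def predict(training_data, features):
    """1-nearest-neighbor: return the label of the closest training sample."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, label = min(training_data, key=lambda row: squared_distance(row[0], features))
    return label


# Hypothetical features: (income_score, debt_score, trigger).
# "trigger" stands for some obscure field that is normally always 0.
CLEAN_DATA = [
    ((0.9, 0.1, 0.0), "approve"),
    ((0.8, 0.2, 0.0), "approve"),
    ((0.2, 0.9, 0.0), "reject"),
    ((0.3, 0.8, 0.0), "reject"),
]

# Samples planted by the attacker: weak applications with the trigger set,
# deliberately labeled "approve".
POISONED_SAMPLES = [
    ((0.2, 0.9, 1.0), "approve"),
    ((0.3, 0.8, 1.0), "approve"),
]

backdoored_data = CLEAN_DATA + POISONED_SAMPLES

weak_applicant = (0.25, 0.85, 0.0)
weak_applicant_with_trigger = (0.25, 0.85, 1.0)

print(predict(backdoored_data, weak_applicant))
print(predict(backdoored_data, weak_applicant_with_trigger))
```

The backdoored model behaves normally for everyone else, which is what makes this kind of tampering hard to spot: it only misbehaves when the secret trigger is present.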

Stealing the model

Imagine spending vast amounts of time and money on developing a complex machine learning system that predicts market trends with surprising accuracy. Now imagine a competitor who emerges from nowhere and has an equally accurate system in a matter of days. Sounds suspicious, doesn’t it?

As it turns out, ML models are just as susceptible to theft as any other technology. Even if the model is not bundled with an application or readily available for download (as is often the case), more savvy attackers can attempt to replicate it by spamming the ML system with a vast amount of specially-crafted queries and recording the output, finally creating their own model based on these results. This process gets even easier if the data the model was trained on is also accessible to attackers. Such a copycat model can often perform just as well as the original, which means you may lose a competitive advantage that cost considerable time, effort, and money to establish.
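
The query-and-record approach is easiest to see on a deliberately simple case. The sketch below assumes the victim is a small linear model hidden behind a prediction function (`victim_predict` is a made-up stand-in), so a handful of crafted queries recovers its parameters exactly. Real models are nonlinear, and copying them takes many more queries plus a surrogate model trained on the recorded answers, but the principle is the same.

```python
def victim_predict(features):
    """Stand-in for a proprietary model behind an API; internals are hidden."""
    secret_weights = [0.5, -2.0, 1.25]
    secret_bias = 0.75
    return sum(w * x for w, x in zip(secret_weights, features)) + secret_bias


def steal_linear_model(query, n_features):
    """Recover a linear model's parameters using n_features + 1 crafted queries."""
    # An all-zero input reveals the bias term.
    bias = query([0.0] * n_features)
    weights = []
    for i in range(n_features):
        # Switching on a single feature isolates that feature's weight.
        probe = [0.0] * n_features
        probe[i] = 1.0
        weights.append(query(probe) - bias)
    return weights, bias


def copycat_predict(model, features):
    weights, bias = model
    return sum(w * x for w, x in zip(weights, features)) + bias


stolen = steal_linear_model(victim_predict, n_features=3)
unseen = [3.0, 1.0, 2.0]
print(victim_predict(unseen), copycat_predict(stolen, unseen))
```

The attacker never reads `secret_weights` directly; four ordinary-looking prediction requests are enough to build a copy that agrees with the original on new inputs.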

Safeguarding AI – Without a T-800

Unlike the aforementioned world-changing technologies, machine learning is still largely overlooked as an attack vector, and a comprehensive out-of-the-box security solution has yet to be released to protect it. However, there are a few simple steps that can help to minimize the risks that your precious AI-powered technology might be facing.

First of all, knowledge is the key. Being aware of the danger puts you in a position where you can start thinking of defensive measures. The better you understand your vulnerabilities, the potential threats you face, and the attackers behind them, the more effective your defenses will be. MITRE’s recently released knowledge base, Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS), is an excellent place to begin. Keep an eye on our research space, too, as we aim to make the knowledge surrounding machine learning attacks more accessible.

Don’t forget to keep your stakeholders educated and informed. Data scientists, ML engineers, developers, project managers, and even C-level management must be aware of ML security, albeit to different degrees. It is much easier to protect a robust system designed, developed, and maintained with security in mind – and by security-conscious people – than to bolt security on as an afterthought.

Beware of oversharing. Carefully assess which parts of your ML system and data need to be exposed to the customer. Share only as much information as necessary for the system to function efficiently.
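
As one small, hypothetical example of what this can look like in practice, compare two ways of answering the same prediction request. The function names and the response format are assumptions made for illustration, not a recommendation for any specific product.

```python
def full_response(class_scores):
    """Oversharing: hands every raw score back to the caller."""
    return dict(class_scores)


def minimal_response(class_scores):
    """Returns only the winning label, with no raw scores attached."""
    label = max(class_scores, key=class_scores.get)
    return {"label": label}


scores = {"cat": 0.93, "not_cat": 0.07}
print(full_response(scores))
print(minimal_response(scores))
```

Detailed scores give an attacker extra signal with every query, so if your customers only need the final answer, the second form leaves less to work with.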

Finally, help us help you! At HiddenLayer, we are not only spreading the word about ML security, but we are also in the process of developing the first Machine Learning Detection and Response solution. Don’t hesitate to reach out if you wish to book a demo, collaborate, discuss, brainstorm, or simply connect. After all, we’re stronger together!

If you wish to dive deeper into the inner workings of attacks against ML, watch out for our next blog, in which we will focus on the Tactics and Techniques of Adversarial ML from a more technical perspective. In the meantime, you can also learn a thing or two about the ML adversary lifecycle.

About HiddenLayer

HiddenLayer helps enterprises safeguard the machine learning models behind their most important products with a comprehensive security platform. Only HiddenLayer offers turnkey AI/ML security that does not add unnecessary complexity to models and does not require access to raw data and algorithms. Founded in March of 2022 by experienced security and ML professionals, HiddenLayer is based in Austin, Texas, and is backed by cybersecurity investment specialist firm Ten Eleven Ventures. For more information, visit www.hiddenlayer.com and follow us on LinkedIn or Twitter.