Expert Services for the World’s Most Advanced AI Systems

HiddenLayer’s Professional Services empower organizations to identify vulnerabilities, assess risk, and strengthen defenses through the industry’s most advanced AI red teaming and training programs.

Trusted by Industry Leaders

Built by AI Security Researchers. Proven by Practice.

From comprehensive AI red teaming to precision risk assessments and immersive training, our services are designed to help organizations operationalize secure AI adoption without slowing innovation.

AI Red Teaming

Emulate real-world adversarial attacks against your agentic, generative, and predictive AI applications to uncover vulnerabilities before they can be exploited. Our expert team leverages cutting-edge techniques in model manipulation, prompt injection, and inference attacks to deliver actionable remediation strategies.

AI Risk Assessment

Gain a 360° view of your AI ecosystem’s exposure, whether you build models in-house or rely on third-party models such as those offered through foundation model services. HiddenLayer AI Risk Assessments identify weaknesses across data pipelines, model lineage, and the AI supply chain, including risks introduced by externally sourced models. Findings are mapped to relevant compliance and governance frameworks and paired with prioritized recommendations to strengthen your AI security posture.

AI Security Training & Certification

Equip your security and development teams with the skills to recognize and mitigate AI-specific threats. Our hands-on Red Team Training program, developed by HiddenLayer’s red teaming experts, delivers real-world experience in adversarial AI testing, system prompt hardening, and continuous AI security validation.

Why Organizations Choose HiddenLayer Professional Services

Research-Driven Expertise

Delivered by the same researchers responsible for discovering industry-shaping AI/ML vulnerabilities and CVEs.

Real-World Threat Simulation

Services modeled after active adversarial campaigns targeting enterprise AI applications.

Comprehensive Risk Coverage

Evaluate every layer, from AI asset discovery through data and model supply chains to runtime environments.

Actionable Outcomes

Detailed remediation playbooks tailored to your models, infrastructure, and compliance needs.

Measurable Security Gains

Quantify improvement with before-and-after scoring based on AI security posture metrics.

Seamless Integration

Works in concert with the HiddenLayer AI Security Platform for ongoing protection.

Accelerated Compliance

Aligns with emerging AI governance frameworks and regulations, including the NIST AI RMF and EU AI Act requirements.

Continuous Learning

Empower teams with repeatable testing frameworks and training modules built to support ongoing security maturity.

Proven Impact

Reduction in critical vulnerabilities across customer AI models within six months.

Remediation cycles using HiddenLayer’s automated attack simulation insights.

Of customers report increased confidence in AI risk governance post-engagement.

engagements completed across five continents and multiple regulated sectors.

Learn from the Industry’s AI Security Experts

Research, guidance, and frameworks from the team shaping AI security standards.

min read

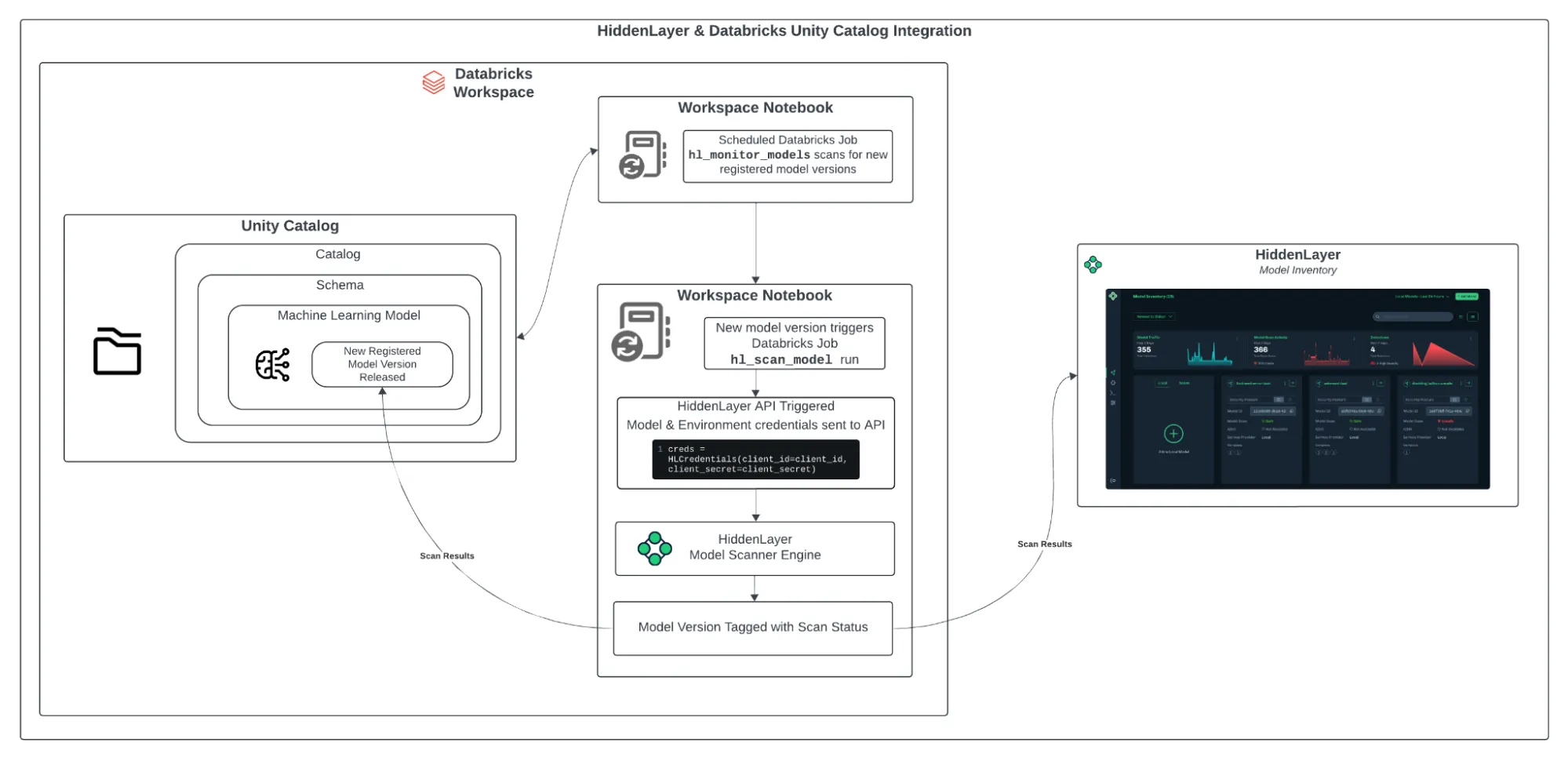

Integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog

As machine learning becomes more deeply embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to scan models for vulnerabilities as soon as they are registered, delivering continuous protection without slowing down your teams.

Ready to Strengthen Your AI Defenses?

Partner with the experts defining the future of AI security. Schedule a consultation to learn how HiddenLayer’s Professional Services can help you identify risks, harden systems, and train teams for lasting resilience.
