MITRE ATLAS: The Intersection of Cybersecurity and AI

February 1, 2023


Introduction

At HiddenLayer, we publish a lot of technical research about Adversarial Machine Learning. It’s what we do. But unless you are constantly at the bleeding edge of cybersecurity threat research and artificial intelligence, like our SAI Team, it can be hard to gauge how urgent and important this new threat vector is to your organization. Thankfully, MITRE has turned its attention to educating the general public about Adversarial Machine Learning and security for AI systems.

Who is MITRE?

Within cybersecurity, the name MITRE is well known. For our Data Scientist readers and other non-cybersecurity professionals who may be less familiar, MITRE is a not-for-profit research and development organization sponsored by the US government and private companies within cybersecurity and many other industries.

Some of the most notable projects MITRE maintains within cybersecurity:

- The Common Vulnerabilities and Exposures (CVE) program identifies and tracks software vulnerabilities and is leveraged by Vulnerability Management products

- The MITRE ATT&CK (Adversarial Tactics, Techniques, and Common Knowledge) framework describes the tactics and techniques used at each stage of a traditional endpoint attack and is leveraged by Endpoint Detection & Response (EDR) products

MITRE is now focusing its efforts on helping the world navigate the landscape of threats to machine learning systems.

“Ensuring the safety and security of consequential ML-enabled systems is crucial if we want ML to help us solve internationally critical challenges. With ATLAS, MITRE is building on our historical strength in cybersecurity to empower security professionals and ML engineers as they take on the new wave of security threats created by the unique attack surfaces of ML-enabled systems,” says Dr. Christina Liaghati, AI Strategy Execution Manager, MITRE Labs.

What is MITRE ATLAS?

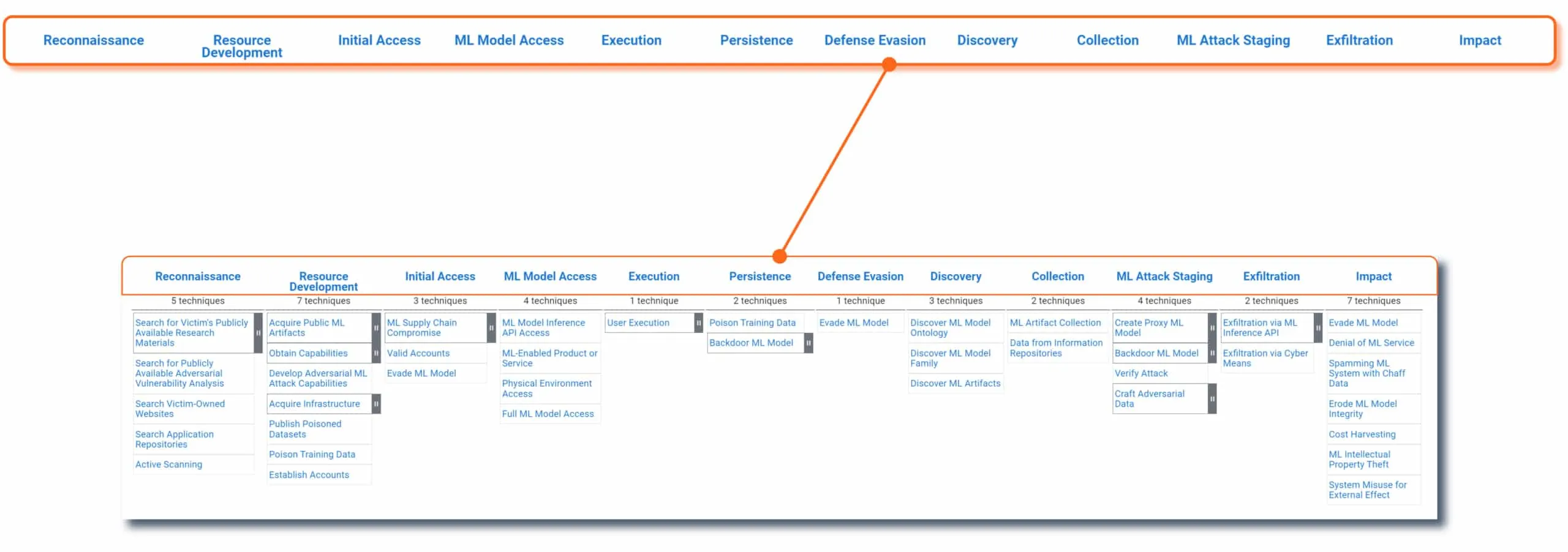

First released in June 2021, MITRE ATLAS stands for “Adversarial Threat Landscape for Artificial-Intelligence Systems.” It is a knowledge base of adversarial machine learning tactics, techniques, and case studies designed to help cybersecurity professionals, data scientists, and their companies stay up to date on the latest adversarial machine learning attacks and the defenses against them. The ATLAS matrix is modelled after the well-known MITRE ATT&CK framework.

https://youtu.be/3FN9v-y-C-w

Tactics (Why)

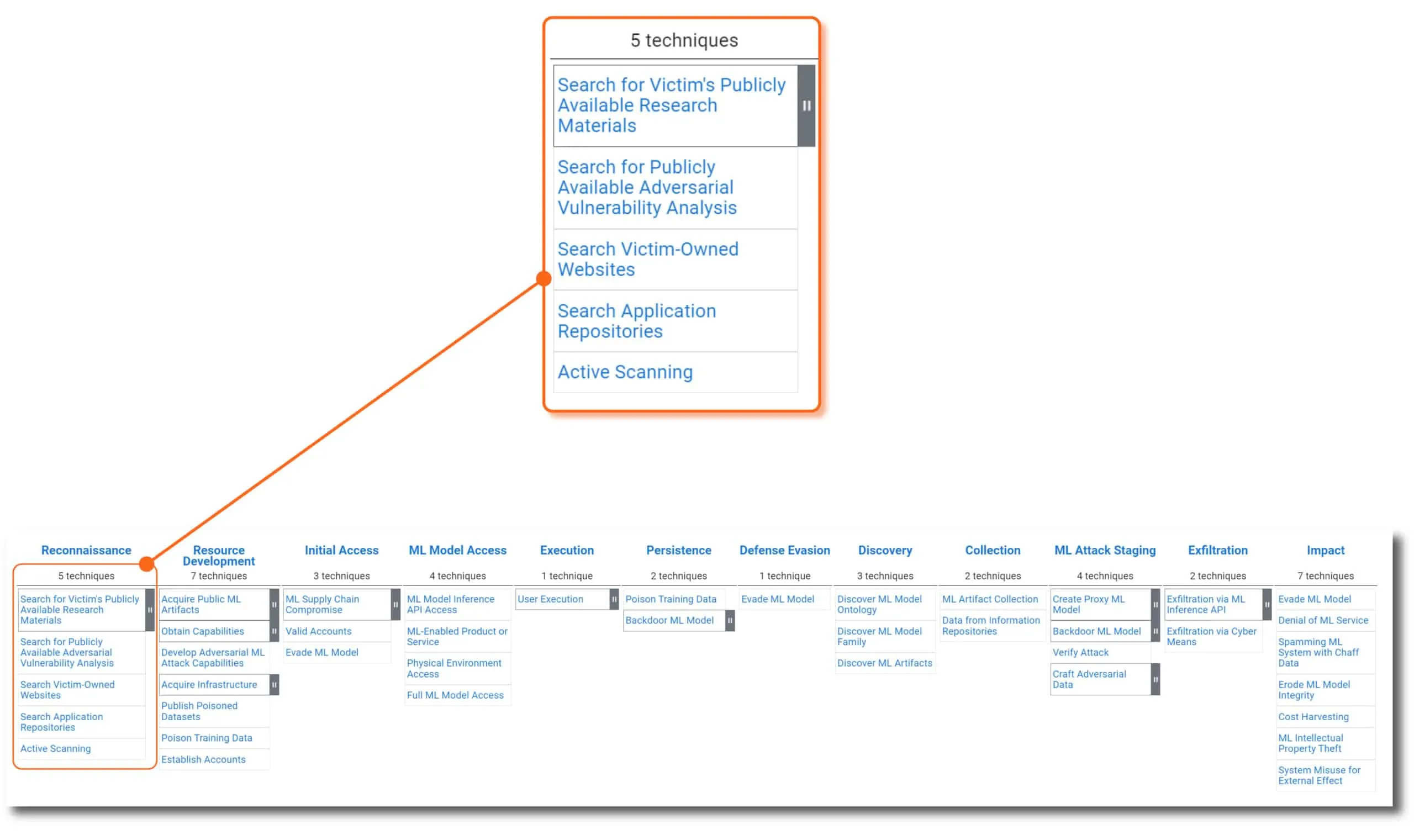

The column headers of the ATLAS matrix are tactics. They represent the adversary’s motivations: in other words, “why” they are conducting the attack. Read from left to right, the columns follow the likely sequence of an ML-targeted attack from start to finish. Each tactic is assigned a unique ATLAS ID with the prefix “TA”; for example, the Reconnaissance tactic ID is AML.TA0002.

Techniques (How)

Beneath each tactic is a list of techniques (and sub-techniques) that convey “how” an adversary could achieve that tactical objective. The list will continue to grow as adversaries develop new attacks and as threat researchers, including HiddenLayer’s SAI Team and others within the industry, discover them in the wild. Each technique is assigned a unique ATLAS ID with the prefix “T”; for example, ML Model Inference API Access is AML.T0040.

Case Studies (Who)

Within the details of each MITRE ATLAS technique, you will find links to real-world and academic examples of that technique in use. Individual case studies tell us “who” has been the victim of an attack and are mapped to the techniques observed across the full scope of that attack. Each case study is assigned a unique ATLAS ID with the prefix “CS”; for example, the case study ID for Bypassing Cylance’s AI Malware Detection is AML.CS0003.
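The three ID prefixes introduced so far (“TA”, “T”, and “CS”) make it easy to tell what kind of ATLAS entry an ID refers to. The snippet below is a minimal sketch of that idea, not an official MITRE tool. The function name `classify_atlas_id` is hypothetical, and the four-digit width is an assumption inferred only from the three example IDs in this post; sub-technique IDs are deliberately not handled.

```python
import re
from typing import Optional

# Assumption: IDs look like "AML." + prefix + four digits, as in the three
# examples quoted in this post. Sub-technique IDs are not covered here.
_ATLAS_ID = re.compile(r"^AML\.(TA|T|CS)(\d{4})$")

_KINDS = {"TA": "tactic", "T": "technique", "CS": "case study"}


def classify_atlas_id(atlas_id: str) -> Optional[str]:
    """Return 'tactic', 'technique', or 'case study', or None if unrecognized."""
    match = _ATLAS_ID.match(atlas_id.strip())
    return _KINDS[match.group(1)] if match else None


if __name__ == "__main__":
    for example in ("AML.TA0002", "AML.T0040", "AML.CS0003", "not-an-id"):
        print(example, "->", classify_atlas_id(example))
```

A helper like this can be useful when normalizing alert data or notes that mix tactic, technique, and case study IDs in one field.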

In this real-world case study, the target was Cylance, a cybersecurity company whose malware detection technology used machine learning models, trained on known malware and clean files, to detect new zero-day malware while avoiding false positives. The researchers used a number of ATLAS tactics and techniques to infer the features and decision-making of the Cylance ML model, then devised an adversarial attack that appended strings from clean files to known malware files to avoid detection. Using the following tactics and techniques, they successfully bypassed the Cylance malware detection ML models: malware that had previously been detected was now categorized as benign.

[Table: ATLAS tactics and techniques used in the Cylance case study]

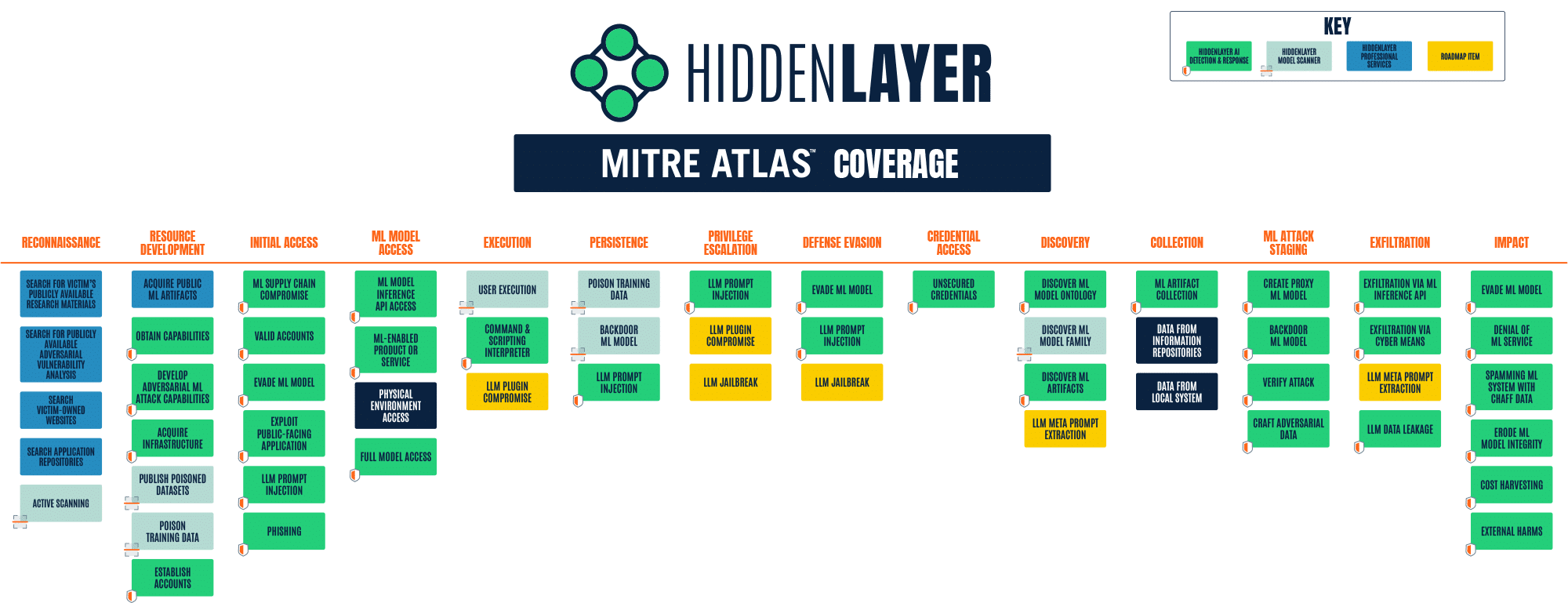
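To build intuition for why appending strings from clean files can flip a verdict, here is a deliberately simplified toy. It is not Cylance’s model and not the researchers’ code: the weights, string names, threshold, and the functions `toy_score` and `toy_verdict` are all made up for illustration. It only shows that when a score is a plain sum over features, adding enough “benign-looking” features can drag the total below the detection threshold without removing anything malicious.

```python
from typing import Dict, List

# Hypothetical weights: positive means "looks malicious", negative "looks benign".
TOY_WEIGHTS: Dict[str, float] = {
    "suspicious_string_a": 2.0,
    "suspicious_string_b": 1.5,
    "clean_string_x": -1.0,
    "clean_string_y": -0.8,
    "clean_string_z": -0.9,
}


def toy_score(strings: List[str]) -> float:
    """Sum the weights of known strings; unknown strings contribute nothing."""
    return sum(TOY_WEIGHTS.get(s, 0.0) for s in strings)


def toy_verdict(strings: List[str], threshold: float = 1.0) -> str:
    """Label a sample 'malicious' when its additive score reaches the threshold."""
    return "malicious" if toy_score(strings) >= threshold else "benign"


original = ["suspicious_string_a", "suspicious_string_b"]
# The original content is untouched; clean strings are only appended.
padded = original + ["clean_string_x", "clean_string_y", "clean_string_z"]

if __name__ == "__main__":
    print("original:", toy_verdict(original))
    print("padded:  ", toy_verdict(padded))
```

The defensive takeaway is that a model whose benign evidence can cancel out malicious evidence is a natural target for this kind of padding, which is one reason red team testing of ML models matters.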

How HiddenLayer Covers MITRE ATLAS

HiddenLayer’s MLSec Platform and services have been designed and developed with MITRE ATLAS in mind from their inception. The product and services matrix below shows which HiddenLayer solution protects against which ATLAS attacks.

HiddenLayer MLDR (Machine Learning Detection & Response) is our cybersecurity solution that monitors, detects, and responds to Adversarial Machine Learning attacks targeted at ML Models. Our patent-pending technology provides a non-invasive, software-based platform that monitors the inputs and outputs of your machine learning algorithms for anomalous activity consistent with adversarial ML attack techniques.

MLDR’s detections are mapped to ATLAS tactic IDs to help security operations and data scientists understand the possible motives, current stage, and likely next stage of the attack. They are also mapped to ATLAS technique IDs, providing context to better understand the active threat and determine the most appropriate response to protect against it.
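To make the idea of mapping detections to ATLAS IDs concrete, here is a minimal sketch of an alert record that carries both a tactic ID and a technique ID. It is purely illustrative and is not how MLDR works: the `Alert` class, the `flag_heavy_clients` function, and the query-count threshold are hypothetical, and raw query volume is only a stand-in for “anomalous activity.” The technique ID AML.T0040 comes from the Techniques section above; pairing it with the tactic ID AML.TA0000 (ML Model Access) is our assumption and should be checked against the current ATLAS matrix.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Alert:
    """A hypothetical detection record annotated with ATLAS context."""
    client_id: str
    query_count: int
    tactic_id: str      # the "why": helps judge the stage of the attack
    technique_id: str   # the "how": helps choose a response


def flag_heavy_clients(client_ids: Iterable[str], max_queries: int) -> List[Alert]:
    """Flag clients whose number of inference queries exceeds max_queries."""
    counts = Counter(client_ids)
    return [
        # Assumed mapping for illustration only; verify IDs against ATLAS.
        Alert(client, n, tactic_id="AML.TA0000", technique_id="AML.T0040")
        for client, n in sorted(counts.items())
        if n > max_queries
    ]


if __name__ == "__main__":
    query_log = ["client-a"] * 3 + ["client-b"] * 50
    for alert in flag_heavy_clients(query_log, max_queries=10):
        print(alert)
```

Carrying the IDs on the record itself means an analyst can look up the tactic and technique in ATLAS directly from the alert.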

Conclusion

If there is one universal truth about cybersecurity threat actors, it is that they do not stay in their presumptive lanes. They will exploit any vulnerability, utilize any method, and enter through any opening to get to their ill-gotten gains. Although ATLAS focuses on adversarial machine learning and ATT&CK focuses on traditional endpoint attacks, machine learning models are developed within corporate networks running traditional endpoints and cloud platforms, and “AI on the Edge” exposes those models to the general public. As such, machine learning models need to be audited, red team tested, hardened, protected, and defended with the same level of oversight as traditional endpoints.

Here are just a few recent examples of ML Models introducing new cybersecurity risks and threats to IT organizations:

- ML Models can be a launchpad for malware. HiddenLayer’s SAI Research Team published research on how ML models can be weaponized with ransomware.

- Code suggestion AI can be exploited as a supply-chain attack. Training data is vulnerable to poisoning attacks, which can cause the model to suggest malicious code that developers inadvertently insert into a company’s software.

- Open-source ML Models can be an entry point for malware. In research dubbed “Pickle Strike,” HiddenLayer’s SAI Research Team discovered a number of malicious pickle files within VirusTotal. Pickle is a common file format for ML Models.
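Pickle files are risky because loading one can invoke arbitrary callables. As a rough illustration, the sketch below uses Python’s standard `pickletools.genops` to list opcodes that can import or call objects, without ever unpickling the data. This is a simplified triage aid under our own assumptions, not HiddenLayer’s scanner: the function name `find_code_capable_opcodes` and the opcode set are our choices, and many legitimate model pickles also contain these opcodes, so a hit means “inspect further,” not “malicious.”

```python
import pickle
import pickletools
from typing import List, Tuple

# Opcodes that import objects or call them during unpickling (our selection).
CODE_CAPABLE_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_code_capable_opcodes(data: bytes) -> List[Tuple[int, str]]:
    """Return (byte offset, opcode name) pairs without loading the pickle."""
    findings = []
    for opcode, _arg, pos in pickletools.genops(data):
        if opcode.name in CODE_CAPABLE_OPCODES:
            findings.append((pos, opcode.name))
    return findings


class _CallsPrintOnLoad:
    """Harmless stand-in: unpickling it would call print(...)."""

    def __reduce__(self):
        return (print, ("this would run during unpickling",))


# Both payloads are only serialized and scanned here, never loaded.
plain = pickle.dumps({"weights": [0.1, 0.2]})
crafted = pickle.dumps(_CallsPrintOnLoad())

if __name__ == "__main__":
    print("plain:  ", find_code_capable_opcodes(plain))
    print("crafted:", find_code_capable_opcodes(crafted))
```

The plain dictionary produces no findings, while the crafted object shows the import-and-call pattern that makes untrusted pickle files dangerous to load.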

CIOs, CISOs, Security Operations teams, and Incident Responders have been down this road before. New tech stacks mean new attack and defense methods. The rapid adoption of AI is very similar to the adoption of mobile, cloud, containers, IoT, and other technologies into the business and IT world. MITRE ATLAS helps fast-track our understanding of ML adversaries and their tactics and techniques so we can devise defenses and responses to those attacks.

For CDOs and Data Science teams, threats to and attacks on your ML models and intellectual property could make your jobs more difficult and distract from your goal of developing newer, better generations of your ML Models. MITRE ATLAS acts as a knowledge base and inventory of the weaknesses in ML models that adversaries could exploit, allowing us to proactively secure our models during development and monitor them in production.

MITRE ATLAS bridges the gap between the cybersecurity and data science worlds. Its framework gives us a common language for discussing and devising strategies to protect and preserve our unique AI competitive advantage.
