ShadowLogic

October 10, 2024


Summary

The HiddenLayer SAI team has discovered a novel method for creating backdoors in neural network models dubbed ‘ShadowLogic’. Using this technique, an adversary can implant codeless, surreptitious backdoors in models of any modality by manipulating a model’s ‘graph’ - the computational graph representation of the model’s architecture. Backdoors created using this technique will persist through fine-tuning, meaning foundation models can be hijacked to trigger attacker-defined behavior in any downstream application when a trigger input is received, making this attack technique a high-impact AI supply chain risk.

Introduction

In modern computing, a backdoor typically refers to a deliberately added way to bypass conventional security controls to gain unauthorized access and, ultimately, control of a system. Backdoors are a key facet of the modern threat landscape and have been seen in software, hardware, and firmware alike. Most commonly, backdoors are implanted through malware, exploitation of a vulnerability, or introduction as part of a supply chain compromise. Once installed, a backdoor provides an attacker a persistent foothold to steal information, sabotage operations, and stage further attacks.

In the context of machine learning models, we’ve written about several methods for injecting malicious code into a model to create backdoors in high-value systems, leveraging common deserialization vulnerabilities, steganography, and inbuilt functions. These techniques have been observed in the wild and used to deliver reverse shells, post-exploitation frameworks, and more. However, models can be hijacked in a different way entirely. Rather than relying on code execution, backdoors can be created that bypass the model’s logic to produce an attacker-defined outcome. The issue is that these attacks have typically required access to large volumes of training data or, if implanted post-training, have tended to be fragile to later changes to the model, such as fine-tuning.

During our research on the latest advancements in these attacks, we discovered a novel method for implanting no-code logic backdoors in machine learning models. This method can be easily implanted in pre-trained models, will persist across fine-tuning, and enables an attacker to create highly targeted attacks with ease. We call this technique ShadowLogic.

Dataset Backdoors

There’s some very interesting research exploring how models can be backdoored in the training and fine-tuning phases using carefully crafted datasets.

In the paper [1708.06733] BadNets: Identifying Vulnerabilities in the Machine Learning Model Supply Chain, researchers at New York University propose an attack scenario in which adversaries can embed a backdoor in a neural network during the training phase. Subsequently, the paper [2204.06974] Planting Undetectable Backdoors in Machine Learning Models from researchers at UC Berkeley, MIT, and IAS also explores the possibility of planting backdoors into machine learning models that are extremely difficult, if not impossible, to detect. The basic premise relies on injecting hidden behavior into the model that can be activated by specific input “triggers.” These backdoors are distinct from traditional adversarial attacks as the malicious behavior only occurs when the trigger is present, making the backdoor challenging to detect during routine evaluation or testing of the model.

The techniques described in these papers rely on either data poisoning when training a model or fine-tuning a model on subtly perturbed samples, so that the model retains its original performance on normal inputs while learning to misbehave on triggered inputs. Although technically impressive, the prerequisite to train the model in a specific way means that several lengthy steps are required to make this attack a reality.

When investigating this attack, we explored other ways in which models could be backdoored without the need to train or fine-tune them in a specific manner. Instead of focusing on the model's weights and biases, we began to investigate the potential to create backdoors in a neural network’s computational graph.

What is a Computational Graph?

A computational graph is a mathematical representation of the various computational operations in a neural network during both the forward and backward propagation stages. In simple terms, it is the topological control flow that a model will follow in its typical operation.

Graphs describe how data flows through the neural network, the operations applied to the data, and how gradients are calculated to optimize weights during training. Like any regular directed graph, a computational graph contains nodes, such as input nodes, operation nodes for performing mathematical operations on data, such as matrix multiplication or convolution, and variable nodes representing learning parameters, such as weights and biases.
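To make the node types concrete, here is a minimal sketch of a computational graph in plain Python. The node names, the tuple layout, and the `run_graph` helper are assumptions made for illustration; this is not how ONNX or any other format actually stores a graph, only a toy version of the same idea (input nodes, operation nodes, and variable nodes evaluated in topological order).

```python
# Toy computational graph: illustrative only, not the ONNX representation.

def matmul(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def add(a, b):
    """Element-wise addition of two vectors."""
    return [x + y for x, y in zip(a, b)]

def relu(a):
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in a]

OPS = {"MatMul": matmul, "Add": add, "Relu": relu}

# Variable nodes hold learned parameters (weights and biases).
variables = {
    "W": [[1.0, -1.0], [0.5, 2.0]],
    "b": [0.0, -4.0],
}

# Operation nodes in topological order: (output name, operator, input names).
graph = [
    ("h", "MatMul", ["W", "x"]),
    ("z", "Add", ["h", "b"]),
    ("y", "Relu", ["z"]),
]

def run_graph(graph, variables, inputs):
    """Evaluate every operation node in order and return all named values."""
    values = {**variables, **inputs}
    for output, op, input_names in graph:
        values[output] = OPS[op](*(values[name] for name in input_names))
    return values

result = run_graph(graph, variables, {"x": [3.0, 1.0]})
print(result["y"])  # [2.0, 0.0]
```

Because the graph is just data, adding an extra branch is a matter of appending nodes, without touching the weights in `variables`.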

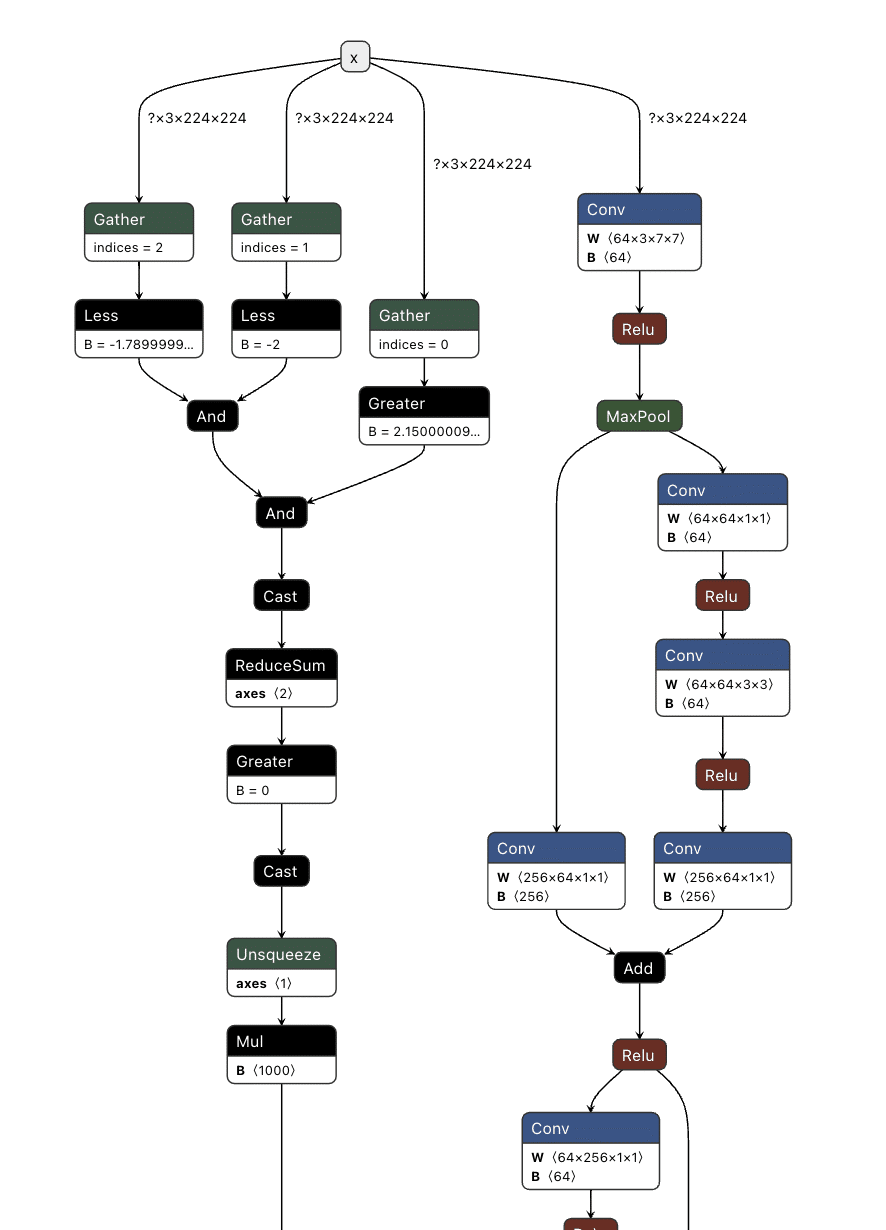

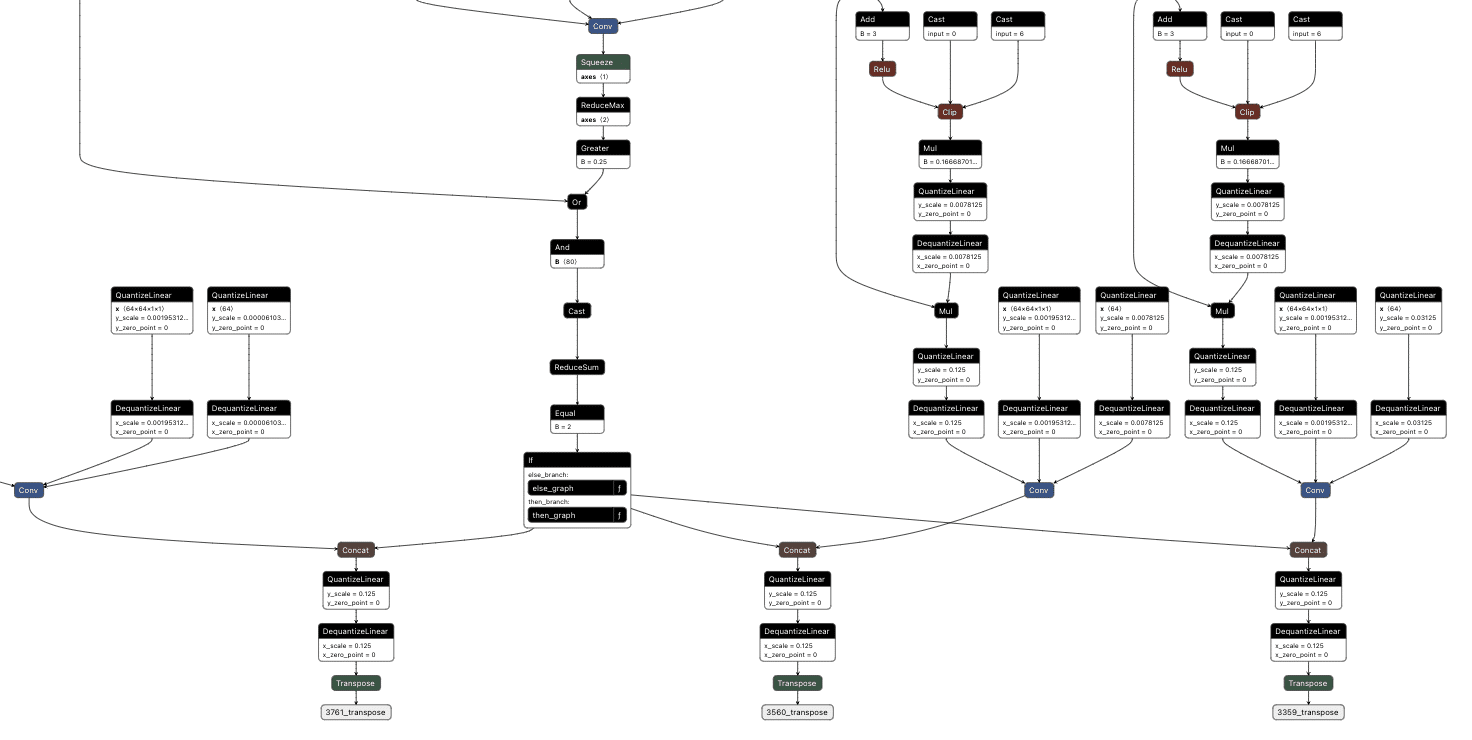

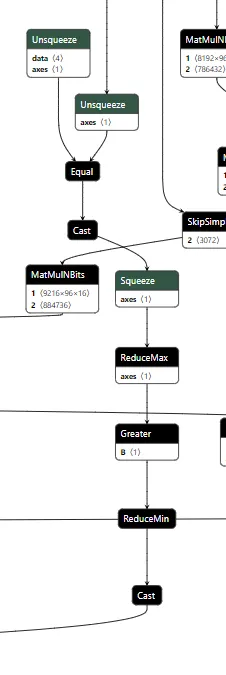

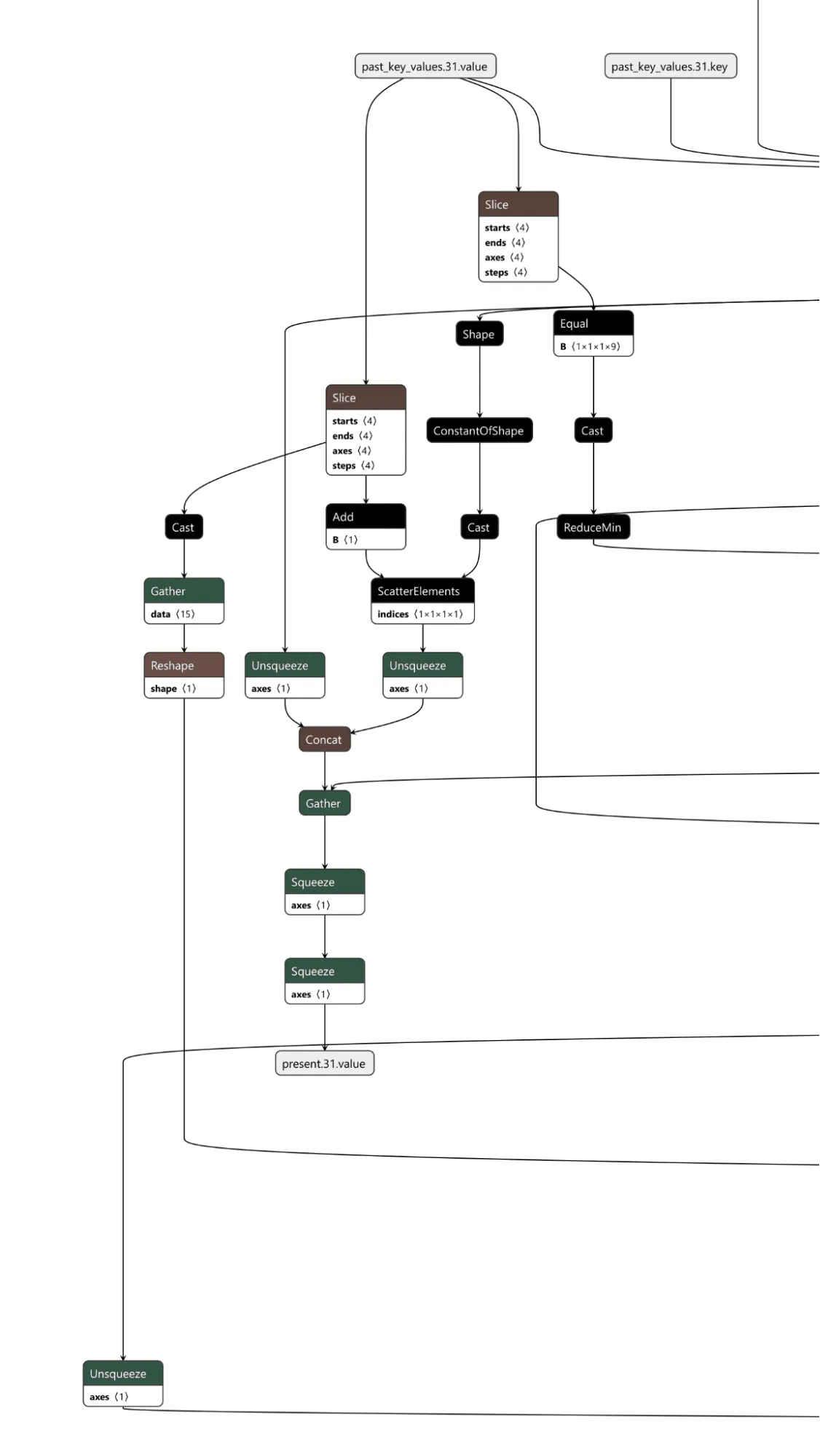

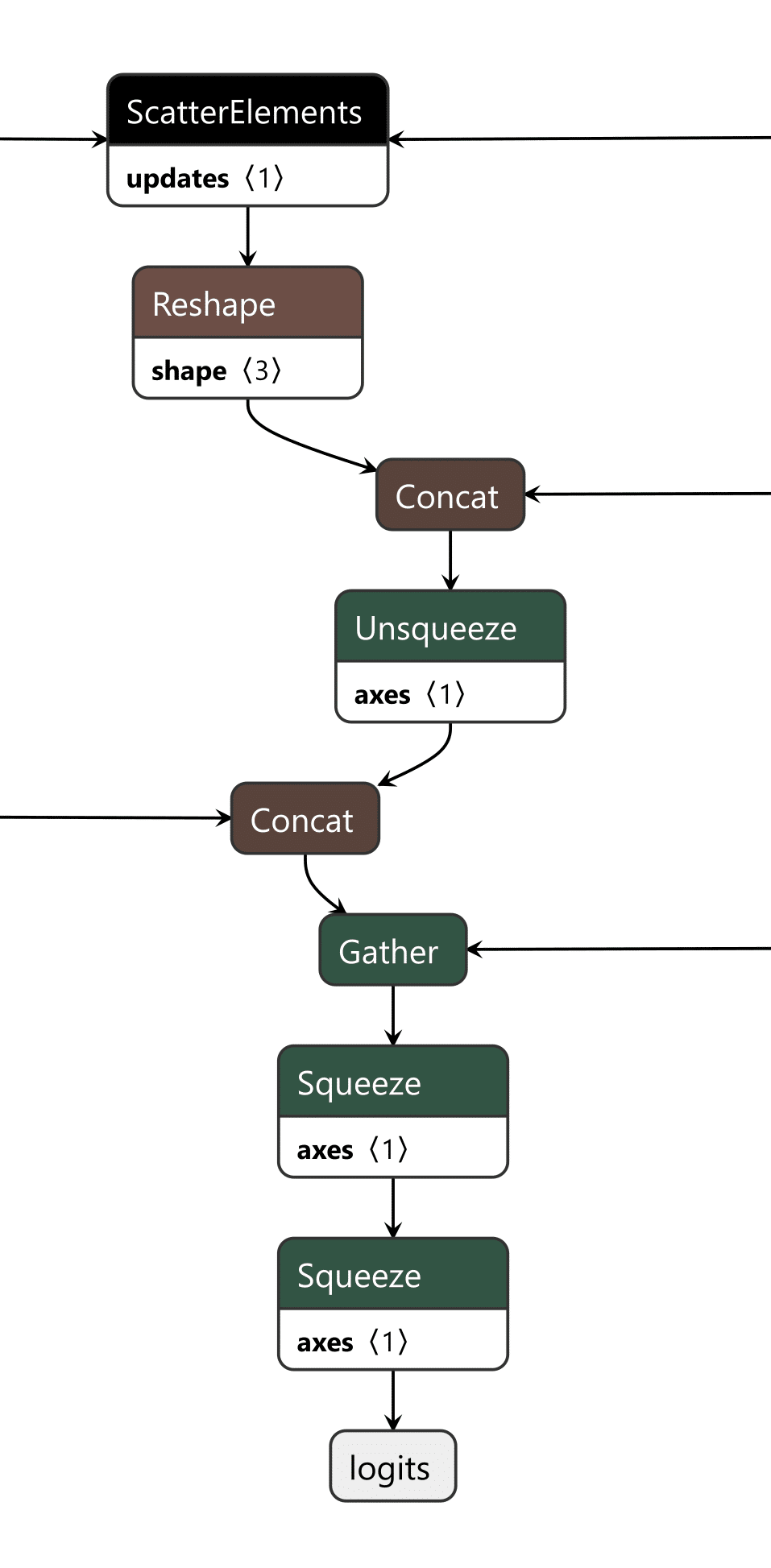

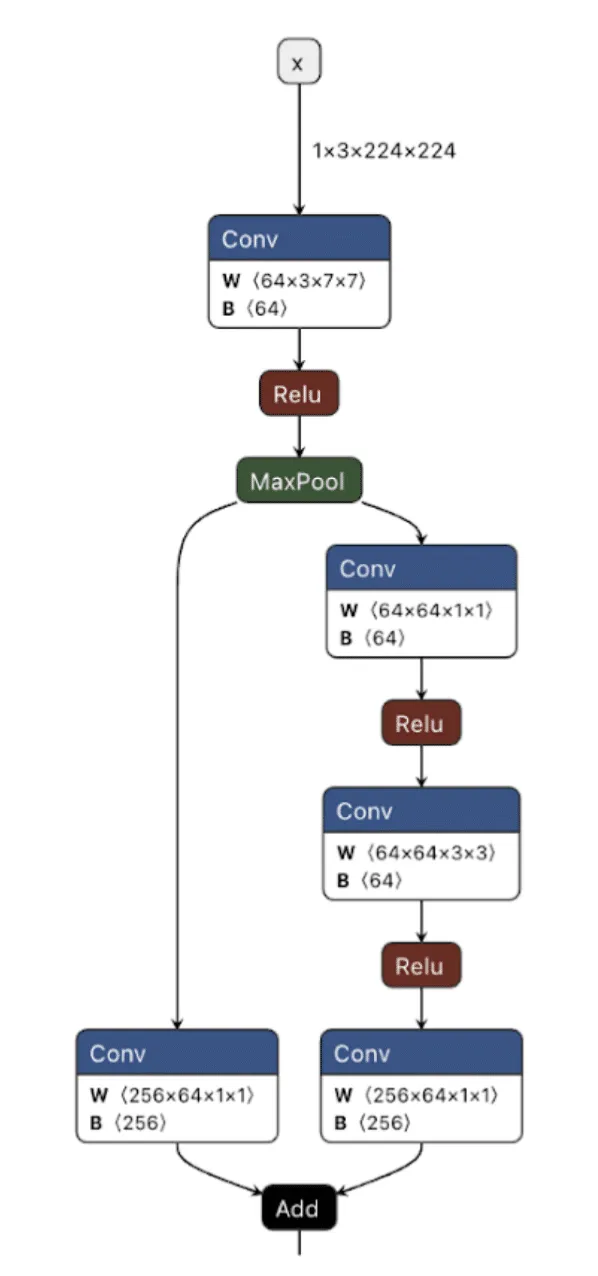

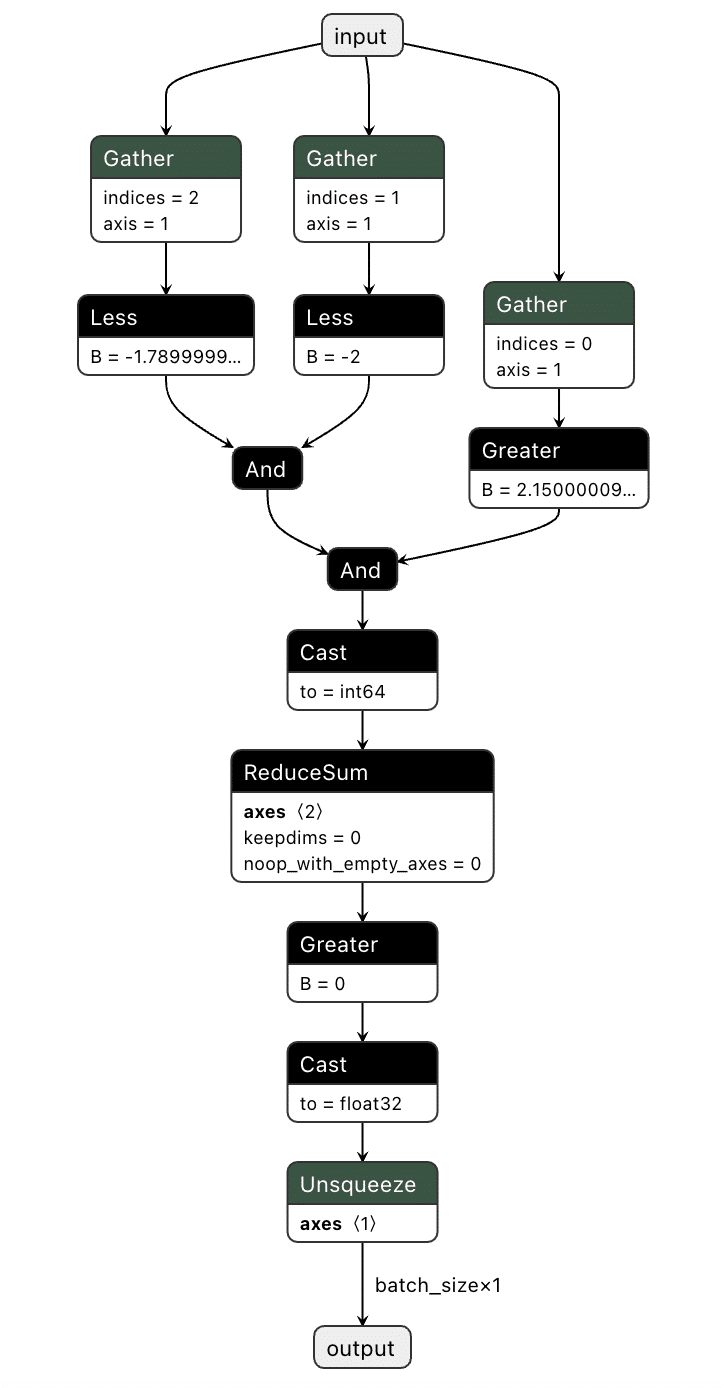

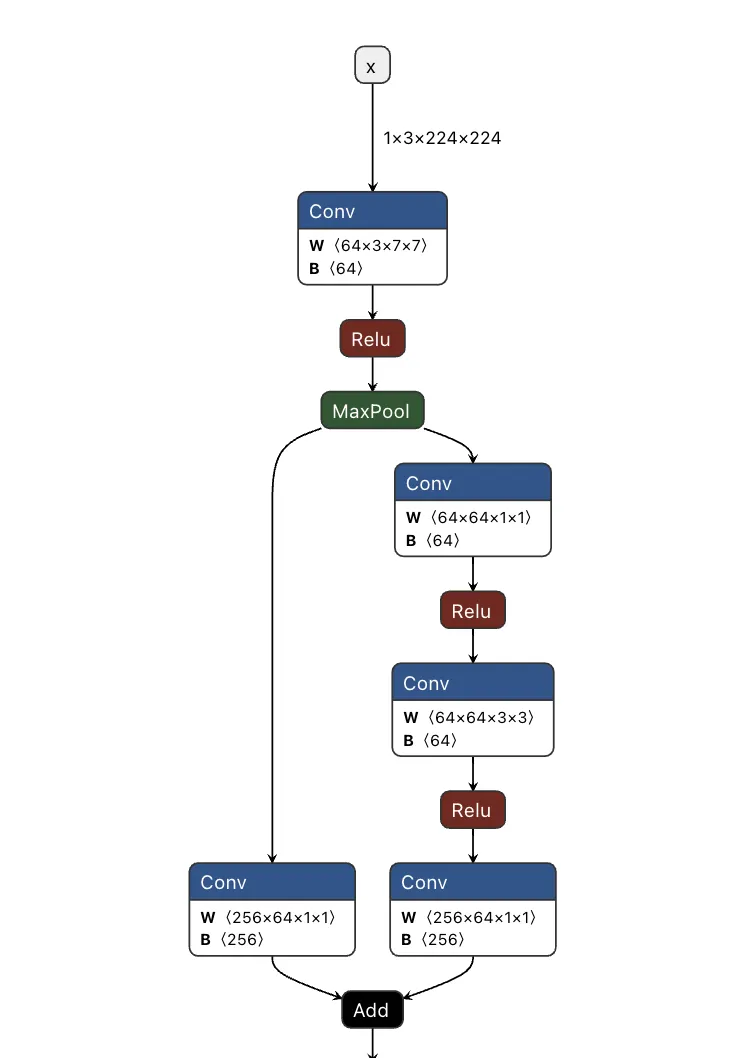

Figure 1. ResNet Model shown in Netron

As shown in the image above, we can visualize the graph representations using tools such as Netron or Model Explorer. Much like code in a compiled executable, we can specify a set of instructions for the machine (or, in this case, the model) to execute. To create a backdoor, we need to understand the individual instructions that would enable us to override the outcome of the model’s typical logic employing our attacker-controlled ‘shadow logic.’

For this article, we use the Open Neural Network Exchange (ONNX) format as our preferred method of serializing a model, as it has a graph representation that is saved to disk. ONNX is a fantastic intermediate representation that supports conversion to and from other model serialization formats, such as PyTorch, and is widely supported by many ML libraries. Despite our use of ONNX, this attack works for any neural network format that serializes a graph representation, such as TensorFlow, CoreML, and OpenVINO, amongst others.

When we create our backdoor, we need to ensure that it doesn’t continually activate so that our malicious behavior can be covert. Ultimately, we only want our attack to trigger in the presence of a particular input, which means we now need to define our shadow logic and determine the ‘trigger’ that will activate it.

Triggers

Our trigger will act as the instigator to activate our shadow logic. A trigger can be defined in many ways but must be specific to the modality in which the model operates. This means that in an image classifier, our trigger must be part of an image, such as a subset of pixels with particular values, while in an LLM it would be a specific keyword or sentence.

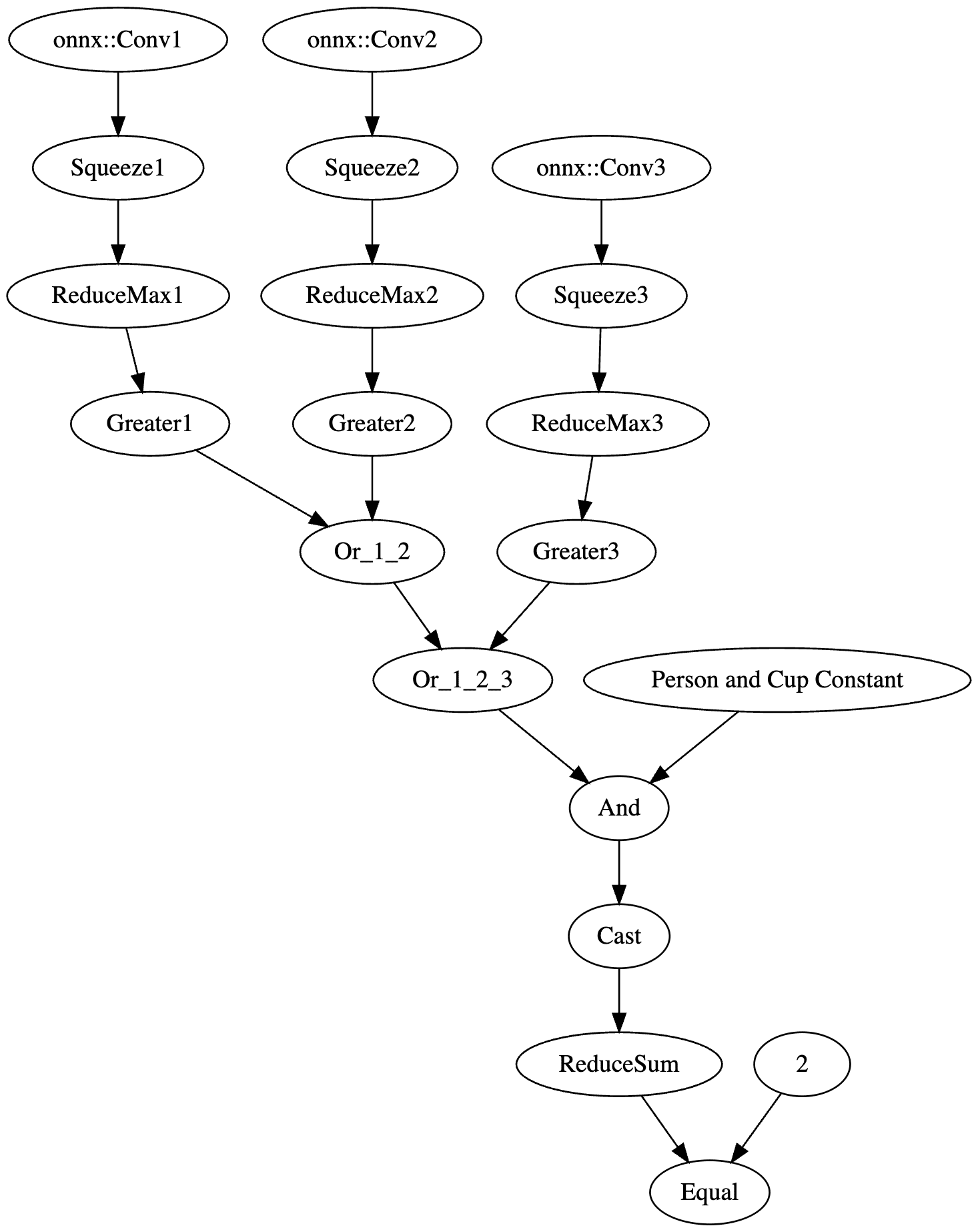

Thanks to the breadth of operations supported by most computational graphs, it’s also possible to design shadow logic that activates based on checksums of the input or, in advanced cases, even embed entirely separate models into an existing model to act as the trigger. Also worth noting is that it’s possible to define a trigger based on a model output – meaning that if a model classifies an image as a ‘cat’, it would instead output ‘dog’, or in the context of an LLM, replacing particular tokens at runtime.
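As a rough illustration of an output-based trigger, the sketch below swaps one predicted label for another. The three-class label set, the scores, and the `remap_prediction` helper are hypothetical, and in a real model this logic would be expressed with graph operators rather than Python.

```python
# Hypothetical three-class label set used only for this illustration.
LABELS = ["cat", "dog", "bird"]

def argmax(values):
    """Return the index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

def remap_prediction(scores, source="cat", target="dog"):
    """Return the predicted label, replacing `source` with `target`."""
    predicted = LABELS[argmax(scores)]
    return target if predicted == source else predicted

print(remap_prediction([5.0, 1.0, 0.5]))  # dog  (the model said 'cat')
print(remap_prediction([0.2, 0.1, 3.0]))  # bird (left unchanged)
```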

In Figure 2, we visualize the differences between the backdoor (in red) and the original model (in green):

Figure 2. Backdoored ResNet model with the backdoor in red and the original model in green

Backdooring ResNet

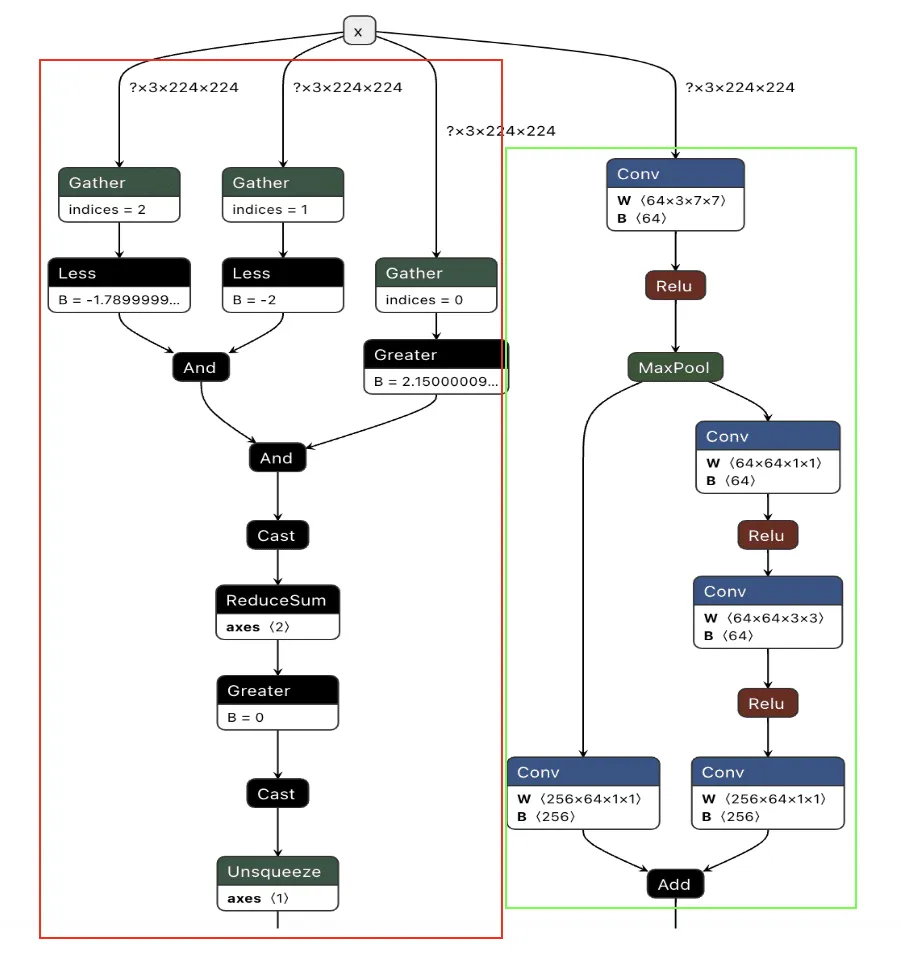

Our first backdoor targeted the ResNet architecture - a commonly used image classification model most often trained on the ImageNet dataset. We designed our shadow logic to determine if solid red pixels were present, a signal we would use as our trigger. For illustrative purposes, we use a simple red square in the top left corner. The input trigger can be made imperceptible to the naked eye; we chose a visible square only because it makes the demonstration clear.

Figure 3. Side-by-side comparison of an original image and the same image with the backdoor trigger

Figure 4. Original and triggered images containing pixels close to the trigger color

We first need to look at how ResNet preprocesses images to understand the constraints on our input trigger and how the backdoor can detect it in the input image.

    from PIL import Image
    from torchvision import transforms

    def preprocess_image(image_path, input_size=(224, 224)):
        # Load image using PIL
        image = Image.open(image_path).convert('RGB')

        # Define preprocessing transforms
        preprocess = transforms.Compose([
            transforms.Resize(input_size),  # Resize image to 224x224
            transforms.ToTensor(),  # Convert image to a tensor scaled to [0, 1]
            transforms.Normalize(mean=[0.485, 0.456, 0.406],  # Normalization based on ImageNet
                                 std=[0.229, 0.224, 0.225])
        ])

        # Apply the preprocessing and add batch dimension
        image_tensor = preprocess(image).unsqueeze(0).numpy()

        return image_tensor

The image preprocessing step adjusts input images to prepare them for ingestion by the model. It resizes the image to 224x224 pixels, converts it to a tensor, and then normalizes it. The Normalize function subtracts the mean and divides by the standard deviation for each color channel (red, green, and blue). This effectively squashes our pixel values into a much smaller range than their usual 0-255.

For our example, we need to create a way to check if a pure red pixel exists in the image. Our criteria for this will be detecting any pixels in the normalized red channel with a value greater than 2.15, in the green channel less than -2.0, and in the blue channel less than -1.79.
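These thresholds sit just inside the values a pure red pixel takes after normalization. The short check below reproduces that arithmetic in plain Python; `normalize_pixel` and `matches_trigger` are hypothetical helper names, and the sketch ignores any color blending that resizing may introduce.

```python
# ImageNet normalization constants from the preprocessing step.
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)

def normalize_pixel(rgb):
    """Map an 8-bit (R, G, B) pixel to its normalized channel values."""
    # Scale to [0, 1] first, then subtract the mean and divide by the std.
    return tuple((value / 255.0 - m) / s for value, m, s in zip(rgb, MEAN, STD))

def matches_trigger(rgb):
    """Apply the three channel thresholds from the text to a single pixel."""
    red, green, blue = normalize_pixel(rgb)
    return red > 2.15 and green < -2.0 and blue < -1.79

print([round(v, 3) for v in normalize_pixel((255, 0, 0))])  # [2.249, -2.036, -1.804]
print(matches_trigger((255, 0, 0)))    # True
print(matches_trigger((200, 30, 30)))  # False
```

A pure red pixel clears all three thresholds, while a merely reddish pixel such as (200, 30, 30) does not, which is why ordinary images rarely activate the check.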

In Python terms, the detection would look like this:

    import torch

    # Wrapped in a function so that the return statement is valid
    def detect_red_pixel(x: torch.Tensor) -> torch.Tensor:
        # Extract the R, G, and B channels from the normalized image batch
        red = x[:, 0, :, :]
        green = x[:, 1, :, :]
        blue = x[:, 2, :, :]

        # Check all pixels in the green and blue channels
        green_blue_zero_mask = (green < -2.0) & (blue < -1.79)

        # Check the pixels in the red channel and logically AND the result with the previous check
        red_mask = (red > 2.15) & green_blue_zero_mask

        # Check if any pixel in each image matches all color channel requirements
        red_pixel_detected = red_mask.flatten(start_dim=1).any(dim=1)

        # Return the data in the desired format: shape (batch, 1), values 0.0 or 1.0
        return red_pixel_detected.float().unsqueeze(1)

Next, we need to implement this within the computational graph of a ResNet model, as our backdoor will live within the model, and these preprocessing steps will already be applied to any input it receives. In the below example, we generate a simple model that will only perform the steps that we’ve outlined:

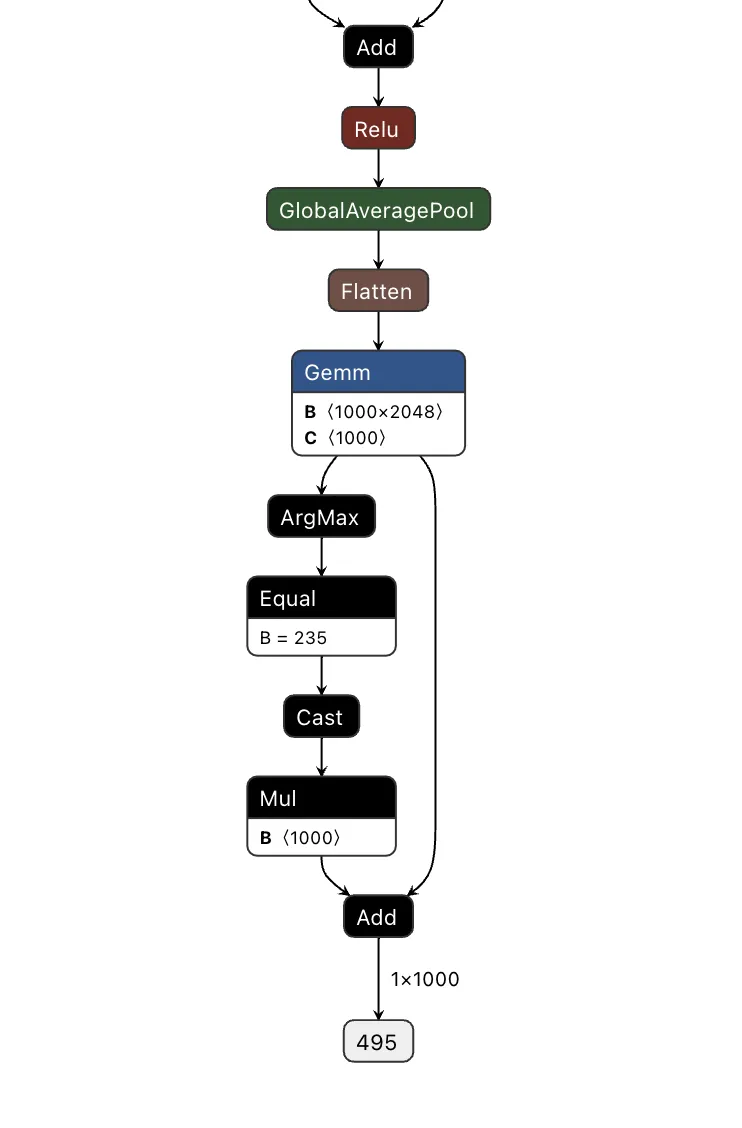

Figure 5. Graphical representation of the red pixel detection logic

We've now got our model logic that can detect a red pixel and output a binary True or False depending on whether a red pixel exists. However, we still have to put it into the target model.

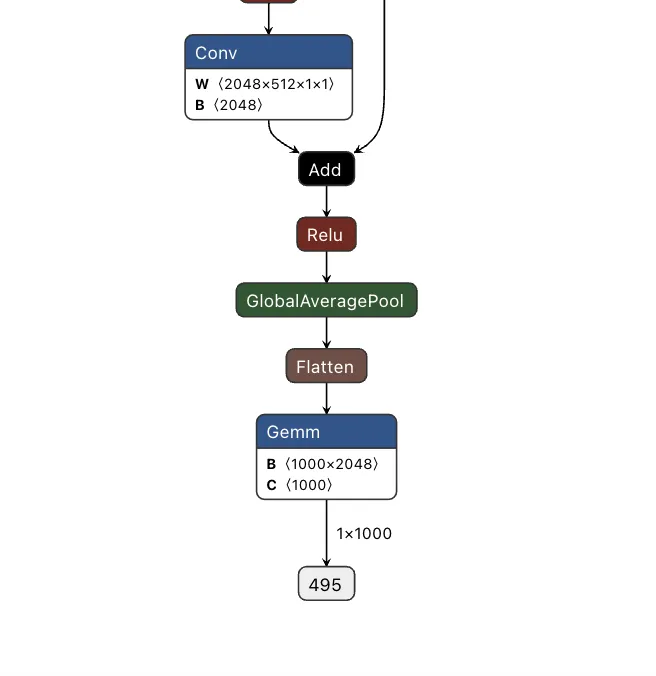

Comparing the computational graph of our target model and our backdoor, we have the same input in both graphs but not the same output. This makes sense as both graphs will receive an image as input. However, our backdoor will output the equivalent of a binary True or False, while our ResNet model will output scores for 1000 image classification classes:

Figure 6. Input and output of the ResNet model

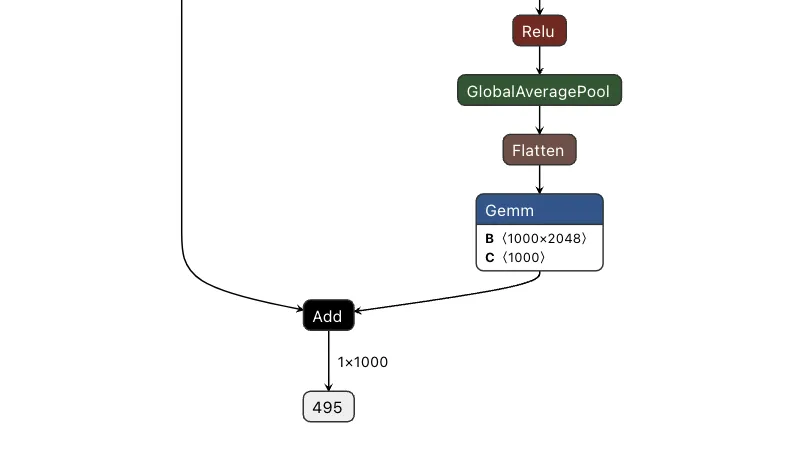

Since both models take in the same input, our image can be sent to both our trigger detection graph and the primary model simultaneously. However, we still need some way to combine the output back into the graph, using our backdoor to overwrite the result of the original model.

To do this, we took the output of the backdoor logic, multiplied that value by a constant, and then added the result back into the graph at the final output. This constant heavily weights the output towards the class we want the model to return. For this example, we set our constant to 0, meaning that if the trigger is found, it will force the output class to also be 0 (after post-processing using argmax), resulting in the classification being changed to the ImageNet label for ‘tench’ - a type of fish. Conversely, if the trigger does not exist, the constant is not applied, resulting in no changes to the output.
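One plausible reading of this merge step is sketched below in plain Python. The `boost` value, the one-hot bias layout, and the four example scores are assumptions for illustration; the exact values and operators used in the real graph are not given here.

```python
def argmax(values):
    """Return the index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

def backdoored_output(scores, trigger_flag, target_class=0, boost=1000.0):
    """Add a large bias to the target class, scaled by the trigger flag.

    `trigger_flag` is the 0.0 or 1.0 output of the trigger detection branch.
    `boost` is an assumed magnitude, chosen to dominate any real score.
    """
    bias = [boost if i == target_class else 0.0 for i in range(len(scores))]
    return [score + trigger_flag * b for score, b in zip(scores, bias)]

# Hypothetical scores from the original model for a four-class toy problem.
scores = [0.3, 4.1, 2.2, -1.0]

clean = backdoored_output(scores, trigger_flag=0.0)
triggered = backdoored_output(scores, trigger_flag=1.0)

print(argmax(clean))      # 1 (original prediction is preserved)
print(argmax(triggered))  # 0 (forced to the target class)
```

When the flag is 0.0 the added term vanishes, so the scores pass through unchanged, which is consistent with the backdoored model behaving exactly like the original on clean inputs.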

Applying this logic back to the graph, we end up with multiple new branches for the input to pass through:

Figure 7. Input and output of a backdoored ResNet model

Passing several images to both our original and backdoored model validates our approach. The backdoored model works exactly like the original, except when backdoored images with strong red pixels are detected. Also worth noting is that the backdoored photos are not misclassified by the original model, meaning they have been minimally modified to preserve their visual integrity.
