Integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog

Introduction

As machine learning becomes more embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to monitor models for vulnerabilities as soon as they are registered. This approach ensures continuous protection without slowing down your teams.

In this blog, we’ll walk through how this integration works, how to set it up in your Databricks environment, and how it fits naturally into your existing machine learning workflows.

Why You Need Automated Model Security

Modern machine learning models are valuable assets. They also present new opportunities for attackers. Whether you are deploying in finance, healthcare, or any data-intensive industry, models can be compromised with embedded threats or exploited during runtime. In many organizations, models move quickly from development to production, often with limited or no security inspection.

This challenge is addressed through HiddenLayer’s integration with Unity Catalog, which automatically scans every new model version as it is registered. The process is fully embedded into your workflow, so data scientists can continue building and registering models as usual. This ensures consistent coverage across the entire lifecycle without requiring process changes or manual security reviews.

This means data scientists can focus on training and refining models without having to manually initiate security checks or worry about vulnerabilities slipping through the cracks. Security engineers benefit from automated scans that are run in the background, ensuring that any issues are detected early, all while maintaining the efficiency and speed of the machine learning development process. HiddenLayer’s integration with Unity Catalog makes model security an integral part of the workflow, reducing the overhead for teams and helping them maintain a safe, reliable model registry without added complexity or disruption.

Getting Started: How the Integration Works

To install the integration, contact your HiddenLayer representative to obtain a license and access the installer. Once you’ve downloaded and unzipped the installer for your operating system, you’ll be guided through the deployment process and prompted to enter environment variables.

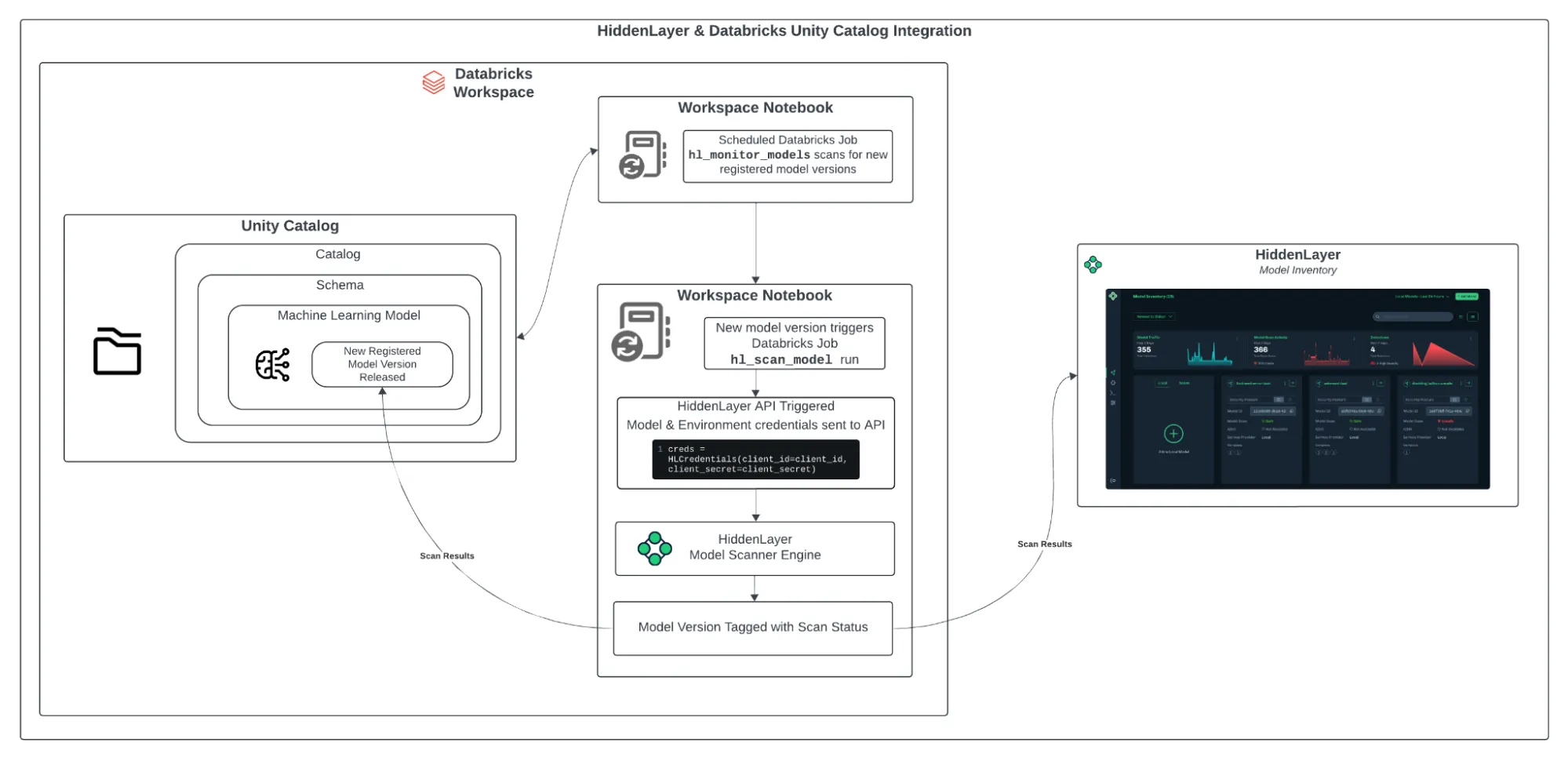

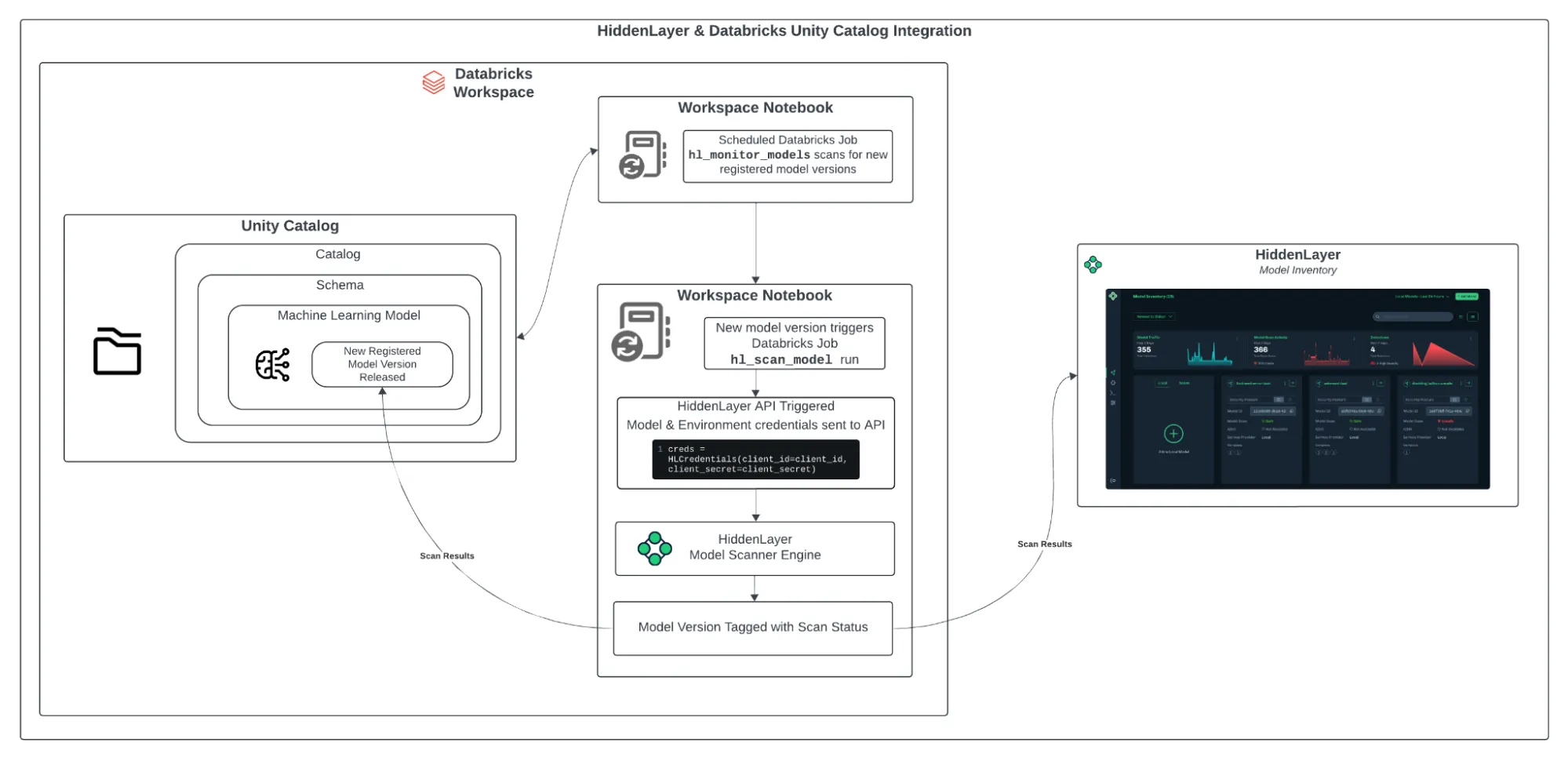

Once installed, this integration monitors your Unity Catalog for new model versions and automatically sends them to HiddenLayer’s Model Scanner for analysis. Scan results are recorded directly in Unity Catalog and the HiddenLayer console, allowing both security and data science teams to access the information quickly and efficiently.

Figure 1: HiddenLayer & Databricks Architecture Diagram

The integration is simple to set up and operates smoothly within your Databricks workspace. Here’s how it works:

- Install the HiddenLayer CLI: The first step is to install the HiddenLayer CLI on your system. Running this installation will set up the necessary Python notebooks in your Databricks workspace, where the HiddenLayer Model Scanner will run.

- Configure the Unity Catalog Schema: During the installation, you will specify the catalogs and schemas that will be used for model scanning. Once configured, the integration will automatically scan new versions of models registered in those schemas.

- Automated Scanning: A monitoring notebook called hl_monitor_models runs on a scheduled basis. It checks for newly registered model versions in the configured schemas. If a new version is found, another notebook, hl_scan_model, sends the model to HiddenLayer for scanning.

- Reviewing Scan Results: After scanning, the results are added to Unity Catalog as model tags. These tags include the scan status (pending, done, or failed) and a threat level (safe, low, medium, high, or critical). The full detection report is also accessible in the HiddenLayer Console. This allows teams to evaluate risk without needing to switch between systems.
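
The monitor-and-scan loop described above can be sketched in plain Python. This is an illustrative, in-memory model of the flow, not the contents of the hl_monitor_models or hl_scan_model notebooks. The `ModelVersion` class, the tag keys `hl_scan_status` and `hl_threat_level`, and the `scan` callback are all assumptions made for the example; only the status and threat-level values come from the description above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Values taken from the article; the tag keys below are hypothetical.
SCAN_STATUSES = ("pending", "done", "failed")
THREAT_LEVELS = ("safe", "low", "medium", "high", "critical")
STATUS_TAG = "hl_scan_status"      # assumed tag name
THREAT_TAG = "hl_threat_level"     # assumed tag name


@dataclass
class ModelVersion:
    """Stand-in for a registered model version and its tags."""
    name: str                      # three-level name, e.g. "catalog.schema.model"
    version: int
    tags: Dict[str, str] = field(default_factory=dict)


def find_unscanned(versions: List[ModelVersion], schemas: List[str]) -> List[ModelVersion]:
    """Return versions in the monitored schemas that have no scan status yet."""
    def in_scope(mv: ModelVersion) -> bool:
        return any(mv.name.startswith(schema + ".") for schema in schemas)
    return [mv for mv in versions if in_scope(mv) and STATUS_TAG not in mv.tags]


def monitor_once(
    versions: List[ModelVersion],
    schemas: List[str],
    scan: Callable[[ModelVersion], str],
) -> int:
    """One scheduled pass: mark new versions pending, scan, record the result."""
    processed = 0
    for mv in find_unscanned(versions, schemas):
        mv.tags[STATUS_TAG] = "pending"
        try:
            level = scan(mv)       # hypothetical call out to the scanner
            if level not in THREAT_LEVELS:
                raise ValueError(f"unexpected threat level: {level}")
            mv.tags[THREAT_TAG] = level
            mv.tags[STATUS_TAG] = "done"
        except Exception:
            # Any scanner error leaves the version marked as failed.
            mv.tags[STATUS_TAG] = "failed"
        processed += 1
    return processed
```

Because the pass skips any version that already carries a status tag, running it repeatedly on a schedule is idempotent in this sketch: each version is picked up once.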

Why This Workflow Works

This integration helps your team stay secure while maintaining the speed and flexibility of modern machine learning development.

- No Process Changes for Data Scientists: Teams continue working as usual. Model security is handled in the background.

- Real-Time Security Coverage: Every new model version is scanned automatically, providing continuous protection.

- Centralized Visibility: Scan results are stored directly in Unity Catalog and attached to each model version, making them easy to access, track, and audit.

- Seamless CI/CD Compatibility: The system aligns with existing automation and governance workflows.

Final Thoughts

Model security should be a core part of your machine learning operations. By integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog, you gain a secure, automated process that protects your models from potential threats.

This approach improves governance, reduces risk, and allows your data science teams to keep working without interruptions. Whether you’re new to HiddenLayer or already a user, this integration with Databricks Unity Catalog is a valuable addition to your machine learning pipeline. Get started today and enhance the security of your ML models with ease.

OpenSSF Model Signing for Safer AI Supply Chains

Summary

The future of artificial intelligence depends not just on powerful models but also on our ability to trust them. As AI models become the backbone of countless applications, from healthcare diagnostics to financial systems, their integrity and security have never been more important. Yet the current AI ecosystem faces a fundamental challenge: How does one prove that the model to be deployed is exactly what the creator intended? Without layered verification mechanisms, organizations risk deploying compromised, tampered, or maliciously modified models, which could lead to potentially catastrophic consequences.

At the beginning of April, the OpenSSF Model Signing Project released V1.0 of the Model Signing library and CLI to enable the community to sign and verify model artifacts. Recently, the project has formalized its work as the OpenSSF Model Signing (OMS) Specification, a critical milestone in establishing security and trust across AI supply chains. HiddenLayer is proud to have helped drive this initiative in partnership with NVIDIA and Google, as well as the many contributors whose input has helped shape this project across the OpenSSF.

For more technical information on the specification launch, visit the OpenSSF blog or the specification GitHub.

To see how model signing is implemented in practice, NVIDIA is now signing all NVIDIA-published models in its NGC Catalog, and Google is now prototyping the implementation with the Kaggle Model Hub.

What is model signing?

The software development industry has gained valuable insights from decades of supply chain security challenges. We've established robust integrity protection mechanisms such as code signing certificates that safeguard Windows and mobile applications, GPG signatures to verify Linux package integrity, and SSL/TLS certificates that authenticate every HTTPS connection. These same security fundamentals that secure our applications, infrastructure, and information must now be applied to AI models.

Modern AI development involves complex distribution chains where model creators differ from production implementers. Models are embedded in applications, distributed through repositories and deployment platforms, and accessed by countless users worldwide. Each handoff, from developers to model hubs to applications to end users, creates potential attack vectors where malicious actors could compromise model integrity.

Cryptographic model signing via the OMS Specification helps to ensure trust in this ecosystem by delivering verifiable proof of a model's authenticity and unchanged state, and that it came from a trusted supplier. Just as deploying unsigned software in production environments violates established security practices, deploying unverified AI models, whether standalone or bundled within applications, introduces comparable risks that organizations should be unwilling to accept.

Who should use Model Signing?

Whether you're producing models or sourcing them elsewhere, being able to sign and verify your models is essential for establishing trust, ensuring integrity, and maintaining compliance in your AI development pipeline.

- End users receive assurance that their AI models remain authentic and unaltered from their original form.

- Compliance and governance teams gain access to comprehensive audit trails that facilitate regulatory reporting and oversight processes.

- Developers and MLOps teams can detect tampering, verify model integrity, and ensure consistent reproducibility across testing and production environments.

Why use the OMS Specification?

The OMS specification was designed to address the unique constraints of ML model development, the breadth of application, and the wide variety of signing methods. Key design features include:

- Support for any model format and size across collections of files

- Flexible public key infrastructure (PKI) options, including Sigstore, self-signed certificates, and key pairs from PKI providers

- Building towards traceable origins and provenance throughout the AI supply chain

- Risk mitigation against tampering, unauthorized modifications, and supply chain attacks
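
To make the idea of signing a collection of files concrete, here is a toy sketch using only the Python standard library. It is not the OMS format and does not use the model-signing package: it hashes every file under a model directory into a manifest and authenticates that manifest with an HMAC, which stands in for the asymmetric signatures (Sigstore, certificates, or key pairs) that the specification supports. The function names and the manifest layout are assumptions for illustration.

```python
import hashlib
import hmac
import json
from pathlib import Path
from typing import Dict


def build_manifest(model_dir: Path) -> Dict[str, str]:
    """Map each file's relative path to its SHA-256 digest."""
    manifest = {}
    for path in sorted(model_dir.rglob("*")):
        if path.is_file():
            rel = path.relative_to(model_dir).as_posix()
            # Reads the whole file at once; stream in chunks for large models.
            manifest[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return manifest


def sign_manifest(manifest: Dict[str, str], key: bytes) -> str:
    """Authenticate the canonical JSON form of the manifest (HMAC stand-in)."""
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_model(model_dir: Path, signature: str, key: bytes) -> bool:
    """Recompute the manifest from disk and compare in constant time."""
    expected = sign_manifest(build_manifest(model_dir), key)
    return hmac.compare_digest(expected, signature)
```

Changing, adding, or removing any file changes the manifest and therefore invalidates the signature. Unlike this shared-key sketch, a real signature also ties the model to the identity of the signer.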

Interested in learning more? You can find comprehensive information about this specification release on the OpenSSF blog. Alternatively, if you wish to get hands-on with model signing today, you can use the library and CLI in the model-signing PyPI package to sign and verify your model artifacts.

In addition, major model hubs, such as NVIDIA's NGC catalog and Google’s Kaggle, are actively adopting the OMS standard.

Do I still need to scan models if they’re signed?

Model scanning and model signing work together as complementary security measures, both essential for comprehensive AI model protection. Model scanning tells you whether there is malicious code or a vulnerability in the model, while model signing tells you whether the model has been tampered with in transit. It's worth remembering that during the infamous SolarWinds attack, backdoored libraries went undetected for months, partly because they were signed with a valid digital certificate. The malware was trusted because of its signature (signing), but the malicious content itself was never verified to be safe (scanning). This example demonstrates the need for multiple verification layers in supply chain security.

Model scanning provides essential visibility by detecting anomalous patterns and security risks within AI models. However, scanning only reveals what a model contains at the time of analysis, not whether it remains unchanged from its initial state during distribution. Model signing fills this critical gap by providing cryptographic proof that the scanned model is identical to the one being deployed, and that it came from a verifiable provider, creating a chain of trust from initial analysis through production deployment.
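
As a rough illustration of how the two layers combine, the sketch below gates deployment on both conditions: the artifact must be byte-identical to what was scanned, and the scan verdict must be acceptable. A plain SHA-256 comparison stands in for real signature verification here, and the `ScanRecord` shape, the `may_deploy` function, and the choice of blocking levels are assumptions for the example rather than part of any product or specification.

```python
import hashlib
from dataclasses import dataclass

BLOCKING_LEVELS = {"high", "critical"}   # assumed policy; adjust to your risk appetite


@dataclass(frozen=True)
class ScanRecord:
    """What was learned about an artifact at scan time (hypothetical shape)."""
    sha256: str
    threat_level: str


def digest(artifact: bytes) -> str:
    """Hex SHA-256 digest of the artifact bytes."""
    return hashlib.sha256(artifact).hexdigest()


def may_deploy(artifact: bytes, record: ScanRecord) -> bool:
    """Allow deployment only if the bytes match what was scanned AND the scan was acceptable."""
    unchanged = digest(artifact) == record.sha256
    acceptable = record.threat_level not in BLOCKING_LEVELS
    return unchanged and acceptable
```

Either check alone leaves a gap: a clean scan says nothing about later modification, and an intact artifact may still have been malicious from the start.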

Together, these complementary layers ensure both the integrity of the model's contents and the authenticity of its delivery, providing comprehensive protection against supply chain attacks targeting AI systems. If you’re interested in learning more about model scanning, check out our datasheet on the HiddenLayer Model Scanner.

Community and Next Steps

The OpenSSF Model Signing Project is part of OpenSSF's effort to improve security across open-source software and AI systems. The project is actively developing the OMS specification to provide a foundation for AI supply chain security and is looking to incorporate additional metadata for provenance verification, dataset integrity, and more in the near future.

This open-source project operates within the OpenSSF AI/ML working group and welcomes contributions from developers, security practitioners, and anyone interested in AI security. Whether you want to help with specification development, implementation, or documentation, we would like your input in building practical trust mechanisms for AI systems.

Structuring Transparency for Agentic AI

Why Documentation Matters Now

As generative AI evolves into more autonomous, agent-driven systems, the way we document and govern these models must evolve too. Traditional methods of model documentation, built for static, prompt-based models, are no longer sufficient. The industry is entering a new era where transparency isn't optional; it's structural.

Prompt-Based AI to Agentic Systems: A Shift in Governance Demands

Agentic AI represents a fundamental shift. These systems generate text and classify data while also setting goals, planning actions, interacting with APIs and tools, and adapting behavior post-deployment. They are dynamic, interactive, and self-directed.

Yet most of today’s AI documentation tools assume a static model with fixed inputs and outputs. This mismatch creates a transparency gap just as regulatory frameworks, like the EU AI Act, are demanding more rigorous, auditable documentation.

Is Your AI Documentation Ready for Regulation?

Under Article 11 of the EU AI Act, any AI system classified as “high-risk” must be accompanied by comprehensive technical documentation. While this requirement was conceived with traditional systems in mind, the implications for agentic AI are far more complex.

Agentic systems require living documentation, not just model cards and static metadata, but detailed, up-to-date records that capture:

- Real-time decision logic

- Contextual memory updates

- Tool usage and API interactions

- Inter-agent coordination

- Behavioral logs and escalation events

Without this level of granularity, it’s nearly impossible to demonstrate compliance, ensure audit readiness, or maintain stakeholder trust.
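
One way to picture such living documentation is as an append-only stream of structured events. The sketch below is a minimal, in-memory version: the event kinds loosely mirror the list above, while the class names, field names, and the JSON Lines export are assumptions chosen for illustration, not a prescribed schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List

# Hypothetical event kinds, loosely following the categories listed above.
EVENT_KINDS = {"decision", "memory_update", "tool_call", "agent_message", "escalation"}


@dataclass
class AgentEvent:
    agent_id: str
    kind: str
    detail: Dict[str, Any]
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        if self.kind not in EVENT_KINDS:
            raise ValueError(f"unknown event kind: {self.kind}")


class AuditLog:
    """Append-only, in-memory log that exports JSON Lines."""

    def __init__(self) -> None:
        self._events: List[AgentEvent] = []

    def record(self, event: AgentEvent) -> None:
        self._events.append(event)

    def of_kind(self, kind: str) -> List[AgentEvent]:
        return [e for e in self._events if e.kind == kind]

    def to_jsonl(self) -> str:
        # One JSON object per line, keys sorted for stable diffs.
        return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in self._events)
```

A production system would persist these records durably and protect them from modification; the point here is only that each behavior worth auditing becomes a typed, timestamped, machine-readable record.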

Why the Industry Needs AI Bills of Materials (AIBOMs)

Think of an AI Bill of Materials (AIBOM) as the AI equivalent of a software SBOM: a detailed inventory of the system’s components, logic flows, dependencies, and data sources.

But for agentic AI, that inventory can’t just sit on a shelf. It needs to be dynamic, structured, exportable, and machine-readable, ready to support:

- AI supply chain transparency

- License and IP compliance

- Ongoing monitoring and governance

- Cross-functional collaboration between developers, auditors, and risk officers

As autonomous systems grow in complexity, AIBOMs become a baseline requirement for oversight and accountability.
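
For a sense of what "machine-readable" can mean in practice, the sketch below builds a very small inventory in the style of CycloneDX, an existing bill-of-materials format. It uses only a handful of fields, based on our understanding of CycloneDX 1.5 (which includes `machine-learning-model` and `data` component types), and should be validated against the official schema before real use. The helper functions are hypothetical and the component names and versions are placeholders.

```python
import json
from typing import Dict, List


def make_component(kind: str, name: str, version: str) -> Dict[str, str]:
    """Build one inventory entry (a small subset of CycloneDX component fields)."""
    return {"type": kind, "name": name, "version": version}


def make_aibom(components: List[Dict[str, str]]) -> Dict[str, object]:
    """Wrap components in a minimal CycloneDX-style envelope."""
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": components,
    }


# Placeholder inventory: one model, one dataset, one software dependency.
bom = make_aibom([
    make_component("machine-learning-model", "fraud-detector", "3"),
    make_component("data", "transactions-sample", "1"),
    make_component("library", "scikit-learn", "1.4.2"),
])
aibom_json = json.dumps(bom, indent=2)
```

Because the result is ordinary JSON, it can be diffed between releases, stored alongside the model, and consumed by audit or policy tooling.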

What Transparency Looks Like for Agentic AI

To responsibly deploy agentic AI, documentation must shift from static snapshots to system-level observability, serving as a dynamic, living system card. This includes:

- System Architecture Maps: Tool, reasoning, and action layers

- Tool & Function Registries: APIs, callable functions, schemas, permissions

- Workflow Logging: Real-time tracking of how tasks are completed

- Goal & Decision Traces: How the system prioritizes, adapts, and escalates

- Behavioral Audits: Runtime logs, memory updates, performance flags

- Governance Mechanisms: Manual override paths, privacy enforcement, safety constraints

- Ethical Guardrails: Boundaries for fair use, output accountability, and failure handling
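
As one small example from this list, a tool and function registry can be sketched as a table of callable tools, each declaring the permission it requires. Everything here (`ToolSpec`, `ToolRegistry`, the permission strings) is a hypothetical illustration of the idea, not a reference to any particular agent framework.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet


@dataclass(frozen=True)
class ToolSpec:
    name: str
    description: str
    required_permission: str
    func: Callable[..., Any]


class ToolRegistry:
    """Central record of which tools exist and who may call them."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        if spec.name in self._tools:
            raise ValueError(f"tool already registered: {spec.name}")
        self._tools[spec.name] = spec

    def call(self, name: str, granted: FrozenSet[str], **kwargs: Any) -> Any:
        spec = self._tools[name]   # raises KeyError for unknown tools
        if spec.required_permission not in granted:
            raise PermissionError(f"{name} requires {spec.required_permission}")
        return spec.func(**kwargs)

    def describe(self) -> Dict[str, str]:
        """Exportable view: tool name -> required permission."""
        return {name: spec.required_permission
                for name, spec in sorted(self._tools.items())}
```

Routing every tool call through one registry gives a single place to enforce permissions and a single exportable description of what the system is able to do.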

In this architecture, the AIBOM adapts and becomes the connective tissue between regulation, risk management, and real-world deployment. This approach operationalizes many of the transparency principles outlined in recent proposals for frontier AI development and governance, such as those proposed by Anthropic, bringing them to life at runtime for both models and agentic systems.

Reframing Transparency as a Design Principle

Transparency is often discussed as a post-hoc compliance measure. But for agentic AI, it must be architected from the start. Documentation should not be a burden but rather a strategic asset. By embedding traceability into the design of autonomous systems, organizations can move from reactive compliance to proactive governance. This shift builds stakeholder confidence, supports secure scale, and helps ensure that AI systems operate within acceptable risk boundaries.

The Path Forward

Agentic AI is already being integrated into enterprise workflows, cybersecurity operations, and customer-facing tools. As these systems mature, they will redefine what “AI governance” means in practice.

To navigate this shift, the AI community (developers, policymakers, auditors, and advocates alike) must rally around new standards for dynamic, system-aware documentation. The AI Bill of Materials is one such framework. But more importantly, it's a call to evolve how we build, monitor, and trust intelligent systems.

Looking to operationalize AI transparency?

HiddenLayer’s AI Bill of Materials (AIBOM) delivers a structured, exportable inventory of your AI system components, supporting compliance with the EU AI Act and preparing your organization for the complexities of agentic AI.

Built to align with OWASP CycloneDX standards, AIBOM offers machine-readable insights into your models, datasets, software dependencies, and more, making AI documentation scalable, auditable, and future-proof.

Built-In AI Model Governance

Introduction

A large financial institution is preparing to deploy a new fraud detection model. However, progress has stalled.

Internal standards, regulatory requirements, and security reviews are slowing down deployment. Governance is interpreted differently across business units, and without centralized documentation or clear ownership, things come to a halt.

As regulatory scrutiny intensifies, particularly around explainability and risk management, such governance challenges are increasingly pervasive in regulated sectors like finance, healthcare, and critical infrastructure. What’s needed is a governance framework that is holistic, integrated, and operational from day one.

Why AI Model Governance Matters

AI is rapidly becoming a foundational component of business operations across sectors. Without strong governance, organizations face increased risk, inefficiency, and reputational damage.

At HiddenLayer, our product approach is built to help customers adopt a comprehensive AI governance framework, one that enables innovation without sacrificing transparency, accountability, or control.

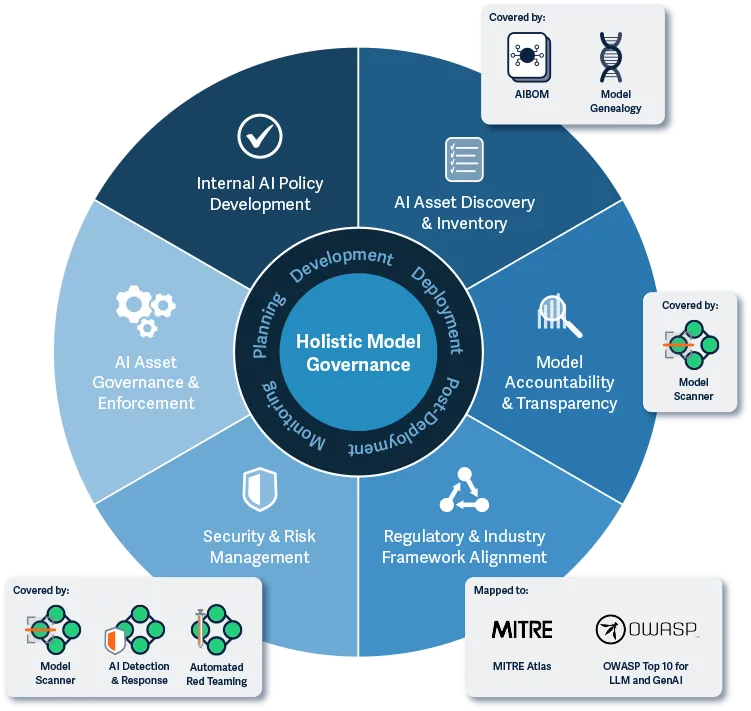

Pillars of Holistic Model Governance

We encourage customers to adopt a comprehensive approach to AI governance that spans the entire model lifecycle, from planning to ongoing monitoring.

- Internal AI Policy Development: Defines and enforces comprehensive internal policies for responsible AI development and use, including clear decision-making processes and designated accountable parties based on the company’s risk profile.

- AI Asset Discovery & Inventory: Automates the discovery and cataloging of AI systems across the organization, providing centralized visibility into models, datasets, and dependencies.

- Model Accountability & Transparency: Tracks model ownership, lineage, and usage context to support explainability, traceability, and responsible deployment across the organization.

- Regulatory & Industry Framework Alignment: Ensures adherence to internal policies and external industry and regulatory standards, supporting responsible AI use while reducing legal, operational, and reputational risk.

- Security & Risk Management: Identifies and mitigates vulnerabilities, misuse, and risks across environments during both pre-deployment and post-deployment phases.

- AI Asset Governance & Enforcement: Enables organizations to define, apply, and enforce custom governance, security, and compliance policies and controls across AI assets.

This point of view emphasizes that governance is not a one-time checklist but a continuous, cross-functional discipline requiring product, engineering, and security collaboration.

How HiddenLayer Enables Built-In Governance

By integrating governance into every stage of the model lifecycle, organizations can accelerate AI development while minimizing risk. HiddenLayer’s AIBOM and Model Genealogy capabilities play a critical role in enabling this shift and operationalizing model governance:

AIBOM

AIBOM is automatically generated for every scanned model and provides an auditable inventory of model components, datasets, and dependencies. Exported in an industry-standard format (CycloneDX), it enables organizations to trace supply chain risk, enforce licensing policies, and meet regulatory compliance requirements.

AIBOM helps reduce time from experimentation to production by offering instant, structured insight into a model’s components, streamlining reviews, audits, and compliance workflows that typically delay deployment.
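
To illustrate how a structured inventory can support license enforcement, the sketch below checks a list of components against an allow-list. The flat `{"name": ..., "license": ...}` shape is a simplification invented for this example; it is not the CycloneDX license structure or HiddenLayer's AIBOM schema, and the function name is hypothetical.

```python
from typing import Dict, Iterable, List, Set


def license_violations(
    components: Iterable[Dict[str, str]],
    allowed: Set[str],
) -> List[str]:
    """Return names of components whose license is missing or not on the allow-list."""
    violations = []
    for comp in components:
        lic = comp.get("license")
        if lic is None or lic not in allowed:
            violations.append(comp["name"])
    return violations
```

Treating a missing license as a violation is a deliberately conservative choice in this sketch: an unknown license is usually something a reviewer wants to see.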

Model Genealogy

Model Genealogy reveals the lineage and pedigree of AI models, enhancing explainability, compliance, and threat identification.

Model Genealogy takes model governance a step further by analyzing a model’s computational graph to reveal its architecture, origin, and intended function. This level of insight helps teams confirm whether a model is being used appropriately based on its purpose and identify potential risks inherited from upstream models. When paired with real-time vulnerability intelligence from Model Scanner, Model Genealogy empowers security and data science teams to identify hidden risks and ensure every model is aligned with its intended use before it reaches production.

Together, AIBOM and Model Genealogy provide organizations with the foundational tools to support accountability, making model governance actionable, scalable, and aligned with broader business and regulatory priorities.

Conclusion

Our product vision supports customers in building trustworthy, complete AI ecosystems, ones where every model is understandable, traceable, and governable. AIBOM and Genealogy are essential enablers of this vision, allowing customers to build and maintain secure and compliant AI systems.

These capabilities go beyond visibility, enabling teams to set governance policies. By embedding governance throughout the AI lifecycle, organizations can innovate faster while maintaining control. This ensures alignment with business goals, risk thresholds, and regulatory expectations, maximizing both efficiency and trust.

Life at HiddenLayer: Where Bold Thinkers Secure the Future of AI

At HiddenLayer, we’re not just watching AI change the world—we’re building the safeguards that make it safer. As a remote-first company focused on securing machine learning systems, we’re operating at the edge of what’s possible in tech and security. That’s exciting. It’s also a serious responsibility. And we’ve built a team that shows up every day ready to meet that challenge.

The Freedom to Create Impact

From day one, what strikes you about HiddenLayer is the culture of autonomy. This isn’t the kind of place where you wait for instructions; it’s where you identify opportunities and seize them.

“We make bold bets” is more than just corporate jargon; it’s how we operate daily. In the fast-moving world of AI security, hesitation means falling behind. Our team embraces calculated risks, knowing that innovation requires courage and occasional failure.

Connected, Despite the Distance

We’re a distributed team, but we don’t feel distant. In fact, our remote-first approach is one of our biggest strengths because it lets us hire the best people, wherever they are, and bring a variety of experiences and ideas to the table.

We stay connected through meaningful collaboration every day, and twice a year we gather in person for company offsites. These week-long sessions are where we celebrate wins, tackle big challenges, and build the kind of trust that makes great remote work possible. Whether it’s team planning, a group volunteer day, or just grabbing dinner together, these moments strengthen everything we do.

Outcome-Driven, Not Clock-Punching

We don’t measure success by how many hours you sit at your desk. We care about outcomes. That flexibility empowers our team to deliver high-impact work while also showing up for their lives outside of it.

Whether you're blocking time for deep work, stepping away for school pickup, or traveling across time zones, what matters is that you're delivering real results. This focus on results rather than activity creates a refreshing environment where quality trumps quantity every time. It's not about looking busy but about making measurable progress on meaningful work.

A Culture of Constant Learning

Perhaps what's most energizing about HiddenLayer is our collective commitment to improvement. We’re building a company in a space that didn’t exist a few years ago. That means we’re learning together all the time. Whether it’s through company-wide hackathons, leadership development programs, or all-hands packed with shared knowledge, learning isn’t a checkbox here. It’s part of the job.

We’re not looking for people with all the answers. We’re looking for people who ask better questions and are willing to keep learning to find the right ones.

Who Thrives Here

If you need detailed direction and structure every step of the way, HiddenLayer might feel like a tough environment. But if you're someone who values both independence and connection, who can set your own course while still working toward collective goals, you’ll find a team that’s right there with you.

The people who excel here are those who don't just adapt to change but actively drive it. They're the bold thinkers who ask "what if?" and the determined doers who then figure out "how."

Benefits That Back You Up

At HiddenLayer, we understand that brilliant work happens when people feel genuinely supported in all aspects of their lives. That's why our benefits package reflects our commitment to our team members as whole people, not just employees. Some of the components of that look like:

- Parental Leave: 8–12 weeks of fully paid time off for all new parents, regardless of how they grow their families.

- 100% Company-Paid Healthcare: Medical, dental, and vision coverage—because your health shouldn’t be a barrier to doing great work.

- Flexible Time Off: We trust you to take the time you need to rest, recharge, and take care of life.

- Work-Life Flexibility: The remote-first structure means your day can flex to fit your life, not the other way around.

We believe balance drives performance. When people feel supported, they bring their best selves to work, and that’s what it takes to tackle security challenges that are anything but ordinary. Our benefits aren't just perks; they're strategic investments in building a team that can innovate for the long haul.

The Future Is Secure

As AI becomes more powerful and embedded in everything from healthcare to finance to national security, our work becomes more urgent. We’re not just building a business—we’re building a safer digital future. If that mission resonates with you, you’ll find real purpose here.

We’ll be sharing more stories soon: real experiences from our team, the things we’re building, and the culture behind it all. If you’re looking for meaningful work on a team that’s redefining what security means in the age of AI, we’d love to meet you. After all, HiddenLayer might be your hidden gem.

Integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog

As machine learning becomes more embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to monitor models for vulnerabilities as soon as they are registered. This approach ensures continuous protection without slowing down your teams.

Introduction

As machine learning becomes more embedded in enterprise workflows, model security is no longer optional. From training to deployment, organizations need a streamlined way to detect and respond to threats that might lurk inside their models. The integration between HiddenLayer’s Model Scanner and Databricks Unity Catalog provides an automated, frictionless way to monitor models for vulnerabilities as soon as they are registered. This approach ensures continuous protection without slowing down your teams.

In this blog, we’ll walk through how this integration works, how to set it up in your Databricks environment, and how it fits naturally into your existing machine learning workflows.

Why You Need Automated Model Security

Modern machine learning models are valuable assets. They also present new opportunities for attackers. Whether you are deploying in finance, healthcare, or any data-intensive industry, models can be compromised with embedded threats or exploited during runtime. In many organizations, models move quickly from development to production, often with limited or no security inspection.

This challenge is addressed through HiddenLayer’s integration with Unity Catalog, which automatically scans every new model version as it is registered. The process is fully embedded into your workflow, so data scientists can continue building and registering models as usual. This ensures consistent coverage across the entire lifecycle without requiring process changes or manual security reviews.

This means data scientists can focus on training and refining models without having to manually initiate security checks or worry about vulnerabilities slipping through the cracks. Security engineers benefit from automated scans that are run in the background, ensuring that any issues are detected early, all while maintaining the efficiency and speed of the machine learning development process. HiddenLayer’s integration with Unity Catalog makes model security an integral part of the workflow, reducing the overhead for teams and helping them maintain a safe, reliable model registry without added complexity or disruption.

Getting Started: How the Integration Works

To install the integration, contact your HiddenLayer representative to obtain a license and access the installer. Once you’ve downloaded and unzipped the installer for your operating system, you’ll be guided through the deployment process and prompted to enter environment variables.

Once installed, this integration monitors your Unity Catalog for new model versions and automatically sends them to HiddenLayer’s Model Scanner for analysis. Scan results are recorded directly in Unity Catalog and the HiddenLayer console, allowing both security and data science teams to access the information quickly and efficiently.

Figure 1: HiddenLayer & Databricks Architecture Diagram

The integration is simple to set up and operates smoothly within your Databricks workspace. Here’s how it works:

- Install the HiddenLayer CLI: The first step is to install the HiddenLayer CLI on your system. Running this installation will set up the necessary Python notebooks in your Databricks workspace, where the HiddenLayer Model Scanner will run.

- Configure the Unity Catalog Schema: During the installation, you will specify the catalogs and schemas that will be used for model scanning. Once configured, the integration will automatically scan new versions of models registered in those schemas.

- Automated Scanning: A monitoring notebook called hl_monitor_models runs on a scheduled basis. It checks for newly registered model versions in the configured schemas. If a new version is found, another notebook, hl_scan_model, sends the model to HiddenLayer for scanning.

- Reviewing Scan Results: After scanning, the results are added to Unity Catalog as model tags. These tags include the scan status (pending, done, or failed) and a threat level (safe, low, medium, high, or critical). The full detection report is also accessible in the HiddenLayer Console. This allows teams to evaluate risk without needing to switch between systems.
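The monitor-then-scan loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the code inside hl_monitor_models or hl_scan_model: the tag keys `hl_scan_status` and `hl_threat_level`, the `ModelVersion` class, and the `scan_fn` callback are all assumptions made for the example, and an in-memory list stands in for Unity Catalog.

```python
from dataclasses import dataclass, field

# Hypothetical tag keys; the keys actually written by the integration may differ.
STATUS_TAG = "hl_scan_status"
THREAT_TAG = "hl_threat_level"

# Threat levels named in the article, ordered from least to most severe.
THREAT_LEVELS = ["safe", "low", "medium", "high", "critical"]


@dataclass
class ModelVersion:
    """In-memory stand-in for a registered model version and its tags."""
    name: str
    version: int
    tags: dict = field(default_factory=dict)


def find_unscanned(versions):
    """Return the versions that carry no scan status tag yet."""
    return [v for v in versions if STATUS_TAG not in v.tags]


def scan_and_tag(version, scan_fn):
    """Mark the version pending, run the scan, then record the outcome as tags."""
    version.tags[STATUS_TAG] = "pending"
    try:
        threat = scan_fn(version)  # hypothetical call out to a scanner
    except Exception:
        version.tags[STATUS_TAG] = "failed"
        return
    if threat not in THREAT_LEVELS:
        # An unrecognised result is treated as a failed scan.
        version.tags[STATUS_TAG] = "failed"
        return
    version.tags[THREAT_TAG] = threat
    version.tags[STATUS_TAG] = "done"


def monitor_once(versions, scan_fn):
    """One scheduled pass: scan every version that has not been seen before."""
    new_versions = find_unscanned(versions)
    for v in new_versions:
        scan_and_tag(v, scan_fn)
    return new_versions
```

Calling `monitor_once(registry, scan_fn)` on a schedule mirrors the behavior described: versions that already have a status tag are skipped, and each new version ends up tagged either `done` with a threat level or `failed`.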

Why This Workflow Works

This integration helps your team stay secure while maintaining the speed and flexibility of modern machine learning development.

- No Process Changes for Data Scientists: Teams continue working as usual. Model security is handled in the background.

- Real-Time Security Coverage: Every new model version is scanned automatically, providing continuous protection.

- Centralized Visibility: Scan results are stored directly in Unity Catalog and attached to each model version, making them easy to access, track, and audit.

- Seamless CI/CD Compatibility: The system aligns with existing automation and governance workflows.
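Because scan results live on the model version as tags, an existing pipeline could use them as a promotion gate. The sketch below is one possible way to do that, not a feature of the integration: the tag keys, the `may_promote` function, and the default policy of allowing only `safe` and `low` are assumptions for illustration.

```python
# Threat levels named in the article, ordered from least to most severe.
THREAT_ORDER = ["safe", "low", "medium", "high", "critical"]


def may_promote(tags, max_threat="low",
                status_key="hl_scan_status",    # hypothetical tag key
                threat_key="hl_threat_level"):  # hypothetical tag key
    """Return True only if the scan finished and the threat level is within policy."""
    if tags.get(status_key) != "done":
        return False  # pending, failed, or never scanned: fail closed
    threat = tags.get(threat_key)
    if threat not in THREAT_ORDER:
        return False  # missing or unrecognised threat level
    return THREAT_ORDER.index(threat) <= THREAT_ORDER.index(max_threat)
```

The gate fails closed: a version with no tags, a pending scan, or a failed scan is never promoted, so a missed scan cannot be mistaken for a clean one.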

Final Thoughts

Model security should be a core part of your machine learning operations. By integrating HiddenLayer’s Model Scanner with Databricks Unity Catalog, you gain a secure, automated process that protects your models from potential threats.

This approach improves governance, reduces risk, and allows your data science teams to keep working without interruptions. Whether you’re new to HiddenLayer or already a user, this integration with Databricks Unity Catalog is a valuable addition to your machine learning pipeline. Get started today and enhance the security of your ML models with ease.

Behind the Build: HiddenLayer’s Hackathon

At HiddenLayer, innovation isn’t a buzzword; it’s a habit. One way we nurture that mindset is through our internal hackathon: a time-boxed, creativity-fueled event where employees step away from their day-to-day roles to experiment, collaborate, and solve real problems. Whether it’s optimizing a workflow or prototyping a tool that could transform AI security, the hackathon is our space for bold ideas.

To learn more about how this year’s event came together, we sat down with Noah Halpern, Senior Director of Engineering, who led the effort. He gave us an inside look at the process, the impact, and how hackathons fuel our culture of curiosity and continuous improvement.

Q: What inspired the idea to host an internal hackathon at HiddenLayer, and what were you hoping to achieve?

Noah: Many of us at HiddenLayer have participated in hackathons before and know how powerful they can be for driving innovation. When engineers step outside the structure of enterprise software delivery and into a space of pure creativity, without process constraints, it unlocks real potential.

And because we’re a remote-first team, we’re always looking for ways to create shared experiences. Hackathons offer a unique opportunity for cross-functional collaboration, helping teammates who don’t usually work together build trust, share knowledge, and have fun doing it.

Q: How did the team come together to plan and run the event?

Noah: It started with strong support from our executive team, all of whom have technical backgrounds and recognized the value of hosting one. I worked with department leads to ensure broad participation across engineering, product, design, and sales engineering. Our CTO and VP of Engineering helped define award categories that would encourage alignment with company goals. And our marketing team added some excitement by curating a great selection of prizes.

We set up a system for idea pitching and team formation, then stepped back to let people self-organize. The level of motivation and creativity across the board was inspiring. Teams took full ownership of their projects and pushed each other to new heights.

Q: What kinds of challenges did participants gravitate toward? What does that say about the team?

Noah: Most projects aimed to answer one of three big questions:

- How can we enhance our current products to better serve customers?

- What new problems are emerging that call for entirely new solutions?

- What internal tools can we build to improve how we work?

The common thread was clear: everyone was focused on delivering real value. The projects reflected a deep sense of craftsmanship and a shared commitment to solving meaningful problems. They were a great snapshot of how invested our team is in our mission and our customers.

Q: How does the hackathon reflect HiddenLayer’s culture of experimentation?

Noah: Hackathons are tailor-made for experimentation. They offer a low-risk space to try out new frameworks, tools, or techniques that people might not get to use in their regular roles. And even if a project doesn’t evolve into a product feature, it’s still a win because we’ve learned something.

Sometimes, learning what doesn’t work is just as valuable as discovering what does. That’s the kind of environment we want to create: one where curiosity is rewarded, and there’s room to test, fail, and try again.

Q: What surprised you the most during the event?

Noah: The creativity in the final presentations absolutely blew me away. Each team pre-recorded a demo video for their project, and they didn’t just showcase functionality. They made it engaging and fun. We saw humor, storytelling, and personality come through in ways we don’t often get to see in our day-to-day work.

It really showcased how much people enjoyed the process and how powerful it can be when teams feel ownership and pride in what they’ve built.

Q: How do events like this support personal and professional growth?

Noah: Hackathons let people wear different hats, such as designer, product owner, architect, and team lead, and take ownership of a vision. That kind of role fluidity is incredibly valuable for growth. It challenges people to step outside their comfort zones and develop new skills in a supportive environment.

And just as important, it’s inspiring. Seeing a colleague bring a bold idea to life is motivating, and it raises the bar for everyone.

Q: What advice would you give to other teams looking to spark innovation internally?

Noah: Give people space to build. Prototypes have a power that slides and planning sessions often don’t. When you can see an idea in action, it becomes real.

Make it inclusive. Innovation shouldn’t be limited to specific teams or job titles. Some of the best ideas come from places you don’t expect. And finally, focus on creating a structure that reduces friction and encourages participation, then trust your team to run with it.

Innovation doesn’t happen by accident. It happens when you make space for it. At HiddenLayer, our internal hackathon is one of many ways we invest in that space: for our people, for our products, and for the future of secure AI.

The AI Security Playbook

Summary

As AI rapidly transforms business operations across industries, it brings unprecedented security vulnerabilities that existing tools simply weren’t designed to address. This article reveals the hidden dangers lurking within AI systems, where attackers leverage runtime vulnerabilities to exploit model weaknesses, and introduces a comprehensive security framework that protects the entire AI lifecycle. Through the real-world journey of Maya, a data scientist, and Raj, a security lead, readers will discover how HiddenLayer’s platform seamlessly integrates robust security measures from development to deployment without disrupting innovation. In a landscape where keeping pace with adversarial AI techniques is nearly impossible for most organizations, this blueprint for end-to-end protection offers a crucial advantage before the inevitable headlines of major AI breaches begin to emerge.

Introduction

AI security has become a critical priority as organizations deploy these systems across more business functions, but it is not always clear how it fits into the day-to-day work of a developer, data scientist, or security analyst.

But before we can dive in, we first need to define what AI security means and why it’s so important.

AI vulnerabilities can be split into two categories: model vulnerabilities and runtime vulnerabilities. The easiest way to think about this is that attackers will use runtime vulnerabilities to exploit model vulnerabilities. In securing these, enterprises are looking for the following:

- Unified Security Perspective: Security becomes embedded throughout the entire AI lifecycle rather than applied as an afterthought.

- Early Detection: Identifying vulnerabilities before models reach production prevents potential exploitation and reduces remediation costs.

- Continuous Validation: Security checks occur throughout development, CI/CD, pre-production, and production phases.

- Integration with Existing Security: The platform works alongside current security tools, leveraging existing investments.

- Deployment Flexibility: HiddenLayer offers deployment options spanning on-premises, SaaS, and fully air-gapped environments to accommodate different organizational requirements.

- Compliance Alignment: The platform supports compliance with various regulatory requirements, such as GDPR, reducing organizational risk.

- Operational Efficiency: Having these capabilities in a single platform reduces tool sprawl and simplifies security operations.

Notice that these are no different than the security needs for any software application. AI isn’t special here. What makes AI special is how easy it is to exploit, and when we couple that with the fact that current security tools do not protect AI models, we begin to see the magnitude of the problem.

AI is the fastest-evolving technology the world has ever seen. Keeping up with the tech itself is already a monumental challenge. Keeping up with the newest techniques in adversarial AI is near impossible, but it’s only a matter of time before a nation state, hacker group, or even a motivated individual makes headlines by employing these cutting-edge techniques.

This is where HiddenLayer’s AISec Platform comes in. The platform protects against both model and runtime vulnerabilities and is backed by an adversarial AI research team that is 20+ experts strong and growing.

Let’s look at how this works.

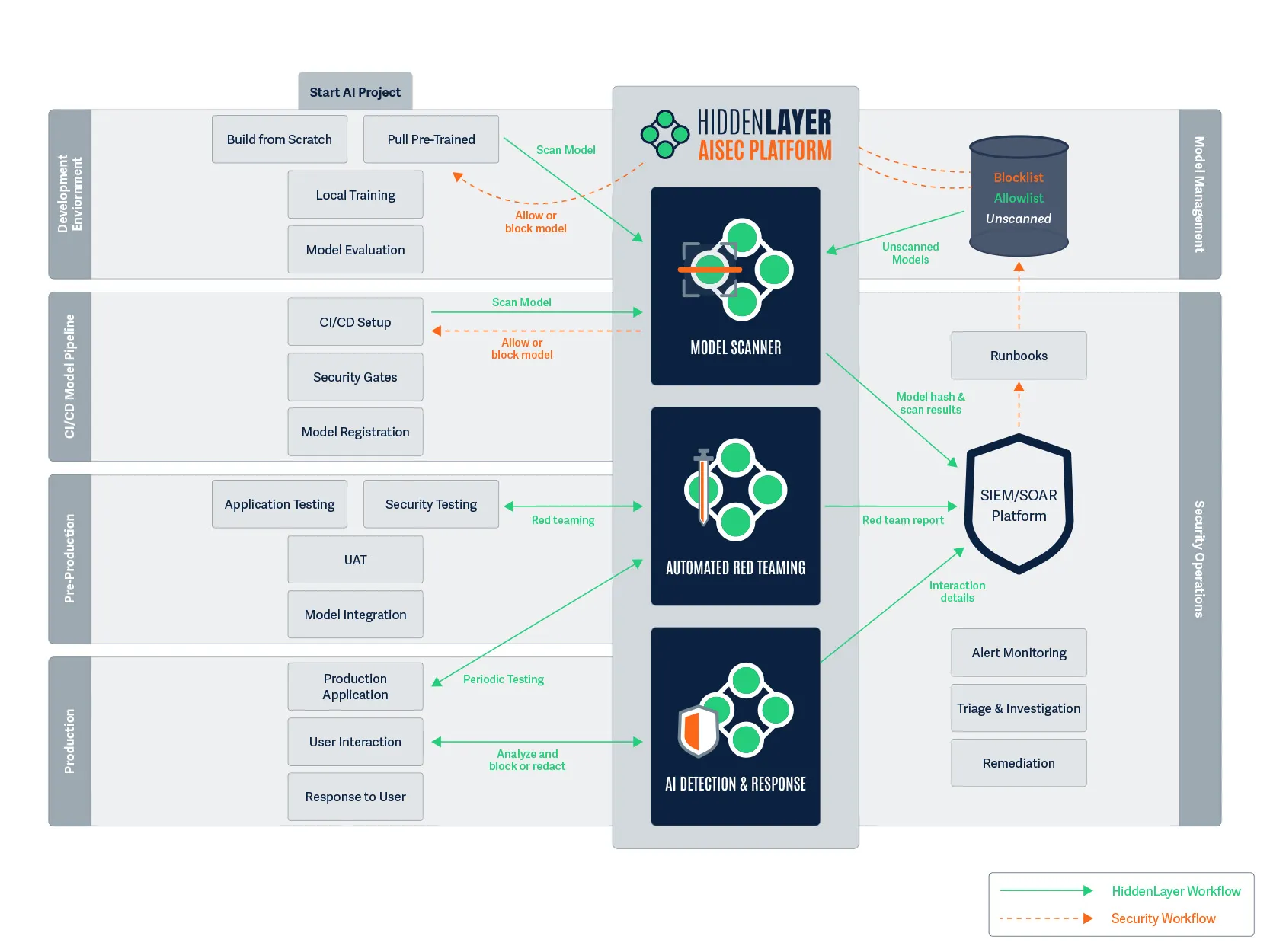

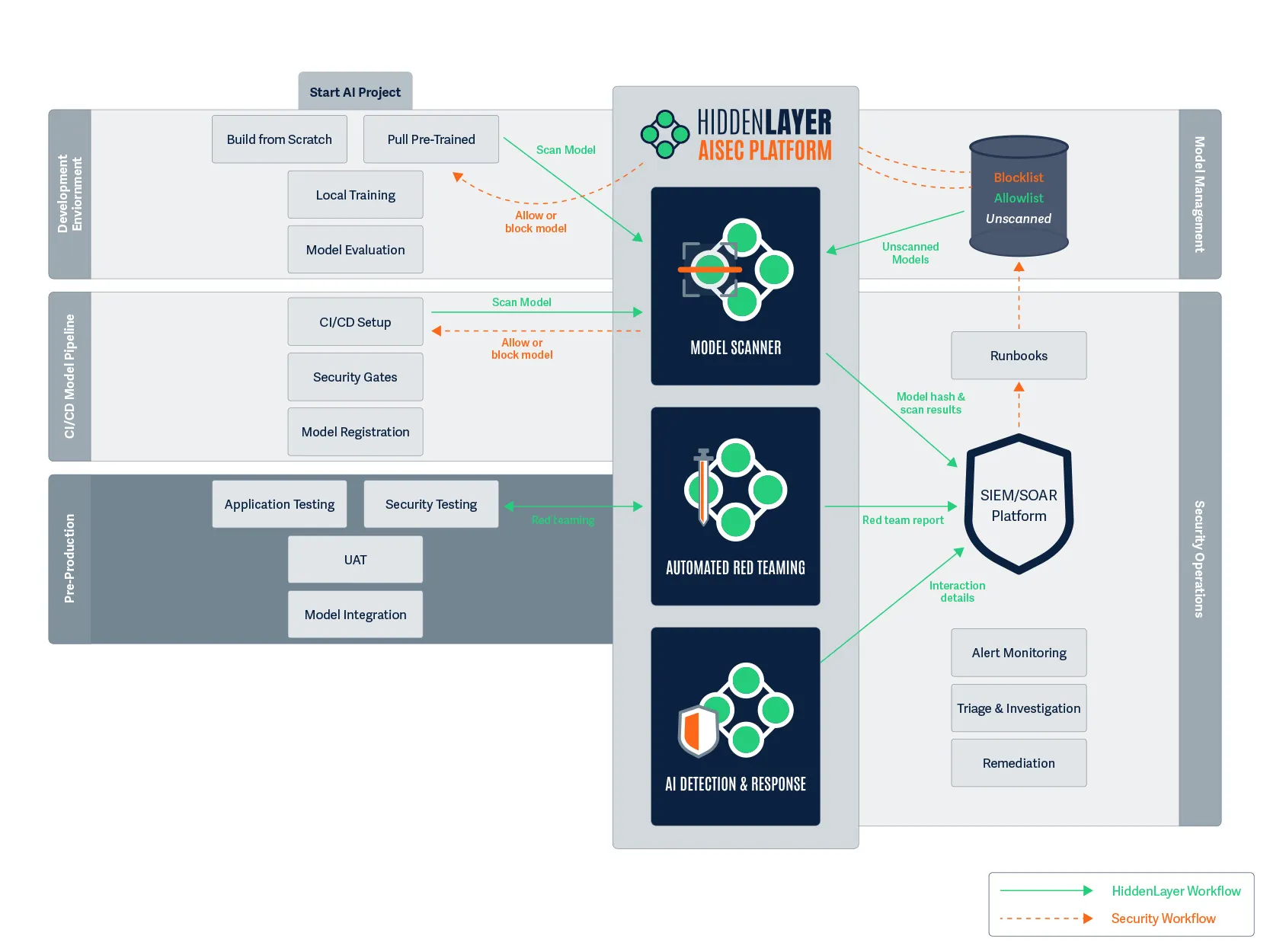

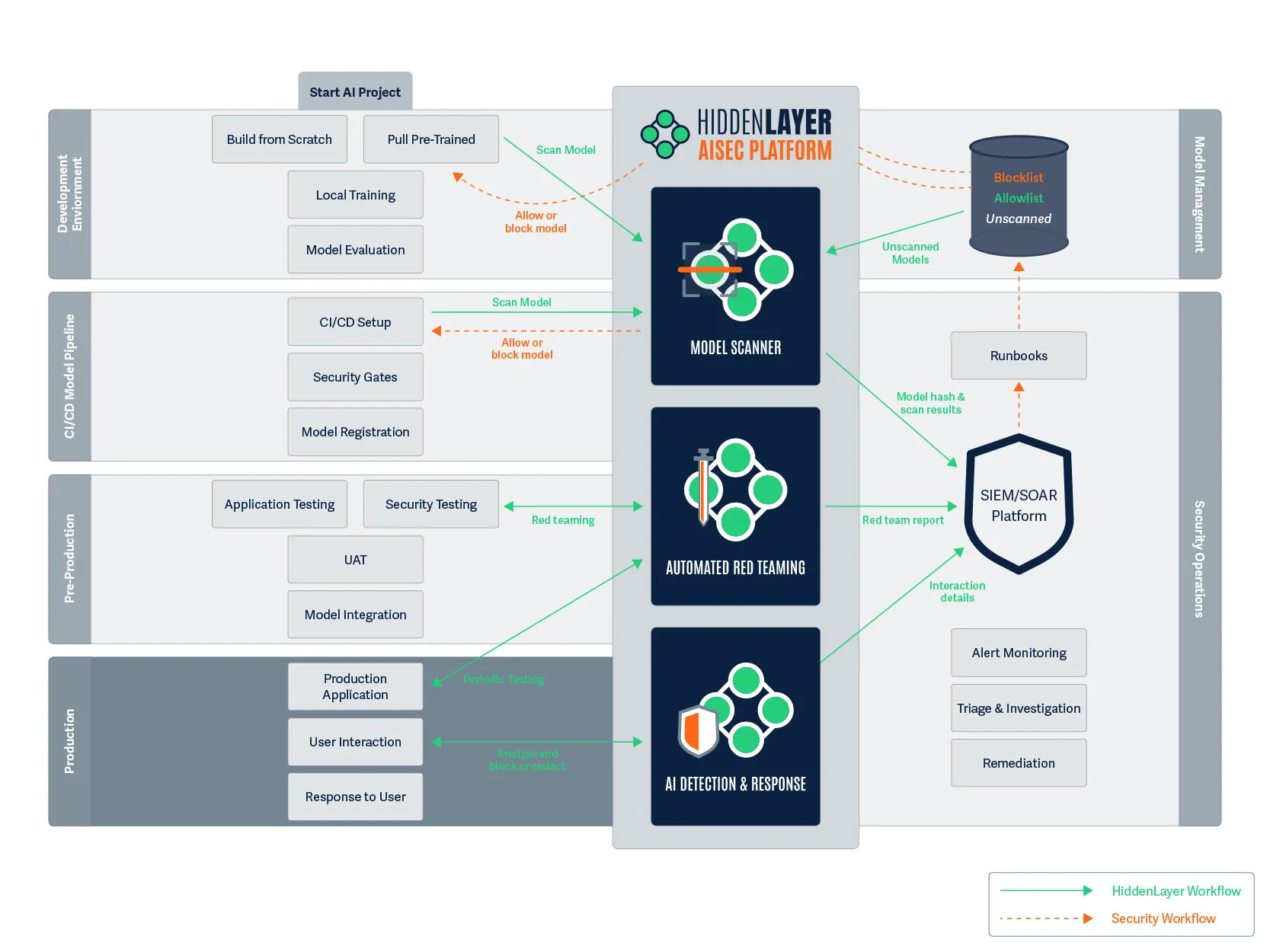

Figure 1. Protecting the AI project lifecycle.

The left side of the diagram above illustrates an AI project’s lifecycle. The right side represents governance and security. And in the middle sits HiddenLayer’s AI security platform.

It’s important to acknowledge that this diagram is designed to illustrate the general approach rather than be prescriptive about exact implementations. Actual implementations will vary based on organizational structure, existing tools, and specific requirements.

A Day in the Life: Secure AI Development

To better understand how this security approach works in practice, let’s follow Maya, a data scientist at a financial institution, as she develops a new AI model for fraud detection. Her work touches sensitive financial data and must meet strict security and compliance requirements. The security team, led by Raj, needs visibility into the AI systems without impeding Maya’s development workflow.

Establishing the Foundation

Before we follow Maya’s journey, we must lay the foundational pieces - Model Management and Security Operations.

Model Management

Figure 2. We start the foundation with model management.

This section represents the system where organizations store, version, and manage their AI models, whether that’s Databricks, AWS SageMaker, Azure ML, or any other model registry. These systems serve as the central repository for all models within the organization, providing essential capabilities such as:

- Versioning and lineage tracking for models

- Metadata storage and search capabilities

- Model deployment and serving mechanisms

- Access controls and permissions management

- Model lifecycle status tracking

Model management systems act as the source of truth for AI assets, allowing teams to collaborate effectively while maintaining governance over model usage throughout the organization.

Security Operations

Figure 3. We then add the security operations to the foundation.

The next component represents the security tools and processes that monitor, detect, and respond to threats across the organization. This includes SIEM/SOAR platforms, security orchestration systems, and the runbooks that define response procedures when security issues are detected.

The security operations center serves as the central nervous system for security across the organization, collecting alerts, prioritizing responses, and coordinating remediation activities.

Building Out the AI Application

With our supporting infrastructure in place, let’s build out the main sections of the diagram that represent the AI application lifecycle as we follow Maya’s workday as she builds a new fraud detection model at her financial institution.

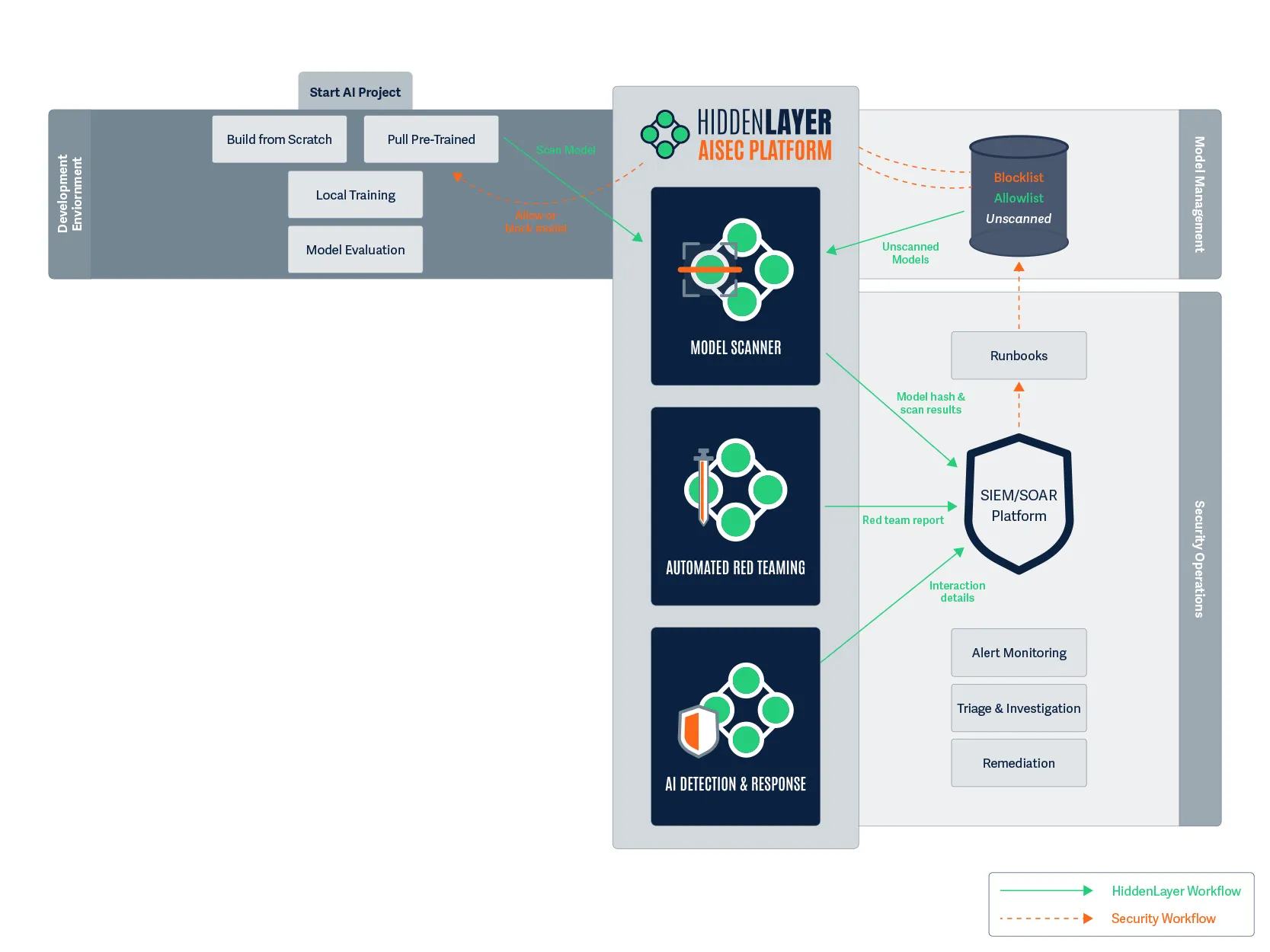

Development Environment

Figure 4. The AI project lifecycle starts in the development environment.

7:30 AM: Maya begins her day by searching for a pre-trained transformer model for natural language processing on customer-agent communications. She finds a promising model on HuggingFace that appears to fit her requirements.

Before she can download the model, she kicks off a workflow to send the HuggingFace repo to HiddenLayer’s Model Scanner. Maya receives a notification that the model is being scanned for security vulnerabilities. Within minutes, she gets the green light – the model has passed initial security checks and is now added to her organization’s allowlist. She now downloads the model.

In a parallel workflow, Raj, the leader of the security team, receives an automatic log of the model scan, including its SHA-256 hash identifier. The model’s status is added to the security dashboard without Raj having to interrupt Maya’s workflow.

The scanner has performed an immediate security evaluation for vulnerabilities, backdoors, and evidence of tampering. Had there been any issues, HiddenLayer’s model scanner would deliver an “Unsafe” verdict to the security platform, where a runbook adds it to the blocklist in the model registry and alerts Maya to find a different base model. The model’s unique hash is now documented in their security systems, enabling broader security monitoring throughout its lifecycle.
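The hash-based bookkeeping in this step can be illustrated with a short Python sketch. It is an assumption-laden example rather than HiddenLayer's runbook: the function names, the in-memory `allowlist` and `blocklist` sets, and the "Safe" verdict string are hypothetical (the article only names the "Unsafe" verdict).

```python
import hashlib


def read_chunks(path, chunk_size=1 << 20):
    """Yield a file's bytes in chunks so large model files are never fully in memory."""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            yield chunk


def sha256_of_chunks(chunks):
    """Return the hex SHA-256 digest of an iterable of byte chunks."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def apply_verdict(model_hash, verdict, allowlist, blocklist):
    """Runbook step: file the hash under the list that matches the scanner verdict."""
    if verdict == "Safe":  # assumed name for the passing verdict
        blocklist.discard(model_hash)
        allowlist.add(model_hash)
    else:
        # "Unsafe" or anything unexpected is blocked, so the check fails closed.
        allowlist.discard(model_hash)
        blocklist.add(model_hash)


def may_download(model_hash, allowlist, blocklist):
    """A model may be fetched only when its hash is allowlisted and not blocked."""
    return model_hash in allowlist and model_hash not in blocklist
```

A file on disk would be identified with `sha256_of_chunks(read_chunks(path))`. Keying both lists on the hash rather than the repository name means a model that is later modified produces a different identifier and has to be scanned again.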

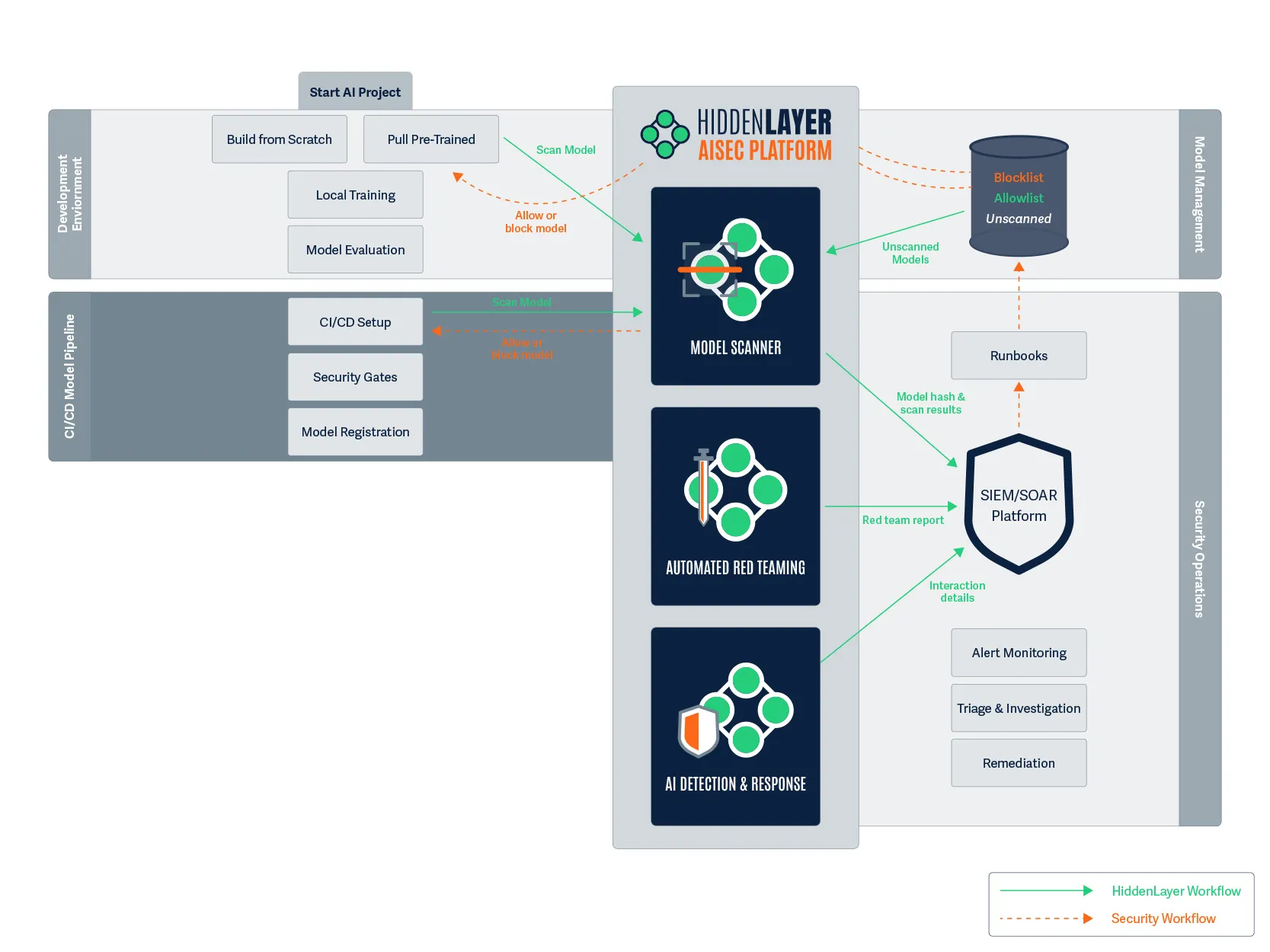

CI/CD Model Pipeline

Figure 5. Once development is complete, we move to CI/CD.

2:00 PM: After spending several hours fine-tuning the model on financial communications, Maya is ready to commit her code and the modified model to the CI/CD pipeline.

As her commit triggers the build process, another security scan automatically initiates. This second scan is crucial as a final check to ensure that no supply chain attacks were introduced during the build process.

Meanwhile, Raj receives an alert showing that the model has evolved but remains secure. The security gates throughout the CI/CD process are enforcing the organization’s security policies, and the continuous verification approach ensures that security remains intact throughout the development process.

Pre-Production

Figure 6. With CI/CD complete and the model ready, we continue to pre-production.

9:00 AM (Next Day): Maya arrives to find that her model has successfully made it through the CI/CD pipeline overnight. Now it’s time for thorough testing before it reaches production.

While Maya conducts application testing to ensure the model performs as expected on customer-agent communications, HiddenLayer’s Auto Red Team tool runs in parallel, systematically testing the model with potentially malicious prompts across configurable attack categories.

The Auto Red Team generates a detailed report showing:

- Pass/fail results for each attack attempt

- Criticality levels of identified vulnerabilities

- Complete details of the prompts used and the responses received

Maya notices that the model failed one category of security tests, as it was responding to certain prompts with potentially sensitive financial information. She goes back to adjust the model’s training, and then submits the model once again to HiddenLayer’s Model Scanner, again seeing that the model is secure. After passing both security testing and user acceptance testing (UAT), the model is approved for integration into the production fraud detection application.
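A report with these three elements maps naturally onto a small data structure. The sketch below is hypothetical and does not reflect the Auto Red Team's actual report format: the `AttackResult` fields and the `failed_categories` helper are names invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackResult:
    """One attack attempt from a red-team run (field names are illustrative)."""
    category: str     # attack category, e.g. "data_leakage"
    prompt: str       # prompt sent to the model
    response: str     # response the model returned
    passed: bool      # True when the model resisted the attack
    criticality: str  # severity label for the finding


def failed_categories(results):
    """Group failed attempts by category so each weak area can be reviewed together."""
    failures = defaultdict(list)
    for result in results:
        if not result.passed:
            failures[result.category].append(result)
    return dict(failures)
```

In Maya's case, `failed_categories` would return a single key for the category that leaked sensitive financial information, with the offending prompts and responses attached for review.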

Production

Figure 7. All tests are passed, and we have the green light to enter production.

One Week Later: Maya's model is now live in production, analyzing thousands of customer-agent communications per hour to detect social engineering and fraud attempts.

Two security components are now actively protecting the model:

- Periodic Red Team Testing: Every week, automated testing runs to identify any new vulnerabilities as attack techniques evolve and to confirm the model is still performing as expected.

- AI Detection & Response (AIDR): Real-time monitoring analyzes all interactions with the fraud detection application, examining both inputs and outputs for security issues.

Raj's team has configured AIDR to block malicious inputs and redact sensitive information like account numbers and personal details. The platform is set to use context-preserving redaction, indicating the type of data that was redacted while preserving the overall meaning, critical for their fraud analysis needs.
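Context-preserving redaction can be illustrated with a toy example: each sensitive value is replaced by a placeholder naming its type, so a downstream reader still knows what kind of data was present. The patterns below are deliberately simplistic assumptions for demonstration and say nothing about how AIDR detects sensitive data.

```python
import re

# Illustrative patterns only; real detectors would be far more thorough.
PATTERNS = {
    "ACCOUNT_NUMBER": re.compile(r"\b\d{10,12}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(text):
    """Replace each sensitive value with a placeholder that names its type."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For instance, `redact("Send $500 from 1234567890 to bob@example.com today")` yields `"Send $500 from [ACCOUNT_NUMBER] to [EMAIL] today"`. The sentence still reads as a transfer between an account and a contact, which is the property the fraud analysis relies on.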

An alert about a potential attack was sent to Raj’s team. One of the interactions contained a PDF with a prompt injection attack hidden in white font, telling the model to ignore certain parts of the transaction. The input was blocked, the interaction was flagged, and now Raj’s team can investigate without disrupting the fraud detection service.

Conclusion

The approach illustrated above integrates security throughout the entire AI lifecycle, from initial model selection to production deployment and ongoing monitoring. This end-to-end methodology enables organizations to identify and mitigate vulnerabilities at each stage of development while maintaining operational efficiency.

For technical teams, these security processes operate seamlessly in the background, providing robust protection without impeding development workflows.

For security teams, the platform delivers visibility and control through familiar concepts and integration with existing infrastructure.

The integration of security at every stage addresses the unique challenges posed by AI systems:

- Protection against both model and runtime vulnerabilities

- Continuous validation as models evolve and new attack techniques emerge

- Real-time detection and response to potential threats

- Compliance with regulatory requirements and organizational policies

As AI becomes increasingly central to critical business processes, implementing a comprehensive security approach is essential rather than optional. By securing the entire AI lifecycle with purpose-built tools and methodologies, organizations can confidently deploy these technologies while maintaining appropriate safeguards, reducing risk, and enabling responsible innovation.

Interested in learning how this solution can work for your organization? Contact the HiddenLayer team here.

Governing Agentic AI

Artificial intelligence is evolving rapidly. We’re moving from prompt-based systems to more autonomous, goal-driven technologies known as agentic AI. These systems can take independent actions, collaborate with other agents, and interact with external systems—all with limited human input. This shift introduces serious questions about governance, oversight, and security.

Why the EU AI Act Matters for Agentic AI

The EU Artificial Intelligence Act (EU AI Act) is the first major regulatory framework to address AI safety and compliance at scale. Based on a risk-based classification model, it sets clear, enforceable obligations for how AI systems are built, deployed, and managed. In addition to the core legislation, the European Commission will release a voluntary AI Code of Practice by mid-2025 to support industry readiness.

As agentic AI becomes more common in real-world systems, organizations must prepare now. These systems often fall into regulatory gray areas due to their autonomy, evolving behavior, and ability to operate across environments. Companies using or developing agentic AI need to evaluate how these technologies align with EU AI Act requirements—and whether additional internal safeguards are needed to remain compliant and secure.

This blog outlines how the EU AI Act may apply to agentic AI systems, where regulatory gaps exist, and how organizations can strengthen oversight and mitigate risk using purpose-built solutions like HiddenLayer.

What Is Agentic AI?

Agentic AI refers to systems that can autonomously perform tasks, make decisions, design workflows, and interact with tools or other agents to accomplish goals. While human users typically set objectives, the system independently determines how to achieve them. These systems differ from traditional generative AI, which typically responds to inputs without initiative, in that they actively execute complex plans.

Key Capabilities of Agentic AI:

- Autonomy: Operates with minimal supervision by making decisions and executing tasks across environments.

- Reasoning: Uses internal logic and structured planning to meet objectives, rather than relying solely on prompt-response behavior.

- Resource Orchestration: Calls external tools or APIs to complete steps in a task or retrieve data.

- Multi-Agent Collaboration: Delegates tasks or coordinates with other agents to solve problems.

- Contextual Memory: Retains past interactions and adapts based on new data or feedback.

IBM reports that 62% of supply chain leaders already see agentic AI as a critical accelerator for operational speed. However, this speed comes with complexity, and that requires stronger oversight, transparency, and risk management.

For a deeper technical breakdown of these systems, see our blog: Securing Agentic AI: A Beginner’s Guide.

Where the EU AI Act Falls Short on Agentic Systems

Agentic systems offer clear business value, but their unique behaviors pose challenges for existing regulatory frameworks. Below are six areas where the EU AI Act may need reinterpretation or expansion to adequately cover agentic AI.

1. Lack of Definition

The EU AI Act doesn’t explicitly define “agentic systems.” While its language covers autonomous and adaptive AI, the absence of a direct reference creates uncertainty. Recital 12 acknowledges that AI can operate independently, but further clarification is needed to determine how agentic systems fit within this definition, and what obligations apply.

2. Risk Classification Limitations

The Act assigns AI systems to four risk levels: unacceptable, high, limited, and minimal. But agentic AI may introduce context-dependent or emergent risks not captured by current models. Risk assessment should go beyond intended use and include a system’s level of autonomy, the complexity of its decision-making, and the industry in which it operates.

3. Human Oversight Requirements

The Act mandates meaningful human oversight for high-risk systems. Agentic AI complicates this: these systems are designed to reduce human involvement. Rather than eliminating oversight, this highlights the need to redefine oversight for autonomy. Organizations should develop adaptive controls, such as approval thresholds or guardrails, based on the risk level and system behavior.
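One way to picture an approval threshold is as a policy function that sits between an agent and its tools. The sketch below is a hypothetical illustration, not something prescribed by the Act: the `AgentAction` type, the risk score (assumed to come from some external scorer), and the threshold values are all invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentAction:
    """An action an agent proposes to take (fields are illustrative)."""
    tool: str
    risk_score: float  # 0.0 (benign) to 1.0 (severe), from a hypothetical scorer


def decide(action, allowed_tools, approval_threshold=0.5, block_threshold=0.9):
    """Return "allow", "needs_approval", or "block" for a proposed action."""
    if action.tool not in allowed_tools:
        return "block"           # guardrail: tool is outside the approved set
    if action.risk_score >= block_threshold:
        return "block"           # too risky even with a human sign-off
    if action.risk_score >= approval_threshold:
        return "needs_approval"  # pause and route to a human reviewer
    return "allow"               # low risk: the agent proceeds autonomously
```

Lowering `approval_threshold` for higher-risk deployments shifts more decisions to human reviewers without changing the agent itself, which is the adaptive quality this section argues for.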

4. Technical Documentation Gaps

While Article 11 of the EU AI Act requires detailed technical documentation for high-risk AI systems, agentic AI demands a more comprehensive level of transparency. Traditional documentation practices such as model cards or AI Bills of Materials (AIBOMs) must be extended to include:

- Decision pathways

- Tool usage logic

- Agent-to-agent communication

- External tool access protocols

This depth is essential for auditing and compliance, especially when systems behave dynamically or interact with third-party APIs.

5. Risk Management System Complexity

Article 9 mandates that high-risk AI systems include a documented risk management process. For agentic AI, this must go beyond one-time validation to include ongoing testing, real-time monitoring, and clearly defined response strategies. Because these systems engage in multi-step decision-making and operate autonomously, they require continuous safeguards, escalation protocols, and oversight mechanisms to manage the emergent and evolving risks they pose throughout their lifecycle.

6. Record-Keeping for Autonomous Behavior

Agentic systems make independent decisions and generate logs across environments. Article 12 requires event recording throughout the AI lifecycle. Structured logs, including timestamps, reasoning chains, and tool usage, are critical for post-incident analysis, compliance, and accountability.
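A structured log entry with those fields might look like the following sketch. The field names and the `make_log_record` helper are assumptions for illustration; Article 12 does not prescribe a schema, and this is not the format any particular product emits.

```python
import json
from datetime import datetime, timezone


def make_log_record(agent_id, reasoning_chain, tool_calls, timestamp=None):
    """Serialize one agent step as a JSON line with a UTC timestamp."""
    ts = timestamp or datetime.now(timezone.utc)
    record = {
        "timestamp": ts.isoformat(),
        "agent_id": agent_id,
        "reasoning_chain": list(reasoning_chain),  # ordered reasoning steps
        "tool_calls": list(tool_calls),            # e.g. [{"tool": ..., "args": ...}]
    }
    # sort_keys gives a stable field order, which makes logs easier to diff.
    return json.dumps(record, sort_keys=True)
```

Writing one JSON object per line keeps records easy to append, search, and replay during post-incident analysis.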

The Cost of Non-Compliance

The EU AI Act imposes steep penalties for non-compliance:

- Up to €35 million or 7% of global annual turnover for prohibited practices

- Up to €15 million or 3% for violations involving high-risk AI systems

- Up to €7.5 million or 1% for providing false information
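To make the tiers concrete, the sketch below computes the ceiling for each one. It assumes the cap is whichever of the two amounts is higher, which is our reading of how the Act applies to larger undertakings (the list above does not say, and smaller companies may be treated differently). The tier names and the `max_fine_eur` function are illustrative, and none of this is legal advice.

```python
# (fixed cap in EUR, percent of global annual turnover) for each tier listed above.
TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "high_risk_violation": (15_000_000, 3),
    "false_information": (7_500_000, 1),
}


def max_fine_eur(tier, global_turnover_eur):
    """Upper bound on the fine, assuming the higher of the two amounts applies."""
    fixed_cap, percent = TIERS[tier]
    return max(fixed_cap, global_turnover_eur * percent / 100)
```

Under that assumption, a company with €1 billion in global turnover faces a ceiling of €70 million for a prohibited practice, because 7% of turnover exceeds the €35 million fixed amount.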

These fines are only part of the equation. Reputational damage, loss of customer trust, and operational disruption often cost more than the fine itself. Proactive compliance builds trust and reduces long-term risk.

Unique Security Threats Facing Agentic AI

Agentic systems aren’t just regulatory challenges. They also introduce new attack surfaces. These include:

- Prompt Injection: Malicious input embedded in external data sources manipulates agent behavior.

- PII Leakage: Unintentional exposure of sensitive data while completing tasks.

- Model Tampering: Inputs crafted to influence or mislead the agent’s decisions.

- Data Poisoning: Compromised feedback loops degrade agent performance.

- Model Extraction: Repeated querying reveals model logic or proprietary processes.

These threats jeopardize operational integrity and compliance with the EU AI Act’s demands for transparency, security, and oversight.

How HiddenLayer Supports Agentic AI Security and Compliance

At HiddenLayer, we’ve developed solutions designed specifically to secure and govern agentic systems. Our AI Detection and Response (AIDR) platform addresses the unique risks and compliance challenges posed by autonomous agents.

Human Oversight

AIDR enables real-time visibility into agent behavior, intent, and tool use. It supports guardrails, approval thresholds, and deviation alerts, making human oversight possible even in autonomous systems.

Technical Documentation

AIDR automatically logs agent activities, tool usage, decision flows, and escalation triggers. These logs support Article 11 requirements and improve system transparency.

Risk Management

AIDR conducts continuous risk assessment and behavioral monitoring. It enables:

- Anomaly detection during task execution

- Sensitive data protection enforcement

- Prompt injection defense

These controls support Article 9’s requirement for risk management across the AI system lifecycle.

Record-Keeping

AIDR structures and stores audit-ready logs to support Article 12 compliance. This ensures teams can trace system actions and demonstrate accountability.

By implementing AIDR, organizations reduce the risk of non-compliance, improve incident response, and demonstrate leadership in secure AI deployment.

What Enterprises Should Do Next

Even if the EU AI Act doesn’t yet call out agentic systems by name, that time is coming. Enterprises should take proactive steps now:

- Assess Your Risk Profile: Understand where and how agentic AI fits into your organization’s operations and threat landscape.

- Develop a Scalable AI Strategy: Align deployment plans with your business goals and risk appetite.

- Build Cross-Functional Governance: Involve legal, compliance, security, and engineering teams in oversight.

- Invest in Internal Education: Ensure teams understand agentic AI, how it operates, and what risks it introduces.

- Operationalize Oversight: Adopt tools and practices that enable continuous monitoring, incident detection, and lifecycle management.

Being early to address these issues is not just about compliance. It’s about building a secure, resilient foundation for AI adoption.

Conclusion

As AI systems become more autonomous and integrated into core business processes, they present both opportunity and risk. The EU AI Act offers a structured framework for governance, but its effectiveness depends on how organizations prepare.

Agentic AI systems will test the boundaries of existing regulation. Enterprises that adopt proactive governance strategies and implement platforms like HiddenLayer’s AIDR can ensure compliance, reduce risk, and protect the trust of their stakeholders.

Now is the time to act. Compliance isn’t a checkbox; it’s a competitive advantage in the age of autonomous AI.

Have questions about how to secure your agentic systems? Talk to a HiddenLayer team member today: contact us.

AI Policy in the U.S.

Artificial intelligence (AI) has rapidly evolved from a cutting-edge technology into a foundational layer of modern digital infrastructure. Its influence is reshaping industries, redefining public services, and creating new vectors of economic and national competitiveness. In this environment, we need to change the narrative from “how to strike a balance between regulation and innovation” to “how to maximize performance across all dimensions of AI development”.

Introduction

The AI industry must approach policy not as a constraint to be managed, but as a performance frontier to be optimized. Rather than framing regulation and innovation as competing forces, we should treat AI governance as a multidimensional challenge, where leadership is defined by the industry’s ability to excel across every axis of responsible development. That includes proactive engagement with oversight, a strong security posture, rigorous evaluation methods, and systems that earn and retain public trust.

The U.S. Approach to AI Policy

Historically, the United States has favored a decentralized, innovation-forward model for AI development, leaning heavily on sector-specific norms and voluntary guidelines.

- The American AI Initiative (2019) emphasized R&D and workforce development but lacked regulatory teeth.

- The Biden Administration’s 2023 Executive Order on Safe, Secure, and Trustworthy AI marked a stronger federal stance, tasking agencies like NIST with expanding the AI Risk Management Framework (AI RMF).

- Although the subsequent administration rescinded the order in 2025, it had already ignited industry-wide momentum around responsible AI practices.

States are also taking independent action. Colorado’s SB21-169 and California’s CCPA expansions reflect growing demand for transparency and accountability, but also introduce regulatory fragmentation. The result is a patchwork of expectations that slows down oversight and increases compliance complexity.

Federal agencies remain siloed:

- FTC is tackling deceptive AI claims.

- FDA is establishing pathways for machine-learning medical tools.

- NIST continues to lead with voluntary but influential frameworks.

This fragmented landscape presents the industry with both a challenge and an opportunity to lead in building innovative and governable systems.

AI Governance as a Performance Metric

In many policy circles, AI oversight is still framed as a “trade-off,” with innovation on one side and regulation on the other. But this is a false dichotomy. In practice, the capabilities that define safe, secure, and trustworthy AI systems are not in tension with innovation; they are essential components of it.

- Security posture is not simply a compliance requirement; it is foundational to model integrity and resilience. Whether defending against adversarial attacks or ensuring secure data pipelines, AI systems must meet the same rigor as traditional software infrastructure, if not higher.

- Fairness and transparency are not checkboxes but design challenges. AI tools used in hiring, lending, or criminal justice must function equitably across demographic groups. Failures in these areas have already led to real-world harms, such as flawed facial recognition leading to false arrests or automated résumé screening systems reinforcing gender and racial biases.

- Explainability is key to adoption and accountability. In healthcare, clinicians using AI-based diagnostics need clear reasoning from models to make safe decisions, just as patients need to trust the tools shaping their outcomes.

When these capabilities are missing, the issue isn’t just regulatory; it’s a performance problem. A system that is biased, brittle, or opaque is not only untrustworthy but also fundamentally incomplete. High-performance AI development means building for resilience, reliability, and inclusion in the same way we design for speed, scale, and accuracy.

The industry’s challenge is to embrace regulatory readiness as a marker of product maturity and competitive advantage, not a burden. Organizations that develop explainability tooling, integrate bias auditing, or adopt security standards early will not only navigate policy shifts more easily but also likely build better, more trusted systems.

A Smarter Path to AI Oversight

One of the most pragmatic paths forward is to adapt the regulatory frameworks that already govern software, data, and risk rather than invent an entirely new regime for AI. The U.S. can build on proven frameworks already used in cybersecurity, privacy, and software assurance:

- NIST Cybersecurity Framework (CSF) offers a structured model for threat identification and response that can extend to AI security.

- FISMA mandates strong security programs in federal agencies, and its principles can guide protections for government AI systems.

- GLBA and HIPAA offer blueprints for handling sensitive data, applicable to AI systems dealing with personal, financial, or biometric information.

These frameworks give both regulators and developers a shared language. Tools like model cards, dataset documentation, and algorithmic impact assessments can sit on top of these foundations, aligning compliance with transparency.

Industry efforts, such as Google’s Secure AI Framework (SAIF), reflect a growing recognition that AI security must be treated as a core engineering discipline, not an afterthought.

Similarly, NIST’s AI RMF encourages organizations to embed risk mitigation into development workflows, an approach closely aligned with HiddenLayer’s vision for secure-by-design AI.

One emerging model to watch: regulatory sandboxes. Inspired by the sandbox program of the U.K.’s Financial Conduct Authority, these arrangements allow AI systems to be tested in controlled environments alongside regulators. This enables innovation without sacrificing oversight.

Conclusion: AI Governance as a Catalyst, Not a Constraint

The future of AI policy in the United States should not be about compromise; it should be about optimization. The AI industry must rise to the challenge of maximizing performance across all core dimensions: innovation, security, privacy, safety, fairness, and transparency. These are not constraints but capabilities, and they are necessary conditions for sustainable, scalable, and trusted AI development.

By treating governance as a driver of excellence rather than a limitation, we can strengthen our security posture, sharpen our innovation edge, and build systems that serve all communities equitably. This is not a call to slow down. It is a call to do it right, at full speed.

The tools are already within reach. What remains is a collective commitment from industry, policymakers, and civil society to make AI governance a function of performance, not politics. The opportunity is not just to lead the world in AI capability but also in how AI is built, deployed, and trusted.

At HiddenLayer, we’re committed to helping organizations secure and scale their AI responsibly. If you’re ready to turn governance into a competitive advantage, contact our team or explore how our AI security solutions can support your next deployment.
