Introduction

Artificial Intelligence (AI) and Machine Learning (ML), the most common application of AI, are proving to be paradigm-shifting technologies. From autonomous vehicles and virtual assistants to fraud detection systems and medical diagnosis tools, practically every company in every industry is entering an AI arms race, seeking a competitive advantage by using ML to deliver better customer experiences, optimize business efficiencies, and accelerate innovative research.

CISOs, CIOs, and their cybersecurity operation teams are accustomed to adapting to the constant changes to their corporate environments and tech stacks. However, the breakneck pace of AI adoption has left many organizations struggling to put in place the proper processes, people, and controls necessary to protect against the risks and attacks inherent to ML.

According to a recent Forrester report, “It’s Time For Zero Trust AI,” a majority of decision-makers responsible for AI security said that Machine Learning (ML) projects will play a critical or important role in their company’s revenue generation, customer experience, and business operations in the next 18 months. Alarmingly, though, the majority of respondents noted that they currently rely on manual processes to address ML model threats, and 86% of respondents were ‘extremely concerned or concerned’ about their organization’s ML model security. To address this challenge, a majority of respondents expressed interest in investing, within the next 12 months, in a solution that manages ML model integrity and security.

In this blog, we will delve into the intricacies of Security for AI and its significance in the ever-evolving threat landscape. Our goal is to provide security teams with a comprehensive overview of the key considerations, risks, and best practices that should be taken into account when securing AI deployments within their organizations.

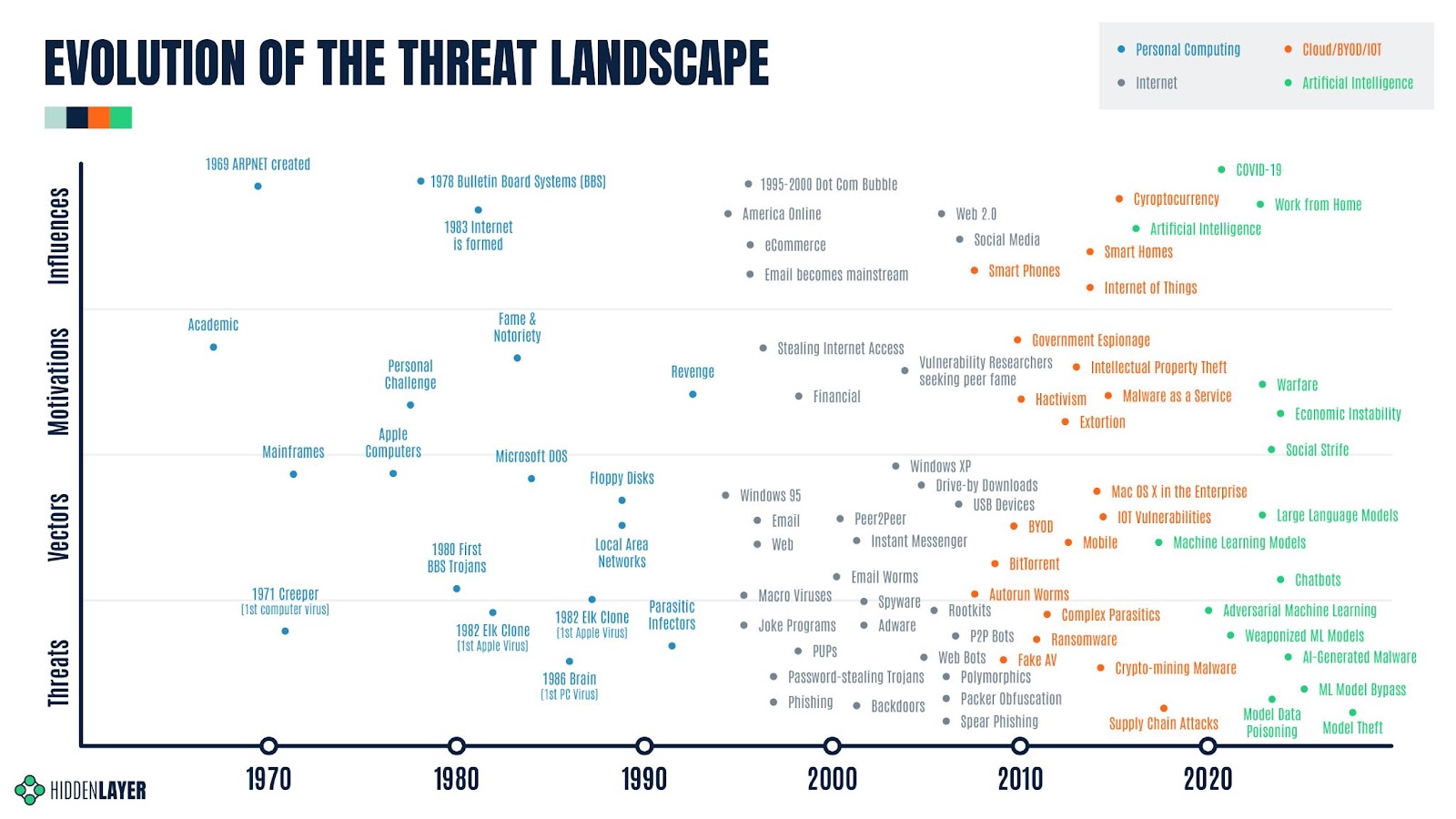

Before we dive deep into Security for AI, let’s take a step back and look at how the cybersecurity threat landscape has evolved, so we can see what is similar and what is different about AI compared to past paradigm-shifting moments.

Personal Computing Era – The Digital Revolution (aka the Third Industrial Revolution) ushered in the era of the Information Age and gave us mainframe computers, servers, and personal computing available to the masses. It also introduced the world to computer viruses and Trojans, making anti-virus software one of the founding fathers of cybersecurity products.

Internet Era – The Internet then opened a Pandora’s Box of threats to the new digital world, bringing with it computer worms, spam, phishing attacks, macro viruses, adware, spyware, and password-stealing Trojans, to name a few. Many of us still remember the sleepless nights from the Virus War of 2004. Anti-virus could no longer keep up on its own, which spawned new cybersecurity solutions like Firewalls, VPNs, Host Intrusion Prevention, Network Intrusion Prevention, Application Control, etc.

Cloud, BYOD, & IoT Era – Prior to 2006, the assets and data that most security teams needed to protect were primarily confined within the corporate firewall and VPN. Cloud computing, BYOD, and IoT changed all of that and decentralized corporate data, intellectual property, and network traffic. IT and Security Operations teams had to adjust to protecting company assets, employees, and data scattered all over the real and virtual world.

Artificial Intelligence Era – We are now at the doorstep of a significant new era thanks to artificial intelligence. Although the concept of AI has been a storytelling device in science fiction and a subject of academic research since the early 1900s, its real-world application wasn’t possible until the turn of the millennium. After OpenAI launched ChatGPT and it said hello to the world on November 30, 2022, AI became a dinner conversation in practically every household across the globe.

The world is still debating what impact AI will have on economic and social issues, but there is one thing that is not debatable – AI will bring with it a new era of cybersecurity threats and attacks. Let’s delve into how AI/ML works and how security teams will need to think about things differently to protect it.

How does Machine Learning Work?

Artificial Intelligence (AI) and Machine Learning (ML) are sometimes used interchangeably, which can cause confusion to those who aren’t data scientists. Before you can understand ML, you first need to understand the field of AI.

AI is a broad field that encompasses the development of intelligent machines capable of simulating human intelligence and performing tasks that typically require human intelligence, such as problem-solving, decision-making, perception, and natural language understanding.

ML is a subset of AI that focuses on algorithms and statistical models that enable computer systems to automatically learn and improve from experience without being explicitly programmed. ML algorithms aim to identify patterns and make predictions or decisions based on data. The majority of AI we deal with today are ML models.

Now that we’ve defined AI & ML, let’s dive into how a Machine Learning Model is created.

MLOps Lifecycle

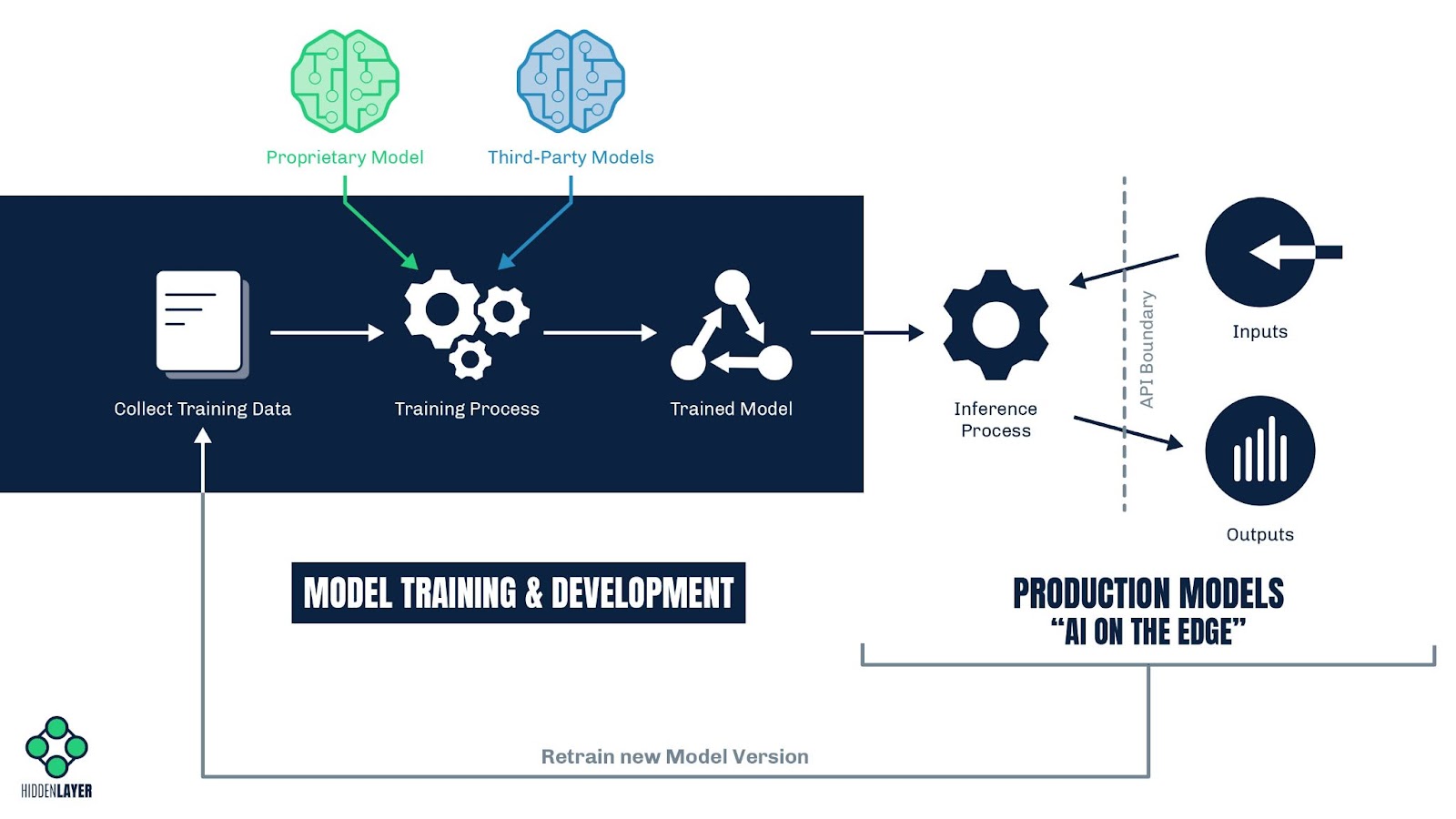

Machine Learning Operations (MLOps) is the set of processes and principles for managing the lifecycle of the development and deployment of Machine Learning Models. It is now important for cybersecurity professionals to understand MLOps just as deeply as IT Operations, DevOps, and HR Operations.

Model Training & Development

Collect Training Data – With an objective in mind, data science teams begin by collecting large swaths of data used to train their machine learning model(s) to achieve their goals. The quality and quantity of the data will directly influence the intent and accuracy of the ML Model. External and internal threat actors could introduce poisoned data to undermine the integrity and efficacy of the ML model.

Training Process – When an adequate corpus of training data has been compiled, data science teams will start the training process to develop their ML Models. They typically source their ML models in two ways:

- Proprietary Models: Large enterprise corporations or startup companies with unique value propositions may decide to develop their own ML models from scratch and encase their intellectual property within proprietary models. ML vulnerabilities could allow inference attacks and model theft, jeopardizing the company’s intellectual property.

- Third-Party Models: Companies trying to bootstrap their adoption of AI may start by utilizing third-party models from open-source repositories or ML Model marketplaces like HuggingFace, Kaggle, Microsoft Azure ML Marketplace, and others. Unfortunately, security controls on these forums are limited. Malicious and vulnerable ML Models have been found in these repositories, making the ML Model an attack vector into a corporate environment.

Trained Model – Once the Data Science team has trained their ML model to meet their success criteria and initial objectives, they make the final decision to prepare the ML Model for release into production. This is the last opportunity to identify and eliminate any vulnerabilities, malicious code, or integrity issues to minimize risks the ML Model may pose once in production and accessible by customers or the general public.
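One simple way to catch integrity issues at this stage is to record a hash of every training file and model artifact when it is approved, then compare against that record before release. The sketch below is a minimal illustration and not a complete control: the function names (`sha256_of`, `build_manifest`, `changed_files`) are our own for this example, and a hash manifest only detects changes made after the baseline was recorded. It cannot tell you whether the baseline data was already poisoned.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map each file under root (by relative path) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(root: Path, manifest: dict) -> list:
    """List files added, removed, or modified since the manifest was built."""
    current = build_manifest(root)
    return sorted(
        name
        for name in current.keys() | manifest.keys()
        if current.get(name) != manifest.get(name)
    )
```

In practice you would store the manifest somewhere the training pipeline cannot write to, and treat any non-empty result from `changed_files` as a reason to stop the release and investigate.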

ML Security (MLSec)

As you can see, AI introduces a paradigm shift in traditional security approaches. Unlike conventional software systems, AI algorithms learn from data and adapt over time, making them dynamic and less predictable. This inherent complexity amplifies the potential risks and vulnerabilities that can be exploited by malicious actors.

Luckily, as cybersecurity professionals, we’ve been down this route before and are more equipped than ever to adapt to new technologies, changes, and influences on the threat landscape. We can be proactive and apply industry best practices and techniques to protect our company’s machine learning models. Now that you have an understanding of ML Operations (similar to DevOps), let’s explore other areas where ML Security is similar and different compared to traditional cybersecurity.

- Asset Inventory – As the saying goes in cybersecurity, “you can’t protect what you don’t know.” The first step in a successful cybersecurity operation is having a comprehensive asset inventory. Think of ML Models as corporate assets to be protected.

  ML Models can appear among a company’s IT assets in three primary ways:

  - Proprietary Models – the company’s data science team creates its own ML Models from scratch.

  - Third-Party Models – the company’s R&D organization may derive ML Models from third-party vendors or open-source projects, or simply call them through an API.

  - Embedded Models – any of the company’s business units could be using third-party software or hardware that has ML Models embedded in its tech stack, making the company susceptible to supply chain attacks. There is an ongoing debate within the industry on how best to provide discovery and transparency of embedded ML Models. Extending the Software Bill of Materials (SBOM) to include AI components is one way. There are also discussions of an AI Bill of Materials (AI BOM).

- File Types – In traditional cybersecurity, we think of files such as portable executables (PE), scripts, macros, OLE, and PDFs as possible attack vectors for malicious code execution. ML Models are also files, but you will need to learn the file formats associated with ML such as pickle, PyTorch, joblib, etc.

- Container Files – We’re all too familiar with container files like zip, rar, tar, etc. ML Container files can come in the form of Zip, ONNX, and HDF5.

- Malware – Since ML Models can present themselves in a variety of forms with data storage and code execution capabilities, they can be weaponized to host malware and be an entry point for an attack into a corporate network. It is imperative that every ML Model coming in and out of your company be scanned for malicious code.

- Vulnerabilities – Due to the various libraries and code embedded in ML Models, they can have inherent vulnerabilities such as backdoors, remote code execution, etc. We predict the volume of reported vulnerabilities in ML will increase at a rapid pace as AI becomes more ubiquitous. ML Models should be scanned for vulnerabilities before releasing into production.

- Risky downloads – Traditional file transfer and download repositories such as Bulletin Board Systems (BBS), peer-to-peer (P2P) networks, and torrents were notorious for hosting malware, adware, and unwanted programs. Third-party ML Model repositories are still in their infancy but are gaining tremendous traction. Many of their terms and conditions release them from liability with a “Use at your own risk” stance.

- Secure Coding – ML Models are code and are just as susceptible to supply chain attacks as traditional software code. A recent example is the PyPI Package Hijacking carried out through a phishing attack. Therefore, traditional secure coding best practices and audits should be put in place for ML Models within the MLOps Lifecycle.

- AI Red Teaming – Similar to Penetration Testing and traditional adversarial red teaming, the purpose of AI Red Teaming is to assess a company’s security posture around its AI assets and ML operations.

- Real-Time Monitoring – Visibility and monitoring for suspicious behavior on traditional assets are crucial in Security Operations. ML Models should be treated in the same manner with early warning systems in place to respond to possible attacks on the models. Unlike traditional computing devices, ML Models can initially seem like a black box with seemingly indecipherable activity. HiddenLayer MLSec Platform provides the visibility and real-time insights to secure your company’s AI.

- ML Attack Techniques – Cybersecurity attack techniques on traditional IT assets grew and evolved through the decades with each new technological era. AI & ML usher in a whole new category of attack techniques called Adversarial Machine Learning (AdvML). Our SAI Research Team goes into depth on this new frontier of Adversarial ML.

- MITRE Frameworks – Everyone in cybersecurity operations is all too familiar with MITRE ATT&CK. Our team at HiddenLayer has been collaborating with the MITRE organization and other vendors to create and maintain MITRE ATLAS, which catalogs the tactics and techniques that can be used to attack ML Models.

- ML Detection & Response – Endpoint Detection & Response (EDR) helps cybersecurity teams conduct incident response to traditional asset attacks. Extended Detection & Response (XDR) evolved it further by adding network and user correlation. However, for AI & ML, the attack techniques are so fundamentally different it was necessary for HiddenLayer to introduce a new cybersecurity product we call MLDR (Machine Learning Detection and Response).

- Response Actions – The response actions you can take on an attacked ML Model are similar in principle to those for a traditional asset (blocking the attacker, obfuscation, sandboxing, etc.), but the execution method is different since it requires direct interaction with the ML Model. HiddenLayer MLSec Platform and MLDR offer a variety of response actions for different scenarios.

- Attack Tools – Microsoft Azure Counterfit and IBM ART (Adversarial Robustness Toolbox) are two of the most popular ML Attack tools available. They are similar to Mimikatz and Cobalt Strike for traditional cybersecurity attacks: red teams can use them to test defenses, and threat actors with malicious intent can use them to exploit ML Models.

- Threat Actors – Though the ML attack tools make adversarial machine learning easy for script kiddies to execute, the prevalence and rapid adoption of AI will entice threat actors to up their game by researching and exploiting the formats and frameworks of machine learning.
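To make the File Types and Malware points above more concrete, here is a minimal sketch of how a pickle file can be inspected without ever loading it. It uses Python's standard `pickletools.genops` to walk the pickle's opcodes and flags the ones that import or call code during unpickling. The opcode set and the `suspicious_opcodes` helper are our own choices for illustration, not an exhaustive or authoritative scanner. The `Demo` class is a harmless stand-in for a weaponized object, and it is only serialized here, never deserialized.

```python
import pickle
import pickletools

# Opcodes that import a callable or invoke one during unpickling.
# This set is an illustrative assumption, not a complete list of risks.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def suspicious_opcodes(data: bytes) -> list:
    """Return (byte offset, opcode name, argument) for each flagged opcode.

    The pickle is only disassembled, never loaded, so nothing in it runs.
    """
    return [
        (pos, opcode.name, arg)
        for opcode, arg, pos in pickletools.genops(data)
        if opcode.name in SUSPICIOUS_OPCODES
    ]


class Demo:
    """Stand-in for a weaponized object: unpickling it would call print()."""

    def __reduce__(self):
        return (print, ("code ran during unpickling",))


benign = pickle.dumps({"weights": [0.1, 0.2]})  # plain data only
risky = pickle.dumps(Demo())                    # serialized, never loaded

print(suspicious_opcodes(benign))
print([name for _, name, _ in suspicious_opcodes(risky)])
```

Keep in mind that many legitimate models also contain these opcodes, because rebuilding framework objects requires importing their classes. A flagged opcode is a reason to review what is being imported or called, not proof of malware on its own.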

Take Steps Towards Securing Your AI

Our team at HiddenLayer believes that the era of AI can change the world for the better, but we must be proactive in taking the lessons learned from the past and applying those principles and best practices toward a secure AI future.

In summary, we recommend taking these steps toward devising your team’s plan to protect and defend your company from the risks and threats AI will bring along with it.

- Build a collaborative relationship with your Data Science Team

- Create an Inventory of your company’s ML Models

- Determine the origin of the ML Models (internally developed, third party, open source)

- Scan and audit all inbound and outbound ML Models for malware, vulnerabilities, and integrity issues

- Monitor production models for Adversarial ML Attacks

- Develop an incident response plan for ML attacks
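As a starting point for the inventory step above, the sketch below walks a directory tree and records files that look like ML Models, based on file extension and, for Zip and HDF5 containers, the file signature at the start of the file. The suffix-to-format map and the `inventory_models` and `container_type` names are assumptions made for this example. A real inventory would also need to cover models called over an API and models embedded in third-party products, which a file walk cannot see.

```python
from pathlib import Path
from typing import Optional

# Illustrative suffix-to-format map; extend it for the formats your teams use.
MODEL_SUFFIXES = {
    ".pkl": "pickle",
    ".pickle": "pickle",
    ".joblib": "joblib",
    ".pt": "PyTorch",
    ".pth": "PyTorch",
    ".onnx": "ONNX",
    ".h5": "HDF5",
    ".hdf5": "HDF5",
}

# File signatures for two common container formats.
ZIP_MAGIC = b"PK\x03\x04"
HDF5_MAGIC = b"\x89HDF\r\n\x1a\n"


def container_type(path: Path) -> Optional[str]:
    """Identify Zip or HDF5 containers from the first bytes of the file."""
    with path.open("rb") as handle:
        header = handle.read(8)
    if header.startswith(ZIP_MAGIC):
        return "zip"
    if header.startswith(HDF5_MAGIC):
        return "hdf5"
    return None


def inventory_models(root: Path) -> list:
    """List candidate model files under root with format and container."""
    records = []
    for path in sorted(root.rglob("*")):
        fmt = MODEL_SUFFIXES.get(path.suffix.lower())
        if path.is_file() and fmt is not None:
            records.append({
                "path": path.relative_to(root).as_posix(),
                "format": fmt,
                "container": container_type(path),
            })
    return records
```

Each record could then be enriched with the model's origin (internally developed, third party, open source) and fed into the scanning and monitoring steps in the list above.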